A Comparison of Reinforcement Learning and Deep Trajectory Based Stochastic Control Agents for Stepwise Mean-Variance Hedging

Abstract

We consider two data-driven approaches to hedging, Reinforcement Learning and Deep Trajectory-based Stochastic Optimal Control, under a stepwise mean-variance objective. We compare their performance for a European call option in the presence of transaction costs under discrete trading schedules. We do this for a setting where stock prices follow Black-Scholes-Merton dynamics and the "book-keeping" price for the option is given by the Black-Scholes-Merton model with the same parameters. This simulated data setting provides a "sanitized" lab environment with simple enough features where we can conduct a detailed study of strengths, features, issues, and limitations of these two approaches. However, the formulation is model free and could allow any other setting with available book-keeping prices. We consider this study as a first step to develop, test, and validate autonomous hedging agents, and we provide blueprints for such efforts that address various concerns and requirements.

1 Introduction

Recent advances in data driven hedging have been heralded as one of the most exciting applications of machine learning (ML) and in particular reinforcement learning (RL) in derivatives pricing and risk management ([BMW22], [KR19], also see [She19]). One of the reasons for this excitement is that it is relatively easy to: (1) simulate or generate data for the behavior of hedging instruments, (2) model the value of a trading strategy over time given such data – even for realistically modeled incomplete markets where trades cause friction and attract transaction costs, and (3) compute hedging or risk-management objectives of such strategies. The hedging objectives could be based on how the strategies perform as measured against book-keeping prices for to-be-hedged portfolios or against to-be-made (or to-be-received) payoffs for these to-be-hedged portfolios. This means that it is straightforward to model the behavior of the trading strategies even as consistent prices or sensitivities might be difficult to compute in conventional models. Therefore one can train data-driven agents that optimize the hedging and risk-management objectives and perform better than conventional sensitivity-based and model-based hedging approaches that are currently often used for such hedging. Data-driven agents can also take into account other factors and automate hedging even in cases where conventional hedging approaches require ad-hoc decisions and tweaks by traders and risk managers to address the shortcomings of the existing approaches.

Several banks and other financial institutions have started to apply these new approaches to hedging and risk management (see [Man22] for instance). However, in our experience, one of the main concerns the quants or the traders express regarding the data-driven hedging agents is the model risk aspects of deploying such solutions [Res11], and there seems to be a need for a framework to manage the model risk which may arise from using them. To contribute to wider adoption of data-driven hedging solutions across the financial industry, we are studying here how we can apply RL and Deep Trajectory-based Stochastic Optimal Control (DTSOC) approaches to hedging problems (in particular the stepwise mean-variance hedging setting), what are commonalities and differences, what are advantages, features, issues, and limitations. We also opine on how such issues and limitation may be addressed.

1.1 Scope of This Work

This paper concentrates on hedging strategies that optimize the mean and variance of hedged portfolios (containing hedged instruments and hedging instruments) by optimizing a cumulative stepwise mean-variance objective, but the setting will apply to many trading strategies with different objectives (and we will discuss other settings in future papers).

To define the set up, we will need to model how the prices of the hedging and the hedged instruments evolve, how the trading strategy to do so performs (including transaction costs), and define the stepwise objectives to be optimized over in terms of the prices, costs, etc. involved in the strategy.

In mean-variance hedging, one assumes that both the prices of the hedging and the hedged instruments to define the hedging objectives are known. This might be the case if one tries to learn hedging strategies from settings in which both hedged instruments (say, vanilla options) and hedging instruments (underlier and cash) are liquidly quoted or where one has a book-keeping model for the hedged instruments that will provide the price. The first setting might make sense if one has over the counter (OTC) or exchange-traded options on the books and needs to hedge them with the underlying even though at least some such options are liquidly traded. The second setting might make sense for a trader in an investment bank that hedges an option on the books which will be valued by that book-keeping model111 We call a model a book-keeping model if it reflects the price or value of an instrument in some way but does not necessarily take all details and features of the instrument and/or the market into account. For instance, Black-Scholes type models and similar ”simpler” models are often used to mark instruments in systems of record (SOR) and they are validated and approved for such use because even though they do not reflect all features and details, they reflect value well enough for purpose. In particular, if the model is contained in a system of record, we call it a SOR model. Sometimes, for purposes of margins or collateral or trading through central counterparties, using such simpler book-keeping models has the advantage that there will be less uncertainty and fewer arguments about the model parameters and settings, compared to more complicated models. in the system of record (SOR) and the trader will be judged by their performance against the prices contained in the SOR.

There might be settings in which neither the hedged instrument nor any closely related instrument are liquidly quoted or in which there are no appropriate and flexible enough book-keeping models available. In these circumstances, one would need stepwise or global objectives that are defined without reference to (book-keeping or other) prices of the to-be-hedged instruments. These could be for instance objectives that consider how well the trading strategy replicates or risk-manages all cashflows of the to-be-hedged instruments, for instance in a squared difference sense, leading to quadratic hedging (we leave this case to a future paper).

We consider a case in which we have a model for how appropriate risk factors and prices of the hedging and the hedged instruments evolve that are needed to compute the objectives. What we mean by that is that we have some implementation that is able to generate as many trajectories of these instrument prices and other risk factors as needed. Based on these trajectories, we will be able to simulate the trading strategy and their performance and compute the appropriate objectives. We will here assume that the trading from our trading strategies does not impact how the prices of the hedging and the hedged instruments evolve, just how the trading strategy and the objectives evolve, which is a common assumptions in current works. We do not specify how this model is obtained, setup, or run; only that we can obtain those trajectories as needed. As such, the model could be a well-specified traditional model or it could be a trained generative model ([BRMH22], [CRW21]). Here, we will work with simulated data from Black-Scholes model and defer the settings involving synthetic data from generative models to future work.

We will test and validate our agents on simulated data from such models and leave the question whether such models trained on simulated or generated data can perform well on observed data for our settings to future work. There are indications in the literature that models trained on appropriate simulated or synthetic data can perform well when applied to observed data from the market (as in [BRMH22]).

We are not using the model(s) or any information about the model(s) in the learning and training beyond using it to generate trajectories and data including stepwise objectives. In this way, we are operating in a model-free, data-driven setting.222Particular setups might include state variables and/or compute features that are based on a particular model or setting, such as an option Delta computed under the assumption that the dynamics of the hedging instrument can be approximated by a Black-Scholes type model, but the algorithms to learn trading strategies or value functions do not use or require any model details beyond what is contained in the state and feature space. Given the above discussion, we are actually operating in a rich-data (even infinite-data) regime, corresponding to a well-defined conventional or generative model.

The rest of the paper is structured as follows: First, we will discuss the instrument and trading strategy setup and mean-variance hedging. Then, we will discuss how Reinforcement Learning (RL) conceptualizes the problem and the setting, introduce the RL techniques and algorithms that we will apply, and then introduce the deep trajectory-based empirical stochastic optimal control (DTSOC) approaches and algorithm. We will then specify the experimental setup and the models, report and analyze outcomes, visualize and interpret outcomes, and analyze sensitivity of the results with respect to various choices. Finally, we conclude and discuss some possible future directions.

1.2 Related Work

We discuss here some of the existing work in the literature on the use of RL and neural networks for pricing and hedging options (see [HXY21] for a broader survey of RL applications in finance).

In [Hal20], a European call option in the Black-Scholes model (trading at discrete times, no transaction costs) is hedged and priced using a -learning method (there, called QLBS method). The objective is minimizing the sum of the initial cash position and the weighted discounted sum of variances of the hedging portfolio at all subsequent time steps. This setting and objective can be identified with a corresponding MDP setup and thus solved with standard RL/DP approaches such as -learning [Hal20]. The price at maturity has to be equal to the final payoff and prices respective -functions at earlier times are determined by Dynamic Programming or other standard RL approaches.

The mean-variance hedging problem in a setting which includes transaction costs is considered in [CCHP21]. However, they define the reward objective in global fashion - it is the expected total cost for the entire hedging and its variance. They use versions of -learning and other methods to address this problem. They do not treat stepwise mean-variance hedging.

The stepwise mean-variance hedging under transaction costs for a single option is considered in [KR19]. The hedging agent is trained with an RL algorithm which seems to be a version of -learning. Also, stepwise mean-variance hedging under transaction costs with various RL algorithms is considered in [DJK+20].

In [MK21], authors consider a case where the stock price333Actually, the authors are using the value of the underlying index, and are assuming that the underlying index is tradeable, which is not completely accurate (futures on the index are traded but the spot index, in particular through changes to the index, is not tradeable and not entirely replicable through self-financing strategies). and the option price are taken from higher frequency data acquired from an option exchange and used to train an agent with an RL algorithm. They use a stepwise hedging objective; however, it is defined in terms of mean and standard deviation (and in terms of P&L and absolute value of P&L for the sample version). If the option is quoted and traded on an exchange, it might be less necessary to hedge or replicate said option.

There exists a large literature on applications of neural networks for option pricing. Most of these works consider the neural networks as a numerical tool to accelerate calculation tasks (such as model calibration [Her16], solving PDEs [WHJ21], etc.) more efficiently and in higher dimensions (also see [RW19] for a literature review). Among these, we distinguish the line of works initiated in [H+16] where neural networks are used to solve the stochastic optimal control problem numerically. More concretely, the DTSOC method in [H+16] is a setup for trajectory based empirical deep stochastic optimal control with both stepwise and final objectives/costs. Finally, the "deep hedging" paper [BGTW19] presents a trajectory based empirical deep stochastic optimal control approach to minimizing some global objectives related to replication and/or risk management of some final payoff, however, it does not consider a stepwise objective, just a global/final objective.

2 Framework

2.1 General Setup

We consider here dynamic trading, hedging, and/or risk management problems. These problems are typically posed on some universe of given instruments (including cash/bank account) and the trader or agent has to select a strategy that determines how much of each instrument to hold at each time to achieve a certain trading, hedging, or risk management objective. As is common in such settings, we assume that there is a given fixed grid of times at which trades can occur and that such trades can incur transaction costs. We also assume that the strategies are self-financing. The objectives can be defined at a certain time horizon or they could be defined for each time period between trading times (stepwise objectives). Here, we will consider a stepwise objective. These stepwise objectives do not necessarily have to directly correspond to trading gain or loss or transaction cost or any financial item, they can also be defined in terms of moments or other properties of the distribution of specific gains, losses, costs, or appropriate combination thereof.

On a basic level, this is a stochastic sequential decision problem. The prices of the given instruments will follow some stochastic process and it is assumed that we are given a model or generator for this process. Based on those prices and any other appropriate risk factors that can be computed based on the history of said prices, we need to decide at each trading time-step what the new holdings of the given instruments will be and thus what the necessary trade sizes are (so holdings and/or trade sizes are the appropriate decision variables), taking into account the transaction costs and other impacts associated with that trade. Given those holdings and cash-at-hand at the beginning of the no-trade interval, the value of all those holdings and cash at the end of the no-trade interval follows from the stochastic evolution of the prices of the given instruments and interest on the cash account. From this, one can define the stepwise objective, which often is based on an appropriately defined change in strategy value over that period. We assume here that we have an implementation or model that describes the evolution of the trading strategy and also the computation of the stepwise objectives.

2.2 Trading Strategy Corresponding to Hedged Portfolio

We consider the case of a hedged portfolio. This means that we are given a fixed holding of the to-be-hedged portfolio.444Which could be easily extended to a priori given holding level over time. It is assumed that the price of the to-be-hedged portfolio is given as , corresponding to a quoted/observed or a computable book-keeping price. We do not rely on how this price is computed, only that we are provided with some implementation of it. It is also assumed that we are given other "hedging" instruments also and we denote their prices by (in general a vector) and we denote the holding of them in our strategy at time with (a vector of corresponding length).

For our examples, we will typically assume a single hedging instrument. Finally, we assume that excess cash from the trading strategy is deposited in a money-market account with a certain given interest accrual and discount factor (and that negative cash balances can be borrowed at the same interest accrual and discount factor). We assume that we initially start with only the fixed holding and some initial cash but no holdings in the hedging instruments (i.e., ). The trading times are given as with . If referred to, is a time just before time 0 with no holding in hedging instruments.

Consider time , right before the trade: The value of the hedging part of the strategy then is

| (1) |

This reflects that at the last trade time , the portfolio was rebalanced to the appropriate holdings , transaction costs were charged , the thus resulting cash balance attract stepwise interest (interest accrual corresponds to dividing by appropriate stepwise discount factor ). At the same time, the holdings in the hedging instruments are now valued based on the new price of the hedging instruments.

It helps to rewrite the equation as follows:

| (2) |

In this completely linear setting without stochastic interest rates (and assuming that the transaction cost is linear in the instrument prices for positive multipliers , ), it is possible to "hide" the discounting in the value definitions in the following sense: with and , we have

| (3) |

In particular, this means for the increment

| (4) |

and we thus do not get any contribution or impact of the initial cash position beyond an additive shift.

If we are looking at the change of value in the total portfolio (both hedged and hedging instruments), we obtain

| (5) |

where we denoted and . In the following, we will work with values discounted back to initial time and will omit the tilde over the variables.

Extensions to stochastic interest rates and discounting, differential rates, more involved funding policies are possible, require further notation and details, and can be handled similarly, but are not necessary for the settings we discuss here. Differential rates and more involved funding policies could be included in the framework by adding bank accounts for positive and negative balances and other funding instruments to the hedging instruments and adding constraints to the decision variables (only positive holdings of positive bank account, only negative holdings of negative bank account i.e. bank loan, restricting secured loans/repos to the amount held in the corresponding collateral, etc.).

2.3 Stepwise Mean-Variance Objective

Often, one would like to control both the mean and variance of the stepwise gains or losses by maximizing mean of gains penalized by a multiple of variance (or equivalent minimizing mean of loss combined with variance). When sampling, one can approximate the variance by the second moment (see [Rit17] for a discussion) which means that one can express that objective by the expectation of costs

| (6) |

This can now be used as a cost or reward in RL or DTSOC algorithms. Costs to unwind the position at maturity (or convert it into the position to match the payoff of the hedged instrument) can be included as final cost if needed.

2.4 Relation to Global Mean-Variance Objective

In some settings, one tries to control only the mean and variance globally, i.e. a mean and variance combination of only the final wealth is optimized over:

where is the wealth/value process for the overall hedged portfolio as derived earlier in this section (see, for instance [KR19]).

Using the following discrete time decompositions for the mean and variance of the terminal wealth under appropriate assumptions for the variance, for instance a random walk assumption as in [Rit17],

and

[KR19] rewrite the mean variance hedging (MVH) objective in the following way,

| (7) |

and thus obtain a stepwise objective as the one discussed in the last subsection.

2.5 Modeling the Hedging and Hedged Instruments

The formulations of costs/rewards in the above sections only depend on discounted prices of the hedging instruments, hedged instrument, and transaction costs; but do not need any further information about the models for these instruments and prices. With the costs/rewards given above, the agent only needs to determine how many units to hold in each of the hedging instruments after rebalancing at each rebalancing time , i.e. . Alternatively, one could specify the units to be sold or bought at any given time together with the initial position; or assume that the amount sold or bought is proportional to the time between trades or some other variable and then specify that proportional trading rate in that setting.

For the hedged instrument, one needs to know the position in the instrument (i.e., whether long or short or some particular holdings at particular times) and additional information about the instrument - at least time to maturity and/or time. The minimal state for modeling this decision problem are these discounted prices (hedging and hedged instruments), the amount of the hedging instruments, time, and whatever state the models for these prices and the transaction costs need.

As for the models for the prices of the hedging and hedged instrument, one can model them under pricing or observational measure, with some differences in parameters and calibration or fitting. For the hedging instruments, one can use conventional quantitative finance models, possibly with hidden factors, such as Black-Scholes, Local Volatility Model, Heston model (with stochastic variance), quadratic rough Heston model, etc. One could use generative models such as GANs or appropriately trained neural Stochastic Differential Equations (SDE)s or similar. Finally, one could use historically observed data over one instrument or a cross section of similar instruments together with appropriate assumptions to generate possible future price movements or future prices.555See also [CSS21] for a discussion of possible approaches together with the associated ”model” risks.

For the hedged instrument, one of the challenges is that the prices of the hedged and the prices of the underlying instruments need to be consistent in an appropriate sense because otherwise they might allow arbitrage strategies. One could implement some book-keeping model which would give the price of that hedged instrument in terms of state variables and other parameters. In a trading setup in an investment bank or a hedge fund, holdings will be marked by some model within a system of record, and the book-keeping model would be that model in the system of record. For faster computation, one could try to learn a surrogate that approximately replicates that SOR model but can be run much faster. Alternatively, if the reference prices for the hedged instrument are observable, one may directly model such prices either as dependent on underlying prices or jointly with underlying prices, with appropriate conditions. This, however, will not work for hedged instruments that are illiquidly or rarely traded or for which no consistent pricing is known.

One could try to determine a consistent model according to some conceptualization, such as replication with controlled risk or market making according to certain strategies and models. However, the construction and validation of such consistent models is very hard, and under such circumstances, one most probably should design trading and risk management strategies that describe and manage the risk without requiring book-keeping and/or consistent modeling, such as hedging that tries to control the replication and risk management of the payoffs of the to-be-hedged instrument rather than targets a certain book-keeping value that does not adequately reflect the nature and risk of the instrument.

Particularly simple examples concern the hedging of a short call option that we sold to some counterparty or the hedging of a long call option that we bought, use the Black-Scholes model with constant (or time-dependent parameters), with log-Euler time discretization (which is exact assuming the parameters are properly chosen), with the Black-Scholes Formula for a call as book-keeping model, and size of holding as action and decision variable.

In formulas, this would mean for the price of the hedging instrument

| (8) |

or

| (9) |

such that for an appropriate function and a standard normal with covariance . For the price of the call option, we use the Black-Scholes formula as book-keeping model.

| (10) |

The minimal state would consist of with the last element being the action (or being impacted by the action). One can also add other features and information to the state space that the agent could potentially use to make better decisions or that allow the agent to be learned more easily.

If one considers more complicated models based on conventional quantitative finance models, one would simulate the (discounted) underlying instruments with that model and one would replace the Black-Scholes formula with an appropriate pricing formula or pricer under that model (an appropriate future value computation under the book-keeping model). If these models have additional factors, these factors would be added to the state. If some of these factors are latent or hidden factors, one would need to add some mechanism how these latent factors can be estimated or taken into account by some process on observed quantities, add these observed quantities to the state, and learn agents that only depend on observed and observable quantities, not the latent factors that will in general be unknown (and unknowable) to the agent.

In general, trading in hedging instruments could impact the prices in those hedging instruments either temporarily or permanently. We assume here that the hedging instruments are traded liquidly and that the hedged instrument is such that it can be hedged without impacting the prices of the hedging instruments. To a certain extent, short-term price impact can be modeled by and absorbed into the transaction cost terms.

As discussed in abstract and introduction, here we focus on Black-Scholes type models to investigate the agents and algorithms in a setting where the model and the features are simple enough, and will consider other models in future work.

3 Reinforcement Learning

Reinforcement Learning (RL) is a framework for solving problems consisting of a learning agent interacting with an environment according to a decision policy. The interaction of the agent with the environment is accompanied by receiving rewards (perhaps with delay) and the agent must learn to act according to an optimal policy which maximizes the expected sum of reward received over a given time horizon. The environment consists of everything external to the agent, and is the source of the agent’s observations as well as the reward.

RL framework can be effectively applied to an important class of sequential decision-making problems which can be recursively decomposed into sub-problems, where the result of taking a particular action does not depend on the prior history of the system up to that point. We first start by formalizing the setup for those problems.

3.1 Markov Decision Processes

We begin by defining a Markov decision process (MDP). A discounted finite horizon MDP is defined by a tuple , where is the set states, is the set of all actions, is the transition probability density , is the immediate reward (could also be stochastic), is the reward discount factor and is the time horizon (). By taking any action at the state , defines the probability distribution of the next state and , the distribution of the immediate reward.

A policy maps a state to a probability distribution over the set of actions . The interaction of an agent with the environment is formalized as follows, following a policy , starting from the state , at each time step , the agent observes the state and takes an action according to the policy . When the agent performs action , the environment makes a stochastic state transition to a new state according to the probability distribution . The agent receives a reward drawn from the distribution . This reward is a random variable, because it depends on all of the stochastic transitions up to time . The agent is allowed to make decisions, however, the transition density and the reward distribution are dictated by the environment. While the agent will observe transitions and rewards, the underlying model and details for and are not known to the agent in general.

Therefore, the evolution of the MDP following the policy (also called the trajectory) is given by,

The value of a state for a given policy is the cumulative reward across the trajectories starting from and following along the way. The value function corresponding to the policy is defined as,

| (11) |

The action-value function or the Q-function at state and action is the value of taking the action and following the policy onwards,

| (12) |

The value function can be expressed as the expectation of the Q-function across all possible actions,

| (13) |

The Q-function satisfies the Bellman expectation equation,

| (14) |

The optimal policy gives the maximum action-value function for any and ,

| (15) |

The optimal Q-function satisfies the Bellman optimality equation (see [SB18]):

| (16) |

3.2 Solving MDPs with Q-Learning

The tabular Q-learning algorithm finds the optimal Q-function (and hence the optimal policy ) satisfying (16). To formalize the notion of optimal policy, for each Q-function, we define the greedy policy as the policy that chooses the action maximizing the Q-function,

| (17) |

The optimal policy is defined as greedy policy for the optimal Q-function 666If there is more than one maximizer, the greedy policy can be defined as a uniform distribution over such maximizers..

In order to find the optimal -function, define the Bellman optimality operator as follows,

| (18) |

It is observed that equation (16) describes a fixed point of the Bellman operator in a suitable function space. In fact, it can be shown that the Bellman operator is a -contraction and hence admits a fixed point777The proof in the tabular case requires .. The Q-value iteration algorithm approximates this fixed point by initializing a random and constructing a sequence of action-value functions by defining . It is shown that converges to the optimal value function at a linear rate (see [Put14], [SB18], also [FWXY20]).

| (19) |

The Q-learning (tabular) algorithm approximates the updates in equation (19) by replacing the expectation with sample observations. At any step , after taking some action , the algorithm observes the immediate reward and the state transition and updates the -values for the state-action pair by,

The pseudo-code for the tabular -learning is given below,

Remark 1.

Convergence. For the infinite horizon with finite state and section case, the convergence of the -learning algorithm can be proven if all actions and states are sampled infinitely many times (it does not matter how we select the actions), learning rates for updating the are small and do not decrease too quickly (we considered a constant learning rate in the pseudo-code above). The proof relies on a tool from online optimization called the stochastic approximation method (see [Put14], [SB18]).

Remark 2.

Action exploration.The -learning convergence theorem states that it does not matter how the actions are selected as long as they are sampled infinitely many times. One can choose a greedy policy per equation (17). However, this has the drawback of compounding errors, namely, greedy actions based on sub-optimal -estimates may result in getting stuck in local optima and failure to estimate -values with higher values.

One solution to this is to sample an -greedy policy at each step. In the pseudo-code above, before updating the estimate, one varies the policy by randomization,

| (20) |

and the target in the -update takes the form of

3.2.1 Q-Learning with Function Approximation

The -learning method introduced above constructs a lookup table of the size . This table is prohibitively large for almost any reasonably interesting MDP in practice (curse of dimensionality). An approach to tackle the curse of dimensionality in tabular Q-learning is to approximate the optimal Q-function with function approximators [Put14]. For example, the function approximation could be parametrized as a linear regression, a regression/decision tree or a deep neural network (hence the name deep Q-network – DQN [MKS+15]). With a given function representation , the estimates for the -values for any state-action pair can be computed. As a result, in -learning with function approximators, instead of populating the table, we approximate the parameters of the function approximation to the -function.

Recall that by Bellman optimality equation for the optimal Q-function (14), we want to have the following for any state-action pair,

| (21) |

To a given transition , we assign the error,

| (22) |

The goal is to minimize the Bellman Mean Squared Error,

| (23) |

In Q-learning with function approximation, upon taking an action and receiving rewards and new state, instead of updating the table by taking the running average, one updates the parameters in . In training DQN, updating the weights can be done by stochastic gradient descent (SGD). A description of the algorithm is given below in (3).

Tricks for Training DQNs

-

•

Experience Replay. In the -learning with function approximation, the actions are generated in an -greedy fashion from the estimate of the -function at the current step. This usually leads to correlated samples. To tackle this issue, experience replay method is used. At each time step , the transition is saved into the replay memory . In deep -learning, at each training episode, a random mini-batch from is sampled to train the neural network via stochastic gradient descent.

-

•

Training Stability. By examining the Bellman squared error loss, it is seen that ground truth for the loss minimization are generated on the fly by reusing the estimate for the in the target term. This may cause instabilities in the optimization as the target may change too quickly based on changes in through gradient descent. A trick to tackle this is the lazy update of target network: the parameters in the -function as part of the target are updated less frequently.

The pseudo-code for the full DQN training algorithm is given below [FWXY20],

3.3 Policy Gradient Methods

Methods in the previous section fall under the dynamic programming approach to RL ([SB18]). In tabular and deep Q-learning methods, the goal is to approximate the optimal Q-function using the dynamic programming structure of the MDP. The optimal policy is then the greedy policy according to the optimal Q-function.

In the policy gradient approach, the policy is approximated directly. For an MDP defined by , let denote a stochastic policy parameterized by (this also includes the deterministic policies as a special case). This parametrization can be a deep neural network for instance, defining a distribution over the set of actions for each state.

Let denote the value function corresponding to the long term discounted reward collected by the agent following policy starting from the state . Therefore, the goal is to find the optimal policy through solving the following optimization problem.

| (24) |

The following classical result ([Wil92]) calculates the gradient of the objective above with respect to . Consider a trajectory resulting from rolling out the policy . Define the accumulated rewards along the path as , also the probability of the trajectory as

Theorem 3.

(Policy Gradients.) Consider the finite horizon roll-out of the policy from the initial state . Then it holds that,

Remark 4.

The above representation of the gradient of the objective is useful because it allows for calculating the gradient by rolling out the policy and estimating an expected value. Note that this does not need any separate and detailed knowledge of the state transition density or the reward model, one can use the realized states and reward values along the observed trajectories. Also note that for a neural network parametrization of , the term can be calculated efficiently via backpropagation.

Remark 5.

The characterization of the gradient in Theorem 3 can be stated in a more general form. Let be a random variable with known p.d.f. . Let be some cost function defined as

for some arbitrary function that does not depend on . Then one has,

Policy gradient formula gives an unbiased estimate of ,

| (25) |

This leads us to the vanilla version of the REINFORCE Algorithm ([Wil92]) for policy gradients.

While REINFORCE algorithm works in theory, in practice, it suffers from high variance. The following property of the gradients enables us to lower the variance of the estimator without introducing bias.

Using the above property, one can add a "baseline" in the empirical policy gradient without introducing bias in :

| (26) |

More generally, the baseline can be state-dependent,

| (27) |

We end this section by observing that one can further exploit the temporal structure of an MDP to further reduce the variance of the policy gradient estimator (25). The idea is to split the path-wise sum of rewards into parts prior to step and after step .

Removing terms in the reward summation that do not depend on the current action will help reduce variance. Therefore, denoting , we have the following form for the policy gradient estimator,

| (28) |

3.3.1 Deep Deterministic Policy Gradients

In this section, we briefly review the Deep Deterministic Policy Gradient (DDPG) method introduced in [LHP+15]. DDPG can be thought as the extension of Deep Q-learning to continuous action spaces. Recall that in Deep Q-learning, the goal is to find the optimal action-value function and then finding the optimal policy as the greedy policy with respect to , needing to solving a maximization problem .

In the case of finite discrete actions, solving the maximization problem is possible. However, when the action space is continuous, this needs exhaustive search over the continuous space which is typically not feasible. In addition, implementing common optimization algorithms would make calculating a computationally expensive subroutine. DDPG algorithms tackle this problem by using a target policy network to propose an action which tries to approximate .

On the policy gradient side, policy learning in DDPG aims to learn a deterministic policy producing actions maximizing . DDPG assumes that the Q-function is differentiable with respect to action, therefore, learning is done by performing gradient ascent (with respect to policy parameters only and treating Q-function parameters as constants) to solve,

| (29) |

Note that the above formulation allows the policy to be both continuous and deterministic. Below, we present the pseudo-code summary of the DDPG algorithm 888Adapted from https://people.eecs.berkeley.edu/~pabbeel/cs287-fa19/.,

4 Deep Trajectory-Based Stochastic Optimal Control

Deep Trajectory-Based Stochastic Optimal Control (DTSOC), proposed in [H+16] (also see [RSTD22] for an exposition), is a method for solving stochastic control problems through formulating the control problem as optimizing over a computational graph, with the sought controls represented as (deep) neural networks. The approximation power of the deep neural networks can mitigate the curse of dimensionality for solving dynamic programming problems.

We briefly review the setup of the method here. Consider a stochastic control problem given by the following underlying stochastic dynamics,

| (30) |

where, is the state, is the control (agent’s action) and is a stochastic disturbance impacting the transition between times and .

In the models for the hedging instruments derived from (discretized) SDEs, the will be the Brownian increments in the (discretized) SDEs for time respective the time step from to , respective . The price of the hedging instrument as well as the amount of them held would be part of the state and the action would either directly give the new amount to be held or an increment or rate that would allow the new amount to be computed.

We assume that the actions are given as deterministic or stochastic feedback controls or . One can extend the state with path-dependent extra state that can be computed from current and previous state, action, and disturbances; and also with particular precomputed features that might lead to more efficient training of agents or more efficient agents which also extends the set of controls that can be written as feedback controls.

The actions can be constrained to come from a set of admissible actions:

where and are inequality and equality constraints. We assume that these constraints are already taken into account in the feedback controls such that will be an admissible action or give a distribution over admissible actions.

We assume a stepwise cost (or negative reward) function is specified and also a final cost .

Given a deterministic or probabilistic policy in feedback form that gives admissible actions, we generate an episode

and obtain a cumulative cost

| (31) |

The stochastic optimal control problem now minimizes the expected cumulative cost, conditional on starting state . If is not fixed, this will be a function of . We thus try to minimize or varying the policies .

With some given functional form (such as DNN) with an appropriate parametrization (for example, determine weights and biases while activation functions are fixed for complete feedforward DNN) as deterministic policy, we obtain

| (32) |

One now jointly optimizes over all policies respective over all parameters of such to optimize the above cumulative costs (if is not fixed, this will also be a function of and we would need to take an appropriate expectation over or keep as a parameter).

The controls at each time step could be stacked into a computational graph with a loss function given in (32). For each roll out of the control problem, this computational graph takes the sequence of disturbances as input and gives the accumulated cost inside the expectation in (32) as output. As demonstrated in Figure 1, the computational graph has the following features:

-

•

The deterministic policy at time step is represented by some network with appropriate architecture, here for example a complete feed-forward neural network, with parameters

-

•

The transition of the system to a new state, based on the system dynamics is encoded in the inter-block connections between and .

-

•

Defining the cumulative cost up to time as,

the horizontal connections on top of the network, sums up the immediate costs and gives the total accumulated cost at the end of the episode (when ).

-

•

There are also connections from and to but they are not shown in the figure to avoid clutter.

Note that based on a discretization of the time horizon, the computational graph will have embedded DNN. If these embedded DNN do not share parameters and have trainable parameters each, the entire computational graph has then trainable parameters.

If desired and appropriate for the application, one can introduce an additional future discount factor which balances the importance of optimizing immediate versus future costs by setting .

The pseudo-code for training the neural network computational graph is described in Algorithm 7 below.

4.1 Relationship to FBSDE Formulation of Stochastic Control

In general, one can consider a stochastic control problem in which some functional defined by running and final costs (which depend on the evolution of some controlled forward SDE and on the control) is optimized over that control. This leads to coupled forward backward stochastic differential equations (FBSDE) and non-linear PDEs (See [Per11] or [Pha09] for an introductory treatment). In our setting, the control that the agent tries to optimize does not impact the forward SDEs describing the evolution of the prices of the instruments, it only impacts the trading strategy, leading to a controlled backward SDE only.

With being the factors and prices for the (hedging) instruments and being the value of the hedging strategy, we have the system (see [Hie19])999 In this subsection, we use notation from the FBSDE literature as adapted to the pricing and hedging domain and do not follow the generic notation for RL or trajectory-based approaches. The state in RL or trajectory-based approaches would contain , , , , and whatever is needed to compute terms and costs (or equivalent information), the action/control would be some parametrization of , the stochastic disturbance would be the or . ,

| (33) |

| (34) |

where plays the role of a control or strategy and the functional to be optimized (typically, minimized) is

| (35) |

For the example of an European option, has to replicate the appropriate payoff .

One can define

or

and add it to the stochastic system, looking for a minimum of

One can derive FBSDE characterizing the optimal controls (both primal and dual/adjoint) as well as PDEs characterizing them, but we will here concentrate on approaches that try to directly optimize over the given system for , , , and .

Upon time-discretization, one obtains stochastic control problems defined on (controlled) FBSE ( standing for "difference") where now the running cost can depend on the forward and backward components and the control at both the beginning and end of each time-period.

Applying a simple Euler-Maruyama discretization for both and , we obtain

| (36) |

| (37) |

This can be used to time-step both and forward.

Now, in general can no longer perfectly replicate but still needs to be constrained to replicate the instrument in an appropriate sense, such as by minimizing the squared differences .

Similarly, the running costs need to be accumulated

| (38) |

and the stochastic optimal control problem will try to minimize

A time-discrete setting allows one to incorporate more general transaction costs for [Hie19, Section 7.2] by more complicated generators

| (39) |

and also more complicated running costs

| (40) |

which could include running costs that depend on the profit and loss of some strategy across the corresponding time interval.

Since with appropriate generator, final values, and settings, the backward component corresponds to a replication or risk-management price of the instrument under appropriate models, one could use the solution of the FBSE and/or PE in appropriate form as the book-keeping and/or consistent model in hedging or risk management setups that require such models, as in the stepwise mean-variance hedging setup discussed here. For such setups, it might be advantageous to introduce several processes, one reflecting a book-keeping or consistent model according to some set of assumptions (and thus providing such a book-keeping or model price for use after applying standard FBSDE techniques) while one or several others reflect the performance of other strategies that will be measured against such models.

In [EHJ17, Hie19, Hie21, GYH20, GYH22, LXL19, LXL21], path-wise deepBSDE methods for such problems are discussed, at least applied to pricing and risk management where there is only a final cost (or a cost at the earlier of reaching a barrier or maturity) - as in a quadratic hedging setup.

DeepBSDE methods represent the strategy as a DNN depending on appropriate state (or features computable from such state). Path-wise forward deepBSDE methods generate trajectories of and starting from initial values and according to the current strategy. They then use stochastic gradient descent type approaches such as ADAM to improve the strategy until an approximate optimum is reached. If the initial wealth is not given, it will be determined by the optimization as well. If the starting value of the risk factor vector is fixed, would be a single value, otherwise it would be a function of . The optimization problem would typically represent this function as a DNN. Derived so far for certain kinds of final costs or where one attempts to replicate the final payoff as well as possible, path-wise backward deepBSDE methods make the same assumptions, but on each generated forward trajectory of , they start with an appropriate final value of ( for the final payoff case), compute a corresponding trajectory of by stepping backward in time, and try to minimize the range of .

Quadratic hedging for European options has been considered with forward and backward path-wise methods in [LXL19, LXL21] for linear pricing and in [YHG20] for nonlinear pricing (differential rates) while forward path-wise methods were introduced earlier by [EHJ17]. The barrier option case is treated with forward methods in [GYH20, GYH22]. We are not aware of any path-wise deepBSDE (or other FBSDE-related) method being used for stepwise mean-variance hedging problems and might treat this setting in future work.

One difference between the setup discussed in this section and other approaches considered in the paper is that here the backward SDE or SE is written in such a way that it uses parts of the forward model (i.e. ) and might not as written satisfy self-financing exactly but only up to discretization accuracy. In this way, this is a (more) model-based approach. However, one can rewrite the BSDE for so that it only uses the stochastic increment of (i.e., written in rather than using model details about ) and one can rewrite the BSE so that it only requires observations of at trading times and perfectly preserves self-financing similarly to what we wrote in earlier subsections, obtaining methods that will be more similar to the ones discussed there.

5 Experimental Setup and Model Specification

Following [KR19], we consider the example of a European call option with strike price and maturity on a non-dividend-paying stock. The strike price and option maturity are considered as fixed parameters. It is assumed that the risk-free rate is zero and that the option position is held until maturity. Rebalancing of the hedging portfolio is allowed at fixed (often regular) times and trades are subject to transaction costs. The trained hedging agent is expected to learn to hedge an option with this specific set of parameters. It is possible to train parametric agents that can hedge a parametrized set of options (such as calls with various strikes), but we will not do so here. We use the Black-Scholes model for the simulation environment where the stock dynamics is given by a geometric Brownian motion

| (41) |

and trading (buying and selling) of stock incurs a transaction cost which is assumed to have the functional form (see [KR19]),

| (42) |

where is the change in the stock position.

The default parameters for the stock, the option, and the market friction are as given in Table 1.

| Parameter | Value |

|---|---|

| 5 % (rate of return) | |

| 20 % (volatility) | |

| 0.0 % (interest rate) | |

| 100 | |

| 100 | |

| 30 (option maturity- days) | |

| 0.01 (friction parameter) | |

| 0.01 (quadratic cost factor) |

5.1 Feature Engineering and Model Architecture

The following variables were chosen to represent the state space of the problem, given as inputs to the RL and DTSOC agent at each time step,

-

•

Time , ,

-

•

Stock price at time ,

-

•

Option price ,

-

•

Option Delta101010Computed or modeled with an appropriate model. ,

-

•

Current stock holding111111Impacted by agent’s action in previous step(s). .

The input to the agent should give the agent all of the information needed for optimal decision making. This leads to feature engineering to identify necessary or helpful features to add to the state. In [KR19], a minimal set of variables including the current price of stock, either time to maturity or time, and current stock position is considered. In section 5.1.1, we test and discuss whether it is necessary and/or reasonable to include option Delta as a feature in the state.

As for the neural network formulation of deep-MVH, one can either parametrize the hedge strategy (the number of stock to hold at time ) or parametrize the rebalancing rate. The two parametrizations are related through the equation,

| (43) |

We choose to parametrize the rebalancing rate of the stock holding by a feedforward neural network with three hidden layers with 10, 15 and 10 hidden units. This is similar to how other implementations in the literature have used relatively shallow deep networks with three layers to parametrize each individual control (see [H+16], [SXZ21]). RELU was used as the nonlinear activation function in all hidden layers. The actor component of the RL-DDPG agent is a feedforward neural network with 3 layers with 12 neurons in each layer. The critic component consists of a feedforward neural network of 3 layers with 24 neurons in each layer.

5.1.1 Should Option Delta be Included as a State Feature?

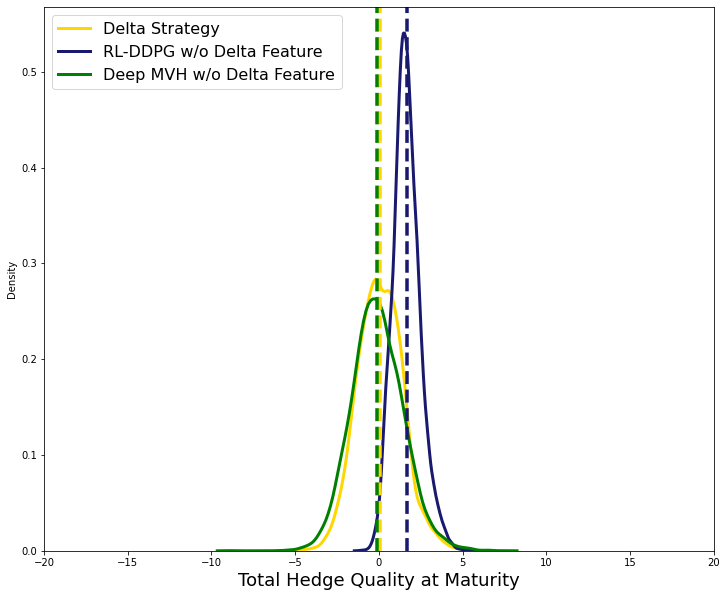

Since the option Delta is provided as a state space feature to both deep-MVH and RL-DDPG models, it is reasonable to ask what the model learns when the transaction cost is zero - since the optimal strategy for the continuous trading limit case (option Delta) is already given to the model as part of the input. To make the learning more difficult for the agents, we removed the option Delta from the state space and trained both RL-DDPG and the deep MVH models to hedge with no transaction cost.

Figure 2 shows that, even without access to the option Delta, deep-MVH performs as well as the Delta hedge if not better. The RL-DDPG strategy, however, seems to underperform relative to the deep-MVH as the mean of the total hedging cost has shifted to the right. This shows that we may drop the option Delta feature at least for the deep-MVH model, however, we decided to keep it to have identical state space specifications for both algorithms.

The box plots in Figure 3 below compare the statistics of decisions made by the deep-MVH model and the Delta hedge strategy in the intermediate steps. It is observed that if the option Delta is not provided to the deep-MVH model, the average decisions in terms of number of stocks to hold are reasonably close to those of Delta hedge strategy. It is also seen that the differences between the strategies decrease in the second half of the life of the option.

We use SHAP values (see Section 7.1 for a brief review) to gain insight how much the agents rely on each state feature in the absence of option Delta. Figure 4 shows the SHAP variable importance values for each of the 30 intermediate policies in deep MVH.

To summarize, we take average of the SHAP variable importance for each variable along all the time steps and show the results for RL-DDPG and deep-MVH agents in Figure 5.

We repeated the same test as above but this time with non-zero transaction cost (). Figure 6 shows that without option Delta as input, RL-DDPG agent performs worse than deep-MVH also when transaction costs are present, but still better than the Delta hedge strategy.

Figure 7 summarizes how important the different state features are to RL-DDPG and deep-MVH agents if there are transaction costs and if option Delta is not an input. The ordering of features based on SHAP importance remains the same for RL-DDPG regardless whether transaction costs are absent or present. While option price was most important for deep-MVH without transaction costs and stock price (and other features) were much less important in that case, with transaction costs, stock price became the most important feature while option price now became slightly less important than the stock price.

5.2 Model Training

The model was trained on a computer with 8 core i7-11850 CPU 2.50GHz processor and 32.0 GB memory. For the RL-DDPG model, both actor and critic are trained with respect to a mean squared error (MSE) loss with the TensorFlow implementation of ADAM optimizer, actor learning rate and critic learning rate are set to and respectively. The smoothing parameter for updating the weights of the target network is . We trained the DDPG-model for one epoch consisting of 5000 training episodes. Following [LHP+15], for exploration noise, we used the Ornstein-Uhlenbeck process with mean-reversion and volatility parameters fixed at 0.15 and 0.2 respectively. The above parameters were kept fixed for both zero and non-zero transaction cost regimes.

The deep-MVH model was trained using ADAM optimization with Pytorch off-the-shelf parameters. Batch normalization and dropout () were applied to each layer. The learning rate was fixed at initially and was decreased dynamically as training epochs progressed. The model was trained for 100 epochs and for each training epoch, a fresh batch of 50,000 training samples was simulated.

6 Outcome Analysis

In this section we examine the performance of the trained RL and deep-MVH under various scenarios. To compare the performance of the algorithms, we studied the histogram for the total hedge quality at maturity (also called the hedge P&L or hedge slippage- the wealth from the underlying portfolio minus the transaction cost and the final option pay-off) for various test episodes. Note that based on our conventions, positive values denote trader’s loss. Note that all the testing is conducted on a sample test of 1000 episodes, drawn independently from the training sample.

6.1 Approximating Delta Hedge under Zero-Transaction Cost

As a first step, we perform a sanity check and look at the performance of the data driven hedging strategies in the zero-transaction cost regime. Our expectation is that up to the error introduced by the time discretization, the RL and deep-MVH strategies would approximate the Delta hedge. The performance of the three hedging strategies is shown in Figure 8. It is observed that both RL and deep-MVH lead to a distribution of the final hedge P&L that is close to the Delta hedge one.

6.2 Increasing Transaction Cost

For this test, the transaction cost parameter was increased gradually as all other parameters were kept fixed. The Delta hedging strategy is expected to underperform the other strategies as the transaction cost increases, however, we expect the data driven algorithms to learn the trade-off between the hedge quality and transaction cost at training time and hence exhibit a more stable behavior. The results of the tests are shown in Figure 9 below.

6.3 Longer Option Maturity

At a high level, hedging an option with longer maturity could be more difficult as it translates to exposure to more uncertainty. To test this, we increased the option maturity and examined the performance of RL-DDPG and deep-MVH.

In Figure 10, it can be observed that the performance of deep-MVH model deteriorates noticeably as the option maturity increases. Other studies in the literature [SXZ21] reported similar behavior for other hedging problems.

This behavior may be related to the fact that the computational graph in deep-MVH becomes deeper as maturity increases (as opposed to the RL algorithm which trains a stationary policy that takes time as input) and this may affect how well the model can be trained.

6.4 Increasing the Risk-Aversion

To examine how the strategies trade off transaction cost with hedging P&L variance, we consider the effect of increasing the risk aversion parameter . Figure 11 below demonstrates the distribution of total hedging cost for the agents. It is seen that the dispersion of the distributions (more noticeably for the deep-MVH agent) decreases as the value of the risk aversion parameter increases, as expected.

6.5 Increasing Asset Volatility

All things equal, more volatile markets will mean that hedge performance will be more volatile (and more dispersed) as well. In order to examine this effect, the volatility of the stock was increased and the performance of the hedging agents was examined. The results are presented in Figure 12. It is observed that the distributions of the realized hedging cost for all three strategies become considerably wider as the volatility increases with the RL strategy showing a larger dispersion. Also, at high volatility levels, the mode of the distribution for RL appears to shift to the negative territory (meaning that gains from stock trades make up for transaction costs) while the deep-MVH maintains the mode around zero.

7 Visualization and Interpretation

In our experience, one of the first reactions from quants or traders, when exposed to the methods discussed here, is to point out their "black-box" nature. The trained agents generate suggested optimal actions without giving explanations. They do not learn parameters or parametric functions within an otherwise well-defined, parsimonious, and understandable model. In this way, ML models, in particular deep neural network-based models like DDPG or deep-MVH, achieve often state-of-the-art or at least good performance without parsimony and transparency. Their complexity results in increased predictive power but requires additional work to understand, explain, and control the agents (A discussion of the black box risk of using ML approaches in quantitative finance, in particular for pricing and hedging, can be found in [CSS21]).

Research directions in ML such as Explainable Artificial Intelligence (XAI) and interpretable ML try to produce methods for either providing post-hoc human understandable explanations for the behavior of the model or design ML algorithms which are explainable by design (see [GMR+18] for a survey). However, there has also been critique of using ML interpretation methods as the principal way to establish trust in the model (see [Lip18]).

For instance, one can find inconsistent definitions and motivations for interpretability in the literature and there are different opinions about what constitutes an interpretable model. Also, different interpretability methods can produce several different interpretations of the outputs and decisions of a given model that are contradicting each other or are inconsistent with each other.

Therefore, it is not enough to produce one set of or a variety of interpretations with these interpretability and explainability approaches. While such approaches and methods can produce valuable insight (in particular if several of these approaches give consistent results) or can inspire further investigations, more needs to be done to establish trust in the models and agents and their decisions. One still needs to assess whether the model is adequate and one needs to establish whether it was properly trained and tested. Generalization performance needs to be investigated. Model robustness and performance under generic and stressed conditions need to be studied. Conditions under which model performance deterioates or it becomes less confident in its decisions must be identified and appropriate monitoring processes and safeguards need to be implemented. Only through this wide variety of actions can one establish trust, understanding, and appropriate control of such models.

7.1 SHAP Values

SHAP (SHapley Additive exPlanations) is a method for local interpretation of a ML model output ([LL17]). The method attributes each individual prediction to the different predictors. For the sake of completeness, we give a high-level introduction of the concepts first.

For a ML model, the input features of the model are considered as the players of a game and the model output is the game outcome. Shapley values assign an importance score to each input (or parts of the input). For a set of input features, consider the index set , all features in a certain coalition cooperate towards the outcome with the default . Shapley values redistribute the total outcome value among all features based on their average individual contribution across all possible coalitions . The individual contribution of the feature in the coalition is defined by,

The Shapley value for the feature is then calculated by averaging across the coalitions,

where is a weighting term based on the number of choices for the subset S. Shapley values satisfy the properties of efficiency (), Symmetry, dummy and additivity (see [LL17] for details). The interpretation of the Shapley values for an ML model is the following. In this case, the outcome of the game is the output of the model and Shapley values ) measure the "significance" of each feature .

The SHAP framework introduced in [LL17] comes with a unifying perspective on connecting estimation of Shapley value (computing the Shapley value is NP-Hard) with several other popular explainability methods. Furthermore, they propose SHAP values as a unified measure of feature importance and prove them to be the unique solution respecting the criteria of local accuracy, missingness, and consistency (see [LL17]). Individual Shaply values can be averaged across the data to produce a SHAP variable importance, therefore, for the th feature, the SHAP variable importance is defined by,

In the following, we apply SHAP to both the RL and deep-MVH agents. In both cases, we treat the agent’s policy as a function from the state space to action space . In that sense, we are treating the policy similar to a model in the supervised learning setting. We compare the trained agents in two different environments, zero transaction cost and non-zero transaction cost (), with the results shown in Figure 13.

It is observed that in the zero-transaction cost regime, the value of the baseline (option Delta) has the highest SHAP variable importance. This seems reasonable, as this can be interpreted as the agent trying to follow the optimal strategy as close as possible - or as identifying a strategy close to the Delta hedge as approximately optimal. In the high transaction cost regime, the variable importance scores are clearly different, where now the current stock position is the second most important variable. This can be understood as the trained RL agent exhibiting a trading "inertia" by identifying a no-transaction band surrounding the current stock position in order to avoid too frequent transactions. Doing so will decrease the cost (see Figure 14 and Figure 15).

8 Sensitivity Analysis

Deploying complex models in financial applications requires careful analysis of the model behavior - and more importantly - the way it depends on various modeling choices made throughout the development process. More explicitly, model risk regulations (see [Res11] for instance) require the model developer and the model user to perform sensitivity analysis and robustness testing and consider their results when deciding whether and under what circumstances the model should be used. This is what we will do in this section.

The notions of robustness and sensitivity can have different meanings in various modelling contexts. We consider sensitivity analysis as a method for understanding the relationship between input parameters and outputs and follow the framework developed in [SHB+14] (called Parameter Space Analysis (PSA)) to study methods for solving MDPs:

“…the systematic variation of model input parameters, generating outputs for each combination of parameters, and investigating the relation between parameter settings and corresponding outputs”.

The following sections contain the results of tests designed based on our interpretation of the tasks outlined under PSA framework, covering training, optimization and parameter uncertainty.

8.1 Training Convergence

Deep RL models are known to suffer from instabilities (that is, nonmonotonic and/or overly noisy behavior) when training (see [NIA+18] for instance). During training, the cumulative reward may be unstable as training episodes progress and result in a non-monotonic curve. As explored in [NIA+18], generation of training data through an exploring agent and presence of noise in estimates of policy gradients are considered among the key reasons for this behavior. To best study whether such behavior occurs and impact model training and model behavior, several training runs under similar but different settings should be run and analyzed.

Figures 16 and 17 show the results when we repeated the training procedure with various random seeds. The learning curves in 17 show clear monotonicity and convergence. While Figure 16 is noisy, there is some monotonic behavior and convergence under that noise. These learning curves thus also show reasonable monotonicity and convergence.

Remark 6.

When comparing the training behaviors in Figures 16 and 17, one must keep in mind that the training of the Deep MVH model proceeds in several training epochs, meaning, gradient updates over average loss over a batch of 5000 episodes for several times (also note that in each batch, a fresh set of training data is simulated). On the other hand, the training curves in Figure 16 show progress for each single episode. As a result, the training of the RL-DDPG is on mini-batches of , whereas the Deep MVH training is on mini-batches of . This explains the noisiness observed in the RL-DDPG learning curves compared to the Deep MVH learning curves (see also Figure 18 below).

8.2 Sensitivity to Hyper-parameters

RL agents are known to be sensitive to the architectural choices for the neural networks and the training hyper-parameters. Therefore, understanding the effect of various design choices on performance of the RL agents is an important area of research (see [HIB+18], [IHGP17] for instance). In this section, we assess the training and performance of both RL-DDPG and deep-MVH agents under various choices of hyper-parameters.

8.2.1 Discount Factor

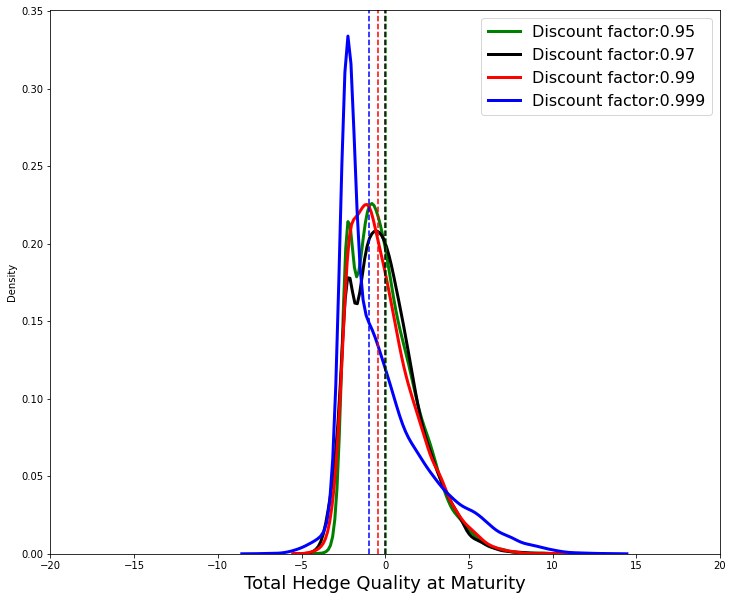

As noted in [ARS+20] for a series of benchmark tasks, the choice of reward discount factor impacts the performance of RL agents strongly. We tested the sensitivity to this parameter by varying it through the range of . For this test, we kept the transaction cost at zero and all other parameters fixed.

Figures 19 and 20 below show the total hedge quality of the RL-DDPG and deep-MVH agents under various choices of . While it seems that the changes in the deep-MVH performance are within the range of inherent variability and all follow a similar shape, some noticeable changes are observed in the RL-DDPG agent’s total hedge quality and the shape of the distribution changes the closer the discount factor is to one.

8.2.2 Learning Rates

In this section, we examine the effects of the learning rates in training the neural networks in deep-MVH and RL-DDPG. For the RL-DDPG model, we considered the common range of values for the actor-critic learning rates in other domains (see [ARS+20], [HIB+18], [IHGP17]). More specifically, we kept the learning rate for the actor network smaller than the learning rate for the critic network.

The hyper-parameter grid of actor-critic learning rate pairs considered is as follows: . The learning curves for each choice of the hyper-parameter are plotted in Figure 21. The pair seems to result in smaller (better) optimization objective, however, the differences are relatively modest given the inherent variability in the training algorithm (also see Figure 16).

The plot in Figure 22 below shows the learning curves for the deep-MVH training to various choices of learning rate in ADAM optimizer (we turned off the dynamic decreasing of the learning rate for this plot). The learning rate parameter (as implemented in our default setting) seems to result in the best performance in the optimization for the parameters tested.

8.2.3 Neural Network Architecture

In this section we assess the effect of architectural choices on the training and performance of both deep MVH and RL-DDPG agents. For the deep-MVH architecture, recall that the depth of the computational graph depends on the time discretization. This dependence was assessed by examining the performance for the longer maturities (see Section 6.3). Here we examine how the width and the depths of each control at each time-step (which translates into total width and total depth of the global computational graph) impacts the training and the performance of the agents.

For this test, we considered the following feedforward architectures for each time-step policy function: , where the length of each list denotes the depth of the network and each entry denotes the number of neurons in the corresponding layer. The plots in Figure 23 and 24 show the results. It is observed that the deeper architectures achieve smaller (better) optimization objective while exhibiting more noise in training. It is also interesting to note that deeper architectures result in lower level of noise in total hedge P&L at maturity.

We repeat the same test for the actor component of the RL-DDPG agent while keeping the critic component fixed and considering architectures ranging from a linear regression on the state variable to a deep feed forward architecture with 5 layers (see [IHGP17]). The results are presented in Figures 25 and 26. The learning curves are similar across the architectures. Shallower networks seem to generate P&L histograms with mode further shifted to the negative side but the shape of the distributions is similar considering the noise in the training process and model outputs.

Neural Network Parametrization of Deep-MVH

As mentioned in Section 5.1, we parametrized the stock position rebalancing rate by a neural network first and then translated the network output to the hedging decision (number of stocks to hold – see equation (43)). To test the sensitivity of the deep-MVH model performance to this choice of parametrization, we implemented an alternative architecture where at each time step, the position in the hedge instrument is directly parametrized by a neural network. Figure 27 shows that the overall performance of the deep-MVH model does not show significant impact to this neural network parametrization. However, we notice that the histogram of total hedging cost for deep-MVH with direct hedge position parametrization has lower dispersion.

Conclusions and Future Directions

We considered reinforcement learning and trajectory-based stochastic optimal control approaches to stepwise mean-variance hedging in presence of transaction costs and discrete trading times. The main goal of the study was to describe both approaches in a self-contained manner and examine the performance of these two approaches on simulated data to be able to arrive at a clear assessment of their advantages and limitations in the simplest setting with fewest uncertainties. Extensive outcome analysis and sensitivity testing was performed for this purpose. It was observed that both RL-DDPG and deep-MVH agents approximate the optimal hedging strategy (Delta Hedge) when the transaction cost is zero and they outperformed Delta hedge as transaction cost levels increased. Both algorithms showed reasonable behavior in tests where the risk aversion parameter and market volatility increased. So far, the trajectory-based stochastic optimal control approach (deep MVH) does not perform well for longer maturities yet, but we leave the design and testing of trajectory-based approaches that perform better in this setting to future work.

There are several directions to pursue in future work. One area worth studying would be to look at other vanilla derivatives (puts, Asians, etc.) and at portfolios of such to compare to the results on a single call as presented here, including the parametric case (parametric both in the type of instrument (strike, maturity, …) and in the risk aversion and objective, as done in [BMW22] for a deep-hedging type of approach for different hedge objectives). In a similar vein, one can look at more exotic instruments with a stronger market data dependency (such as Cliquets) or with embedded optionality such as Americans or Bermudans where in addition to hedging decisions exercise decisions need to be made, which would require an extension of the setup.

Another important direction would be related to the models of the hedging instruments and the hedged instrument which here were Black-Scholes models and formulas with given, pre-calibrated parameters. The proposed approaches and methods should be further tested on models that capture more features of the market and correspond to the types of models used to simulate and price options in investment banks that engage in market making and competitive pricing and hedging of such instruments. It should be studied how one can calibrate such models best to current and ongoing market data and how such approaches perform when run on actually observed prices and quotes of hedging and hedged instruments. Instead of using calibrated or fitted models, one could also consider the use of generative data-driven models such as various market models to create a data-driven end-to-end setup (For an example of generative models with GANs for commodity see [BRMH22], for a variety of models for equity using GANs, normalizing flows, and/or neural SDEs see [WM22, WWP+21, WBWB19, CRW21]). Finally, other network architectures for deep-MVH could be explored as well as specialized training strategies that are adapted to the very deep computational graphs that occur in the trajectory-based stochastic optimal control approach.