- 2G

- Second Generation

- 3G

- 3 Generation

- 3GPP

- 3 Generation Partnership Project

- 4G

- 4 Generation

- 5G

- 5 Generation

- AA

- Antenna Array

- AC

- Admission Control

- AD

- Attack-Decay

- ADSL

- Asymmetric Digital Subscriber Line

- AHW

- Alternate Hop-and-Wait

- AMC

- Adaptive Modulation and Coding

- AP

- Access Point

- ANN

- artificial neural network

- APA

- Adaptive Power Allocation

- AR

- autoregressive

- ARMA

- autoregressive moving average

- ARIMA

- auto regressive integrated moving average

- ATES

- Adaptive Throughput-based Efficiency-Satisfaction Trade-Off

- AWGN

- additive white Gaussian noise

- BPNN

- back propagation neural network

- BB

- Branch and Bound

- BD

- Block Diagonalization

- BER

- bit error rate

- BF

- Best Fit

- BLER

- BLock Error Rate

- BPC

- Binary power control

- BPSK

- binary phase-shift keying

- BPA

- Best pilot-to-data power ratio (PDPR) Algorithm

- BRA

- Balanced Random Allocation

- BS

- base station

- CAP

- Combinatorial Allocation Problem

- CAPEX

- Capital Expenditure

- CBF

- Coordinated Beamforming

- CBR

- Constant Bit Rate

- CBS

- Class Based Scheduling

- CC

- Congestion Control

- CDF

- Cumulative Distribution Function

- CDMA

- Code-Division Multiple Access

- CL

- Closed Loop

- CLPC

- Closed Loop Power Control

- CNN

- convolutional neural network

- CNR

- Channel-to-Noise Ratio

- CPA

- Cellular Protection Algorithm

- CPICH

- Common Pilot Channel

- CoMP

- Coordinated Multi-Point

- CQI

- Channel Quality Indicator

- CRM

- Constrained Rate Maximization

- CRN

- Cognitive Radio Network

- CS

- Coordinated Scheduling

- CSI

- channel state information

- CSIR

- channel state information at the receiver

- CSIT

- channel state information at the transmitter

- CUE

- cellular user equipment

- D2D

- device-to-device

- DCA

- Dynamic Channel Allocation

- DE

- Differential Evolution

- DFT

- Discrete Fourier Transform

- DIST

- Distance

- DL

- downlink

- DMA

- Double Moving Average

- DMRS

- Demodulation Reference Signal

- D2DM

- D2D Mode

- DMS

- D2D Mode Selection

- D-MIMO

- distributed multiple input multiple output

- DPC

- Dirty Paper Coding

- DRA

- Dynamic Resource Assignment

- DSA

- Dynamic Spectrum Access

- DSM

- Delay-based Satisfaction Maximization

- ECC

- Electronic Communications Committee

- EFLC

- Error Feedback Based Load Control

- EI

- Efficiency Indicator

- ELM

- extreme learning machine

- eNB

- Evolved Node B

- EPA

- Equal Power Allocation

- EPC

- Evolved Packet Core

- EPS

- Evolved Packet System

- E-UTRAN

- Evolved Universal Terrestrial Radio Access Network

- ES

- Exhaustive Search

- FDD

- frequency division duplexing

- FDM

- Frequency Division Multiplexing

- FER

- Frame Erasure Rate

- FF

- Fast Fading

- FSB

- Fixed Switched Beamforming

- FST

- Fixed SNR Target

- FTP

- File Transfer Protocol

- GA

- Genetic Algorithm

- GBR

- Guaranteed Bit Rate

- GLR

- Gain to Leakage Ratio

- GOS

- Generated Orthogonal Sequence

- GPL

- GNU General Public License

- GRP

- Grouping

- GRU

- gated recurrent unit

- HARQ

- Hybrid Automatic Repeat Request

- HMS

- Harmonic Mode Selection

- HOL

- Head Of Line

- HSDPA

- High-Speed Downlink Packet Access

- HSPA

- High Speed Packet Access

- HTTP

- HyperText Transfer Protocol

- ICMP

- Internet Control Message Protocol

- ICI

- Intercell Interference

- ID

- Identification

- IETF

- Internet Engineering Task Force

- ILP

- Integer Linear Program

- JRAPAP

- Joint RB Assignment and Power Allocation Problem

- UID

- Unique Identification

- IID

- Independent and Identically Distributed

- IIR

- Infinite Impulse Response

- ILP

- Integer Linear Problem

- IMT

- International Mobile Telecommunications

- INV

- Inverted Norm-based Grouping

- IoT

- Internet of Things

- IP

- Internet Protocol

- IPv6

- Internet Protocol Version 6

- ISD

- Inter-Site Distance

- ISI

- Inter Symbol Interference

- ITU

- International Telecommunication Union

- JOAS

- Joint Opportunistic Assignment and Scheduling

- JOS

- Joint Opportunistic Scheduling

- JOELM

- jointly optimized extreme learning machine

- JP

- Joint Processing

- JS

- Jump-Stay

- KF

- Kalman filter

- KKT

- Karush-Kuhn-Tucker

- L3

- Layer-3

- LAC

- Link Admission Control

- LA

- Link Adaptation

- LC

- Load Control

- LOS

- Line of Sight

- LP

- Linear Programming

- LS

- least squares

- LSTM

- long short-term memory

- LTE

- Long Term Evolution

- LTE-A

- LTE-Advanced

- LTE-Advanced

- Long Term Evolution Advanced

- M2M

- Machine-to-Machine

- MAC

- Medium Access Control

- MANET

- Mobile Ad hoc Network

- MC

- Modular Clock

- MCS

- Modulation and Coding Scheme

- MDB

- Measured Delay Based

- MDI

- Minimum D2D Interference

- MF

- Matched Filter

- MG

- Maximum Gain

- MH

- Multi-Hop

- mMIMO

- massive multiple input multiple output

- MIMO

- multiple input multiple output

- MINLP

- Mixed Integer Nonlinear Programming

- MIP

- Mixed Integer Programming

- MISO

- Multiple Input Single Output

- ML

- machine learning

- MLP

- multilayer perceptron

- MLWDF

- Modified Largest Weighted Delay First

- MME

- Mobility Management Entity

- MMSE

- minimum mean squared error

- MOS

- Mean Opinion Score

- MPF

- Multicarrier Proportional Fair

- MRA

- Maximum Rate Allocation

- MR

- Maximum Rate

- MRC

- maximum ratio combining

- MRT

- Maximum Ratio Transmission

- MRUS

- Maximum Rate with User Satisfaction

- MS

- mobile station

- MSE

- mean squared error

- MSI

- Multi-Stream Interference

- MTC

- Machine-Type Communication

- MTSI

- Multimedia Telephony Services over IMS

- MTSM

- Modified Throughput-based Satisfaction Maximization

- MU-MIMO

- multiuser multiple input multiple output

- MU

- multi-user

- NARX

- nonlinear autoregressive network with exogenous inputs

- NAS

- Non-Access Stratum

- NB

- Node B

- NE

- Nash equilibrium

- NCL

- Neighbor Cell List

- NLP

- Nonlinear Programming

- NLOS

- Non-Line of Sight

- NMSE

- Normalized Mean Square Error

- NN

- neural network

- NORM

- Normalized Projection-based Grouping

- NP

- Non-Polynomial Time

- NRT

- Non-Real Time

- NSPS

- National Security and Public Safety Services

- O2I

- Outdoor to Indoor

- OFDMA

- orthogonal frequency division multiple access

- OFDM

- orthogonal frequency division multiplexing

- OFPC

- Open Loop with Fractional Path Loss Compensation

- O2I

- Outdoor-to-Indoor

- OL

- Open Loop

- OLPC

- Open-Loop Power Control

- OL-PC

- Open-Loop Power Control

- OPEX

- Operational Expenditure

- ORB

- Orthogonal Random Beamforming

- JO-PF

- Joint Opportunistic Proportional Fair

- OSI

- Open Systems Interconnection

- PAIR

- D2D Pair Gain-based Grouping

- PAPR

- Peak-to-Average Power Ratio

- P2P

- Peer-to-Peer

- PC

- Power Control

- PCI

- Physical Cell ID

- Probability Density Function

- PDPR

- pilot-to-data power ratio

- PER

- Packet Error Rate

- PF

- Proportional Fair

- P-GW

- Packet Data Network Gateway

- PL

- Pathloss

- PPR

- pilot power ratio

- PRB

- physical resource block

- PROJ

- Projection-based Grouping

- ProSe

- Proximity Services

- PS

- Packet Scheduling

- PSAM

- pilot symbol assisted modulation

- PSO

- Particle Swarm Optimization

- PZF

- Projected Zero-Forcing

- QAM

- Quadrature Amplitude Modulation

- QoS

- Quality of Service

- QPSK

- Quadri-Phase Shift Keying

- RAISES

- Reallocation-based Assignment for Improved Spectral Efficiency and Satisfaction

- RAN

- Radio Access Network

- RA

- Resource Allocation

- RAT

- Radio Access Technology

- RATE

- Rate-based

- RB

- resource block

- RBG

- Resource Block Group

- REF

- Reference Grouping

- ReLU

- rectified linear unit

- RLC

- Radio Link Control

- RM

- Rate Maximization

- RNC

- Radio Network Controller

- RND

- Random Grouping

- RNN

- recurrent neural network

- RRA

- Radio Resource Allocation

- RRM

- Radio Resource Management

- RSCP

- Received Signal Code Power

- RSRP

- Reference Signal Receive Power

- RSRQ

- Reference Signal Receive Quality

- RR

- Round Robin

- RRC

- Radio Resource Control

- RSSI

- Received Signal Strength Indicator

- RT

- Real Time

- RU

- Resource Unit

- RUNE

- RUdimentary Network Emulator

- RV

- Random Variable

- SAC

- Session Admission Control

- SCM

- Spatial Channel Model

- SC-FDMA

- Single Carrier - Frequency Division Multiple Access

- SD

- Soft Dropping

- S-D

- Source-Destination

- SDPC

- Soft Dropping Power Control

- SDMA

- Space-Division Multiple Access

- SE

- spectral efficiency

- SER

- Symbol Error Rate

- SES

- Simple Exponential Smoothing

- S-GW

- Serving Gateway

- SINR

- signal-to-interference-plus-noise ratio

- SI

- Satisfaction Indicator

- SIP

- Session Initiation Protocol

- SISO

- single input single output

- SIMO

- Single Input Multiple Output

- SIR

- signal-to-interference ratio

- SLNR

- Signal-to-Leakage-plus-Noise Ratio

- SMA

- Simple Moving Average

- SNR

- signal-to-noise ratio

- SORA

- Satisfaction Oriented Resource Allocation

- SORA-NRT

- Satisfaction-Oriented Resource Allocation for Non-Real Time Services

- SORA-RT

- Satisfaction-Oriented Resource Allocation for Real Time Services

- SPF

- Single-Carrier Proportional Fair

- SRA

- Sequential Removal Algorithm

- SRS

- Sounding Reference Signal

- SU-MIMO

- single-user multiple input multiple output

- SU

- Single-User

- SVD

- Singular Value Decomposition

- SVM

- support vector machine

- SVR

- support vector machine for regression

- TCP

- Transmission Control Protocol

- TDD

- time division duplexing

- TDMA

- Time Division Multiple Access

- TDL

- tapped delay line

- TETRA

- Terrestrial Trunked Radio

- TP

- Transmit Power

- TPC

- Transmit Power Control

- TTI

- Transmission Time Interval

- TTR

- Time-To-Rendezvous

- TSM

- Throughput-based Satisfaction Maximization

- TU

- Typical Urban

- UE

- user equipment

- UEPS

- Urgency and Efficiency-based Packet Scheduling

- UL

- uplink

- UMTS

- Universal Mobile Telecommunications System

- URI

- Uniform Resource Identifier

- URM

- Unconstrained Rate Maximization

- UT

- user terminal

- V2V

- vehicle-to-vehicle

- V2X

- vehicle-to-everything

- VR

- Virtual Resource

- VoIP

- Voice over IP

- WAN

- Wireless Access Network

- WCDMA

- Wideband Code Division Multiple Access

- WF

- Water-filling

- WiMAX

- Worldwide Interoperability for Microwave Access

- WINNER

- Wireless World Initiative New Radio

- WLAN

- Wireless Local Area Network

- WMPF

- Weighted Multicarrier Proportional Fair

- WPF

- Weighted Proportional Fair

- WSN

- Wireless Sensor Network

- WWW

- World Wide Web

- XIXO

- (Single or Multiple) Input (Single or Multiple) Output

- ZF

- zero-forcing

- ZMCSCG

- Zero Mean Circularly Symmetric Complex Gaussian

IEEE Copyright Notice

© 2023 IEEE. Personal use of this material is permitted. Permission from IEEE must be obtained for all other uses, in any current or future media, including reprinting/republishing this material for advertising or promotional purposes, creating new collective works, for resale or redistribution to servers or lists, or reuse of any copyrighted component of this work in other works

A Comparison of Neural Networks for

Wireless Channel Prediction

††thanks: The authors are with KTH Royal Institute of Technology.

Oscar Stenhammar and Gabor Fodor are also with Ericsson AB.

Abstract

The performance of modern wireless communications systems depends critically on the quality of the available channel state information (CSI) at the transmitter and receiver. Several previous works have proposed concepts and algorithms that help maintain high quality CSI even in the presence of high mobility and channel aging, such as temporal prediction schemes that employ neural networks. However, it is still unclear which neural network-based scheme provides the best performance in terms of prediction quality, training complexity and practical feasibility. To investigate such a question, this paper first provides an overview of state-of-the-art neural networks applicable to channel prediction and compares their performance in terms of prediction quality. Next, a new comparative analysis is proposed for four promising neural networks with different prediction horizons. The well-known tapped delay channel model recommended by the Third Generation Partnership Program is used for a standardized comparison among the neural networks. Based on this comparative evaluation, the advantages and disadvantages of each neural network are discussed and guidelines for selecting the best-suited neural network in channel prediction applications are given.

Index Terms:

6G mobile communication, channel aging, channel prediction, channel state information, deep learning, machine learning.Introduction

As the sixth generation (6G) of wireless communication technologies and services emerges, higher expectations for mobile broadband services are set by end users. Concepts of the evolving 5G and emerging 6G networks, such as distributed multiple input multiple output systems rely critically on the availability of up-to-date channel state information (CSI). However, obtaining accurate CSI is non-trivial since the channel evolves over time as the scattering environment and the position of the user equipment (UE) change. The evolution of the channel is often referred to as channel aging and poses a major challenge in the design of modern wireless systems. To meet this challenge, channel prediction has emerged as a key tool to combat channel aging [1, 2]. Most commonly, channel prediction is incorporated by exploiting time series of past channel estimations. Using predicted CSI, it is possible to improve the performance of wireless communication, even in the presence of high mobility and rapidly changing channels. Accurately updated CSI allows an adaptive transmitter to proactively tune the communication parameters, such as the transmit power, constellation size, and coding rate to enhance the network performance.

A general and widely used method to characterize the evolution of the wireless channel is autoregressive (AR) models [1]. This approach is model-based because it relies on analytical models of the dynamical evolution of the channel. In the modeled-based approach, the wireless channel is modeled as a linear combination of the previous realizations of the channel with some additive process noise. Conventionally, model-based methods such as Kalman and Wiener filtering have been used for channel prediction. Assuming that the channel evolves according to an AR model with Gaussian noise and the second-order statistics of the AR process are known or can be acquired, Kalman filtering is optimal in a mean squared error (MSE) sense [3]. However, with increasing bandwidth and number of antennas, the complexity of Kalman filters grows relatively fast. Furthermore, the computational complexity is proportional to the square of the amount of previous channel data used in the model [1]. To ensure satisfactory performance, it is often necessary to use higher-order models that employ many parameters. Thus, the inherent trade-off between the model order and the associated computational complexity often limits the performance of traditional model-based methods [4].

A recently proposed method to overcome channel aging is to use predictor antennas mounted on vehicles [2]. The predictor antenna is specifically designed for vehicles moving at high speed and is typically placed on the exterior of the vehicle in front of the main antenna. In this way, the predictor antenna can estimate the channel from the position that the main antenna will reach soon. For vehicles moving at high speed, it is a valuable suggestion. However, mounting predictor antennas on legacy vehicles may not be viable in practice. Arguably, a more economical and viable solution is to find a prediction scheme with satisfactory performance and to update the software instead of installing hardware on existing connected vehicles.

In light of the above considerations, the increasing popularity and improvements of neural networks over the last years appear as a viable approach to wireless channel prediction. Specifically, by implementing neural networks according to the so-called data-driven approach, no underlying model needs to be assumed, as opposed to the model-based approach. This makes the predicting model less sensitive to disturbances and interference since it can learn from realistic data. In the case of predicting future channels based on solely the previous channel estimations, the channel prediction problem becomes a time series learning problem. Indeed, in the past years, channel prediction has been studied extensively and numerous techniques have been considered with the use of neural networks. For instance, channel prediction can also be conducted using the location of the UE, which is appropriate for a static scenario.

However, in a real-world crowded urban environment, the spatial correlation of the channel can be very small or completely absent, due to moving objects. Also, by depending on the location of the UE, an algorithm may become computationally more complex. By relying solely on historical time series data and the temporal correlation of the channel, channel prediction algorithms become computationally more efficient and scalable among different environments. For this reason, the present article aims to overview the most prominent neural networks methods and to identify research gaps in channel prediction that strictly uses historical channel measurements as input data. The most promising neural networks for channel prediction, which has performed well in previous studies, are compared using a dataset, with and without noise, simulated by the common and realistic 3 Generation Partnership Project (3GPP) tapped delay line (TDL)-A model [5]. The performance of predicting fast-fading channels is studied over a large span of prediction horizons.

The advantages and disadvantages of each method are discussed to ultimately identify the most promising neural network for wireless channel prediction. This paper is, to the authors best knowledge, the first comparison of channel prediction methods that represent multiple different classes of neural networks. We provide a deeper understanding of the state-of-the-art in channel prediction to direct future research toward optimal models for real-world implementations.

To summarize, the contributions of this paper are the following:

-

•

An overview of previous works, focusing on channel prediction that employs data-driven machine learning (ML) methods.

-

•

An original quantitative comparison of the most promising data-driven methods identified from previous works. The data-driven methods are also compared to Kalman filtering.

-

•

A discussion on how to develop the state-of-the-art in channel prediction, based on numerical evaluation arguments.

The rest of the sections in this paper are organized as follows: the representative state-of-the-art in channel prediction using ML is overviewed; the prediction schemes that will be compared are described and justified; the proposed prediction schemes are numerically evaluated and compared by their performance; the outcome of the experiments are discussed; and finally, the results from the study we proposed in this paper are concluded.

Overview of previous works

Model-based methods have been widely used to perform channel prediction. However, recent advances in ML have accelerated several research areas, and recent studies have suggested that ML has the potential to outperform conventional channel prediction model-based methods. The ML model is a function that maps input data to an output decision or prediction, defined by its trainable parameters and its architecture. Training a supervised ML model means tuning the parameters to output a satisfactory output, usually by solving an optimization problem that minimizes a loss function. In our case, the ML model uses historical time series of channel measurements as input and outputs the future channel.

The performance of the model-based Kalman filter has been compared to a multilayer perceptron (MLP) in [4]. The MLP is a basic neural network that consists of several layers of nodes, where each node in one layer connects by a trainable parameter to every node in the following layer. To replicate the training process as with real channel data, [4] used noisy simulated data to train the MLP. All other papers surveyed in our work that use simulated data assume perfect knowledge of the channel when training the neural network. The comparison of the Kalman filter and the MLP method, with a small advantage to the Kalman filter, suggests a need for more advanced neural networks.

Several works have implemented more advanced structures for enhanced prediction accuracy. A popular model in image recognition is the convolutional neural network (CNN), which in contrast to MLP can take a matrix as input instead of a vector. It can learn to recognize patterns in smaller sections from an input matrix. By constructing a matrix of the size given by the time steps and the number of antennas, a CNN is proposed in [6] to predict AR coefficients for channel evolution. Channel prediction has also been performed using a recurrent neural network (RNN) that utilizes the temporal correlation in sequential data, in contrast to the CNN. A subset of frequency subcarriers was predicted individually by an RNN in [7], followed by performing interpolation to predict the entire frequency domain used by the antenna. Other works have combined CNNs and RNNs to predict the channel. Both [8, 9] have combined a CNN with a long short-term memory (LSTM) model, which is a type of RNN. A well-known issue with RNN is that it has training convergence issues due to vanishing or diverging gradients. LSTM alleviates these problems. A comparison between LSTM to conventional model-based methods has been proposed in [10], where the effect of moving at different velocities were studied for channel prediction. In a recent paper [11], the authors modeled the channel between a UE and base station (BS) via a reconfigurable intelligent surface as a fast-fading channel using the LSTM, assuming stationarity between the BS and the reconfigurable intelligent surface.

| Model | Performance | Prediction horizon | Noisy label | Mobility | Data generation | Prediction procedure | Paper |

| LSTM | Superior to ARIMA and SVR | 0.1-1ms | N | Medium & High | S | Time series | [10] |

| Transformers | Superior to LSTM | 0.625- 3.125ms | N | Medium | S | Time series | [12] |

| LSTM & GRU | Superior to RNN | 1-5ms | N | High | S | Time series | [13] |

| RNN | Inferior to KF | 1.28ms | N | Medium & High | S | Time series | [7] |

| MLP | Inferior to KF | 40ms | Y | Low | S | Time series | [4] |

| LSTM & GRU | Superior to ARIMA | 1-10s | N | Low & High | S | Encoder- decoder | [14] |

| CNN/RNN | Superior to KF | - | N | Low & Medium | S | Time series | [6] |

| LSTM/CNN | Superior to MLP | - | Y | None | M | Time series | [8] |

| LSTM/CNN | Inferior to LSTM and CNN | - | N | Medium | S | UL-DL & subcarriers | [9] |

| LSTM | Superior to Minimum Variance Unbiased | - | N | Low | S | Time series | [11] |

| C-GRBFnet | Superior to LSTM | - | N | None | S | Spatial prediction | [15] |

Aside from LSTM, gated recurrent unit (GRU) has also been proposed to improve the sequential RNN, and is more computationally efficient than LSTM. In channel prediction, the GRU has been tested empirically by several researchers. The authors in [14] compared the LSTM and GRU with a proposed prediction model exploiting an encoder-decoder scheme, with LSTM or GRU layers at both the encoder and decoder side. Several datasets, one including 4G measurements, revealed a slight advantage to the LSTM. In [13], an overview of channel prediction has been made where the LSTM and GRU have been studied over several prediction horizons. The first evaluation of a deep GRU has been conducted, in favor of the GRU.

One additional type of neural network has been recently proposed in [12], which adopts the transformer model to predict the channel. The transformer has the ability to predict multiple future time steps in parallel, by learning to identify and pay attention to critical behavior in sequential data. Another transformer-based model has been proposed in [15] to predict the channel impulse response, based on the location of the UE. It does not use historical channel measurements as input like the previously discussed papers but shows good results compared to the LSTM.

In Table I, contributions from all papers are categorized in columns and summarized, with the topics of interest in this paper. As can be seen in the column Prediction procedure, the channel is predicted using time series for all papers in Table I, except for [15]. Although there are some papers using slightly different prediction procedures, time series are the foundation to make the prediction procedure more efficient.

One conclusion from Table I is that the majority of the papers evaluate the channel prediction models based on simulated data. This is understandable since it is less costly and less time-consuming to collect simulated data. However, in a real-world implementation of channel prediction, measured data have to be used to conduct the predictions. The channel measurement and estimation process is unavoidably affected by noise. For this reason, noise was introduced in the training process in [4], including the true data that is used to update the model. The column Noisy label indicates whether the paper considers a noisy label for training the model. If the data is generated by measurements, the label is automatically noisy. There is only one paper that considers noisy labels while using a simulator to generate the dataset to train the prediction model. In our paper, we investigate its role and we show that it can have a major effect when evaluating the performance of the prediction methods.

The prediction horizons considered in Table I are almost exclusively correlated with mobility. If a paper considers high mobility of the UE, the horizon is short, and vice versa, due to difficulties of predicting the channel over long horizons with a fluctuating channel. If the prediction horizon exceeds the coherence time, the channel’s temporal correlation vanishes, and it becomes impossible to infer the channel out of current or past measurements. The prediction horizons of the papers listed in Table I are generally short. Half of the papers do not state on what time horizon the channel is predicted. Furthermore, no paper has included a prediction horizon long enough for the performance to fail.

From the summary of previous works in Table I, research gaps can be found. First, it is not obvious which neural network is the most suitable for channel prediction. Second, although different data-driven models may each have good results, they have not been compared to each other. In most of the existing literature, data-driven models are compared to conventional model-based methods. The overviewed papers generally do not perform comparisons among data-driven models, or at best do partial comparisons. For example, [13] compares LSTM to a deep GRU. The present paper is arguably the first to make a comprehensive comparison among the most prominent data-driven approaches.

Channel prediction using neural networks

To identify the most promising neural network algorithm for the purpose of channel prediction, the most prominent algorithms found in the previous section are further analyzed and compared. Throughout the rest of this paper, five different regular types of neural networks are compared.

A common type of neural network is the feedforward neural network. It consists of a set of layers, each layer with multiple neurons, and basically constitutes the conceptual framework of all neural networks. All layers between the input- and output layers are called hidden layers. A feedforward neural network that has one or more hidden layers of neurons is called a MLP. The MLP has shown convincing results in many areas and is a very general framework compatible with many applications, since the input to a MLP must be a one-dimensional vector. A vector that can be constructed from any type of data. However, the ordering of the elements in the input vector is disregarded. The result is that the potential importance of the position of the elements in the input vector is neglected.

One type of neural network that is built to preserve spatial data is the CNN. The idea is to identify patterns in the input matrix, making the position of each element in the input matrix relevant compared to the MLP. CNNs have acquired state-of-the-art status for their ability to detect patterns within image recognition. By using previous channel samples from multiple antennas, one can construct a matrix as a type of image as input to the CNN. The advantages of the CNN could be utilized to find patterns across time and among antennas.

A class of neural networks that is suitable for time series is the RNN since it stores information from prior inputs in its internal state to influence the current output. That makes the RNN able to benefit from sequential data better than the MLP and CNN. However, the classical RNN has major problems with vanishing or exploding gradients. To solve these problems, modified networks have been suggested. One type of RNN, that has reached state-of-the-art results in fields like speech recognition and language processing, is LSTM. Every LSTM cell is built by three gates, the input, output, and forget gate. As its name reveals, it has one long and one short-term memory. Since LSTM was proposed, new algorithms with small modifications have been created. One of those, with promising results, is GRU. The GRU has two gates and one memory, making it computationally faster.

Another model that takes advantage of sequential data is the transfer model, a neural network architecture that is among the recently proposed prediction model [12]. It is most commonly used in natural language processing tasks but can also be applicable in time-series regression. It employs a self-attention mechanism to capture relationships among historical dynamics in a sequence. Multiple attention heads are used to capture different dependencies and relationships in parallel. The transformer incorporates positional encoding to convey the timeliness of each number. With its ability to capture long-range dependencies and parallel processing, the transformer has significant performance in time series prediction.

To evaluate and compare the performance of the promising neural networks for channel prediction, we consider a downlink MIMO scenario with antennas at the BS and antennas at the UE. We model the received signal as , where is the transmitted signal, is the received signal, is the additive noise and represents the channel. For various adaptive wireless technologies, outdated CSI can cause heavy performance degradation. To obtain up-to-date CSI, channel prediction is performed. To predict the future channel on a desired prediction horizon , we use historical measurements of the channel, indicated as , where determines the time interval between each sample. Since the channel is complex-valued, real and imaginary values are separated in the input channel matrix .

Experimental evaluation

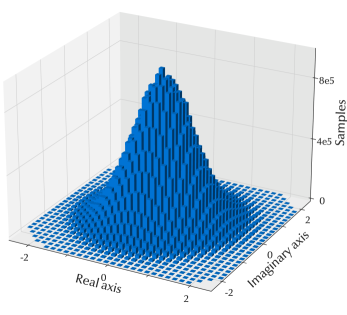

To evaluate the neural networks discussed in the previous section, fast-fading channel data is simulated using the standardized 3GPP TDL-A model [5]. The TDL-A model is based on Rayleigh fading in a non-line-of-sight scenario and is useful when simulating the channel for cellular systems. The BS and the UE are assumed to have 2 antennas each, communicating at 2 GHz. The mobility of the UE is set to 20 km/h, which gives a maximum Doppler shift of approximately 37 Hz. With 52 resource blocks, the number of subcarriers is 624. To be consistent with the overviewed literature, only one subcarrier is considered at a time in the input and output of the prediction. This result is a dataset of around 26 milion data points. The distribution of the original dataset in the complex plane is plotted in Fig. 1, showing the zero mean circularly symmetric complex Gaussian distribution of the channel. The histogram includes the samples from all MIMO channels and all subcarriers. The distribution symmetry and smoothness are results of the massive dataset. From this original dataset, the training dataset was randomly sampled to obtain 90000 samples, and the test dataset 10000 samples.

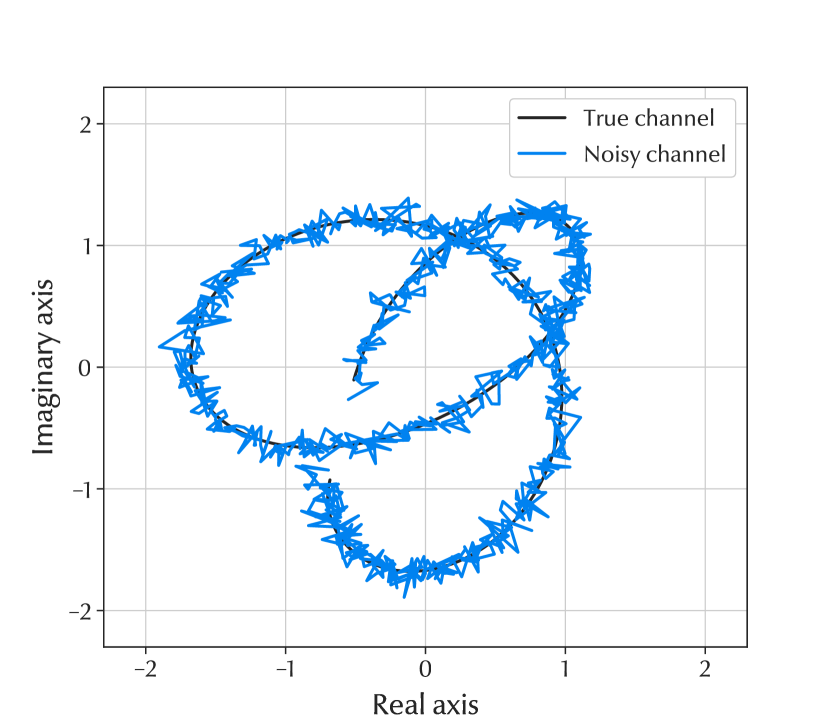

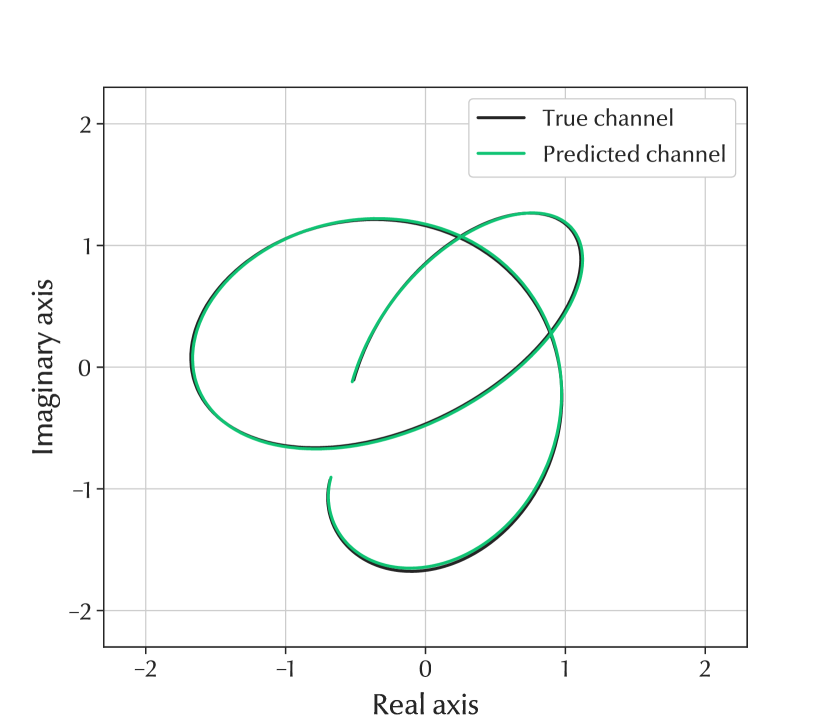

Experiments are conducted for two versions of the dataset, one using the original dataset and the other with the presence of noise. To imitate a realistic scenario and represent the uncertainties from channel estimation, the channel is distorted with Gaussian noise yielding an SNR of 20 dB. The noise is present in the inputs and outputs of the training data. In this way, the model is trained with realistic noisy channel data, which to the authors knowledge, has not been studied earlier when using CNNs, LSTMs or GRUs. In the test data however, only the inputs are distorted to evaluate the predictor correctly. To reconstruct the predicted channel, the output vector from the neural networks is reshaped into a vector of complex-valued channels for each time instance. In Fig. 2(a), a small sample of the noisy-, true-, and predicted channels are plotted.

With the Adam optimizer, the aim is to minimize the MSE over 200 epochs. The number of historical channel measurements used to predict the future channel is with a sampling time of 1 ms throughout the paper. The neural networks are trained to optimize the accuracy for each prediction horizon. The MLP is set to have 6 layers. For the CNN, the number of convolutional layers is 4, each followed by a pooling layer, ending with 2 linear layers with rectified linear unit (ReLU) activation functions just as for the MLP. The RNNs has 3 stacked layers. The hyperparameters of the models are tuned experimentally to obtain the best performance. For further insights of the models, the project can be found at Github111https://github.com/osst3224/Channel_prediction_DNN.git. The number of hidden states is set to throughout this paper. The activation function used is the , as suggested in [13]. Complexity analyses for the different models can be found in [4, 12, 13]. An empirical evaluation of the size of all models used in this paper is summarized in Table II. The neural networks are trained for prediction horizons in the range of ms, with the purpose of investigating longer prediction horizons, which has been marginally done in previous works.

| Model | Trainable parameters | Elapsed time per prediction |

| MLP | 1 990 402 | |

| CNN | 4 338 | |

| LSTM | 455 102 | |

| GRU | 341 402 | |

| Transformer | 463 874 | 97 |

The naive predictor, which assumes that the future channel is equal to the outdated (current) channel is used as a benchmark. To further evaluate the performance of neural networks in channel prediction, Kalman filtering is implemented as well. For longer prediction horizons of several sampling times ahead, the channel prediction of 1 ms ahead is used as historical measurements for the next prediction. This procedure was repeated until the desired prediction horizon is reached, just as in [4]. To fairly compare the performance of the Kalman filter and the neural networks, the MSE of the Kalman filter was calculated after it had reached convergence.

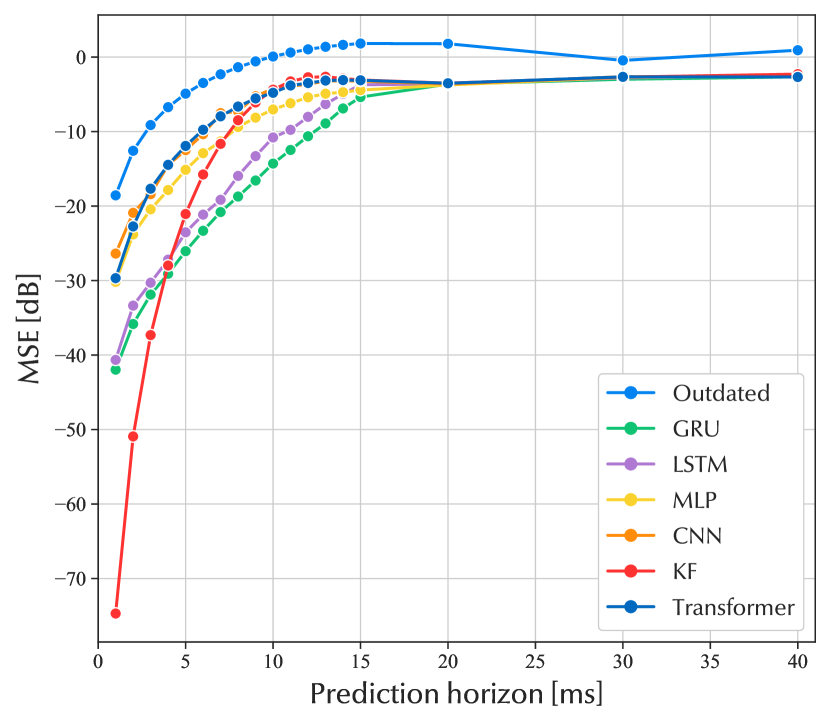

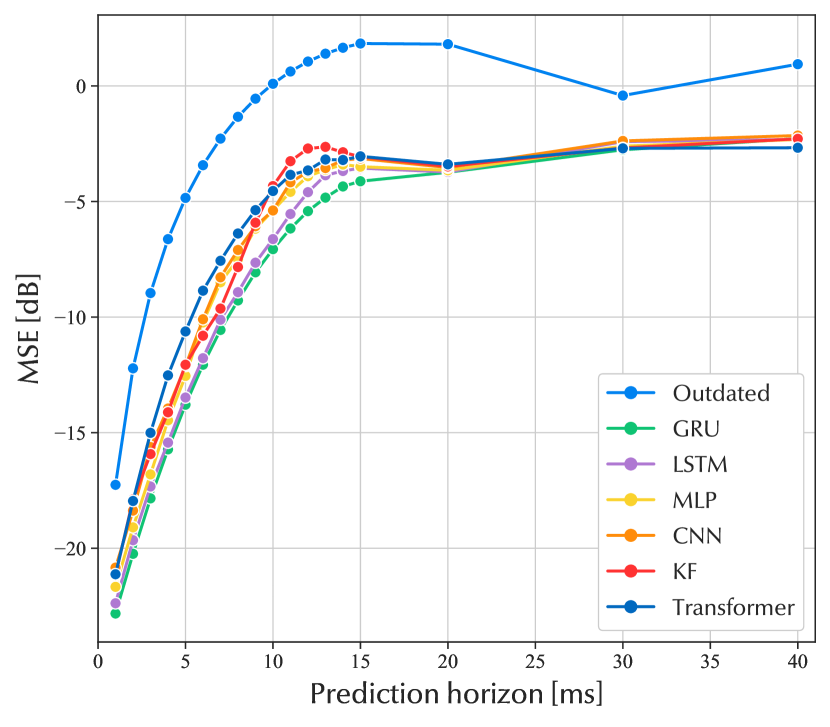

The MSE for the test dataset is plotted in decibels as a function of the prediction horizon in Fig. 3(a). The performance of the RNNs are similar, with a small advantage to the GRU. The reason they are performing very similarly is that their architecture is closely related. With a little higher MSE, the MLP, CNN, and transformer also have quite similar performance. The MSE grows at a steady pace as the prediction horizon increases. As the prediction horizon grows, the channel’s temporal correlation weakens, which naturally makes it more difficult to predict the channel. When the prediction horizon is around 15 ms, the performance of the neural networks reaches a level of error that stays relatively constant with a higher prediction horizon. This indicates that the coherence time of the channel is approximately 15 ms. The rate at which the channel ages is determined by the UE mobility in this channel model. With a lower UE mobility in the simulation, the performance of the neural networks would be better for longer prediction horizons.

The behavior is repeated for the performance of the neural networks trained with the noisy dataset, visualized in Fig. 3(b). The performance of the neural networks is kept somewhat constant for prediction horizons longer than 15 ms. With shorter horizons, the performance of all neural networks is in the same relative order to each other in the noisy and noise-free case. The MSE of the test data is substantially higher when noise is introduced in the training and test dataset. However, when noise is introduced and the prediction horizon is short, there is no significant difference in performance between the RNNs, MLP, CNN, and transformer.

The Kalman filter performs very well in a noise-free environment on short prediction horizons. When the prediction horizon considered is over 5 ms, LSTM and GRU perform better than the Kalman filter. In a noisy environment, the Kalman filter behaves as the neural networks. But from Fig. 3, it is apparent that the RNNs outperforms the Kalman filter over long prediction horizons.

Discussion

From Fig. 3(a), it is evident that the recurring memory cell gives a strong advantage in wireless channel time series prediction and constitutes a robust performance for the GRU respectively LSTM compared to the MLP, CNN, and transformer model, regardless of the prediction horizon. From these results, it is concluded that GRU is the state-of-the-art in channel prediction. The intuitive explanation for this is the GRU’s innate ability to find correlations in sequential data. The GRU is custom-made to predict sequentially temporal data. It has fewer parameters than the similar LSTM network, which makes the GRU inclined to learn better and faster. However, in form of practical feasibility in real base stations, due to constraints in computational power and energy consumption, it might be better to consider the MLP out of the neural networks due to its low complexity, especially during training. The computational time and power spent to run the prediction model is crucial for real-world implementations. Future research could further extend the identified methods for real-world implementations such as quantization, continual learning and one-shot learning.

For the scenario with the distorted channel, the difference in error between the GRU and the MLP is constant around 1dB. The MLPs lower computational complexity might make it more suitable in a real-world implementation where computational power is limited due to time and energy constraints. As shown in [4], the MLP has lower computational complexity than the Kalman filter. Another fact that makes neural networks more suitable in a real-world implementation is that the channel might not always follow a smooth pattern as in Fig. 2. Since the MLP is data-driven, it can learn easier than the Kalman filter in such a case.

This paper has considered the scenario of non-line-of-sight communication, for the standardized 3GPP TDL-A channel model. The simulated dataset consists of 26 million data points and is statistically sufficient to cover the scenario in TDL-A. Therefore, the results presented in this paper are general due to the generality of the TDL-A model. It would be beneficial if future research could investigate neural networks’ robustness in wireless channel prediction, in the presence of abrupt changes in the communication environment due to the appearance or disappearance of dominant paths. In the case of appearing or disappearing dominant paths, the neural networks trained in this paper would only need five consecutive samples after the abrupt change, to reinitialize satisfactory predictions. The reason is that the input to the neural networks is of five samples. Moreover, line-of-sight communication is more static and fades slower than non-line-of-sight communication. Hence, neural network models trained in this paper will perform well in scenarios containing slower variations than those exhibited by the training dataset as well. The Kalman filter, on the other hand, requires more than five samples to converge to acceptable results, as seen in [4].

Conclusions

This paper overviewed the most prominent research results from the literature on machine learning for channel prediction. The main advantage of machine learning is that it does not assume any underlying model, which makes it flexible and able to learn a model from the data itself. From simulations of the non-line-of-sight scenario of the 3GPP standardized TDL-A model, the neural networks were trained and validated. In the scenario of a noise-free channel, the numerical experiments of this paper established that two RNNs, namely GRU and LSTM, achieved considerably better results for prediction horizons up to 15 ms than the MLP, CNN, and transformer model. However, the Kalman filter performs better than all neural networks up to the prediction horizon of 4 ms. In the case of channel measurements with noisy data, the difference in performance between the neural networks was not as significant. However, from ordering the neural networks by their performance, the order was the same in the case with and without noise. The Kalman filter performed similarly as the MLP, CNN, and transformer model in the noisy case.

Ultimately, this overview suggests that the GRU is most suitable to perform channel prediction and has the potential to be considered the most promising for future real-world implementations. For future work, we plan to perform channel prediction using more than one carrier. Also, data-efficient machine learning models will have to be considered in future research if the predictions have to be made in resource-constrained wireless devices.

Acknowledgment

This work was partially supported by the Wallenberg AI, Autonomous Systems and Software Program (WASP), funded by the Knut and Alice Wallenberg Foundation. The work of C. Fischione was also funded by Digital Futures KTH research center and SSF.

References

- [1] K. T. Truong and R. W. Heath, “Effects of Channel Aging in Massive MIMO Systems,” IEEE JCN, vol. 15, no. 4, pp. 338–351, 2013.

- [2] J. Björsell, Predictor Antennas: Enabling channel prediction for fast-moving vehicles in wireless broadband systems. PhD thesis, Uppsala University, Signals and Systems, 2022.

- [3] D. Aronsson and M. Sternad, “Kalman Predictor Design for Frequency-Adaptive Scheduling of FDD OFDMA Uplinks,” in IEEE PIMRC, pp. 1–5, 2007.

- [4] H. Kim et al., “Massive MIMO Channel Prediction: Kalman filtering Vs. Machine Learning,” IEEE Trans. Commun., vol. 69, no. 1, pp. 518–528, 2021.

- [5] “Study on channel model for frequencies from 0.5 to 100 GHz (3GPP TR 38.901 version 16.1.0 Release 16),”

- [6] J. Yuan, H. Q. Ngo, and M. Matthaiou, “Machine Learning-Based Channel Prediction in Massive MIMO With Channel Aging,” IEEE Trans. Wirel. Commun., vol. 19, no. 5, pp. 2960–2973, 2020.

- [7] W. Jiang and H. D. Schotten, “Recurrent Neural Network-Based Frequency-Domain Channel Prediction for Wideband Communications,” in IEEE VTC, pp. 1–6, 2019.

- [8] C. Luo et al., “Channel State Information Prediction for 5G Wireless Communications: A Deep Learning Approach,” IEEE Trans. Netw. Sci. Eng., vol. 7, no. 1, pp. 227–236, 2018.

- [9] J. Wang et al., “UL-CSI Data Driven Deep Learning for Predicting DL-CSI in Cellular FDD Systems,” IEEE Access, vol. 7, pp. 96105–96112, 2019.

- [10] G. Liu et al., “Deep Learning-Based Channel Prediction for Edge Computing Networks Toward Intelligent Connected Vehicles,” IEEE Access, vol. 7, pp. 114487–114495, 2019.

- [11] W. Xu et al., “Time-Varying Channel Prediction for RIS-Assisted MU-MISO Networks via Deep Learning,” IEEE TCCN, vol. 8, no. 4, pp. 1802–1815, 2022.

- [12] H. Jiang et al., “Accurate Channel Prediction Based on Transformer: Making Mobility Negligible,” IEEE JSAC, vol. 40, no. 9, pp. 2717–2732, 2022.

- [13] W. Jiang and H. D. Schotten, “Deep Learning for Fading Channel Prediction,” IEEE OJ-COMS, vol. 1, pp. 320–332, 2020.

- [14] A. Kulkarni et al., “DeepChannel: Wireless Channel Quality Prediction Using Deep Learning,” IEEE Trans. Veh. Technol., vol. 69, no. 1, pp. 443–456, 2019.

- [15] Z. Xiao et al., “C-GRBFnet: A Physics-Inspired Generative Deep Neural Network for Channel Representation and Prediction,” IEEE JSAC, vol. 40, no. 8, pp. 2282–2299, 2022.

Biographies

Oscar Stenhammar [M] (oscar.stenhammar@ericsson .com) is currently pursuing his Ph.D. at KTH Royal Institute of Technology as an industrial doctoral student, employed by Ericsson AB and affiliated with the Wallenberg AI, Autonomous Systems and Software Program (WASP). He received an M.S. in Engineering Physics at Uppsala University in 2021.

Gabor Fodor [SM] ([email protected]) received a Ph.D. in electrical engineering from the Budapest University of Technology and Economics in 1998 and received a D.Sc. from the Hungarian Academy of Sciences in 2019. He is currently a Master Researcher with Ericsson Research and an Adjunct Professor with KTH Royal Institute of Technology, Stockholm, Sweden. He is currently serving as an Editor for IEEE Transactions on Wireless Communications and IEEE Wireless Communications.

Dr. Carlo Fischione [SM] ([email protected]) is full Professor at KTH Royal Institute of Technology, Network and Systems Engineering, Stockholm, Sweden. He received a Ph.D. in Electrical and Information Engineering in 2005 from University of L’Aquila, Italy, and has held research positions at Massachusetts Institute of Technology, Cambridge, MA (2015); Harvard University, Cambridge, MA (2015); and University of California at Berkeley, CA (2004-2005 and 2007-2008). His research interests include applied optimization, wireless Internet of Things, and machine learning. He received the “2018 IEEE Communication Society S. O. Rice” award and the 2007 best paper award of IEEE Transactions on Industrial Informatics.