]Max Planck Institute for the Physics of Complex Systems, Nöthnitzer Str. 38, 01187 Dresden.

Max Planck Institute for the Mathematics in the Sciences, Inselstr. 22, 04103 Leipzig.

A combinatorial view of stochastic processes: White noise

Abstract

White noise is a fundamental and fairly well understood stochastic process that conforms the conceptual basis for many other processes, as well as for the modeling of time series. Here we push a fresh perspective toward white noise that, grounded on combinatorial considerations, contributes to give new interesting insights both for modelling and theoretical purposes. To this aim, we incorporate the ordinal pattern analysis approach which allows us to abstract a time series as a sequence of patterns and their associated permutations, and introduce a simple functional over permutations that partitions them into classes encoding their level of asymmetry. We compute the exact probability mass function (p.m.f.) of this functional over the symmetric group of degree , thus providing the description for the case of an infinite white noise realization. This p.m.f. can be conveniently approximated by a continuous probability density from an exponential family, the Gaussian, hence providing natural sufficient statistics that render a convenient and simple statistical analysis through ordinal patterns. Such analysis is exemplified on experimental data for the spatial increments from tracks of gold nanoparticles in 3D diffusion.

I Introduction

The incorporation of stochastic ingredients in models describing phenomena in all disciplines is now a standard in scientific practice. White noise is one of the most important of such stochastic ingredients. Although tools for identifying white and other types of noise exist [1, 2], there is a permanent demand for reliable and robust statistical methods for analyzing data in order to distinguish noise and filter it from signals in experiments. Or in hypothesis tests, for assessing the plausibility of the outcome of an experiment being the result of randomness and not a significant, controllable effect. Due to its ubiquity in experiments and its mathematical simplicity, white noise is very often the most convenient stochastic component that adds realism to a dynamic model, commonly regarded as the noise polluting observations. It can be continuous or discrete both in time or in distribution, so it can be applied to many scenarios. It is a stationary and independent and identically distributed process, all relatively simple properties for a stochastic process. Here we present a combinatorial perspective to study white noise inspired in the concept of ordinal patterns. An ordinal pattern of length is the diagramatic representation of the inequality fulfilled by a subsequence of points in a time series . We discuss ordinal patterns in detail in Sec. II. This concept was used in 2002 by Bandt and Pompe [3] for building a measure of complexity for time series named Permutation Entropy (PE). PE has proven its value not only in applications, when used to analyze time series from a great variety of phenomena [4, 5], but it is also of theoretical relevance since it is equivalent to the Kolmogorov-Sinai entropy for a large class of piece-wise continuous maps of the interval [6, 7]. The procedure for computing PE consists in first choosing the size for the window that will be used in the analysis, corresponding to the embedding dimension in a Takens embedding [2]. Then we slide the window through the series (equivalent to construct the lagged or delay vectors [2]), to find the frequencies with which ordinal patterns occur. Then we compute the associated Shannon entropy . For white noise in the long time series limit all the patterns are equally likely, therefore their frequencies follow a discrete uniform probability mass function (p.m.f.) with support on the integers , which is equivalent to the probability measure of permutations over the symmetric group of degree , . Despite its relevance and wide range of applications, there are few rigorous studies on the properties of PE for its use in statistical inference. To the best of the author’s knowledge, this is addressed only in works such as [8], where the authors investigate the expectation value and variance of PE for finite time series of white noise, and later the same authors address the effect of ordinal pattern selection on the variance of PE [9]. In the customary PE approach every permutation is, in a sense, considered as a class, since the count of every single permutation is important. The effect of this is that the empirical distributions obtained from finite-length observations will be very sensitive to relatively minor changes in the proportions of each observed pattern [8]. This lack of robustness represents a liability when trying to distinguish noise from structure. Another problem is the factorial growth of the number of classes, enlarging the discrete support correspondingly and making the analysis both impractical and meaningless for values of the embedding dimension beyond low or moderate (), since the required length of a time series that will have a chance to display roughly one representative member of each class would be on the same order of (already around 3 million observations for ).

In this work, we address these limitations by introducing a new statistic for permutations in Sec. III. This statistic is a functional over the symmetric group , that can be interpreted as a measure of asymmetry for ordinal patterns. The functional divides the symmetric group into classes corresponding to a coarse notion of levels of overall increasing or decreasing behaviour of a pattern. In turn, this results on the transformation of the original discrete uniform probability measure of the patterns over , into a new probability measure that concentrates around its expected value, as we show in Sec IV. This has practical and conceptual consequences, such as the ability of performing a suitably modified version of the ordinal pattern analysis for very large embedding dimensions, since now the number of classes of patterns to be tracked is reduced from to merely . The probability mass functions corresponding to our functional can be approximated by a Gaussian distribution, that is itself an exponential family. This guarantees the existence of natural sufficient statistics for the estimation of our statistic, as we explain in Sec IV. We open Sec. V with an illustration of our framework by analysing white noise from different source distributions and discussing its potential for distinguishing the deterministic signature of a chaotic orbit from a discrete map by inspection of the empirical p.m.f. obtained from our modified pattern analysis. Then we take experimental 3D tracks of gold nanoparticles and design a test for identifying the statistical independence among the spatial increments on each coordinate in a plane of observation. We finish by discussing our results and making additional remarks on the advantages and drawbacks of the presented framework.

II Ordinal Patterns

and their symmetries

A permutation is a bijection . If we take the set to be the sequence of integers , then maps this sequence into itself . Due to its bijective nature, we call the arrangement also a permutation of length . Alternatively we can call it a word produced by on symbols. The set together with the operation of functional composition is the symmetric group on symbols, of cardinality [10]. We will denote the set of symbols as and an interval of symbols is denoted by .

From a simplified perspective, the elements can be regarded all equivalent to each other a priori. Therefore, the corresponding discrete measure over would be , assigning each permutation a weight of . Under this measure, permutations can be enumerated by lexicographic order, which ranks the words according to their size as integers. The lexicographic order in is shown in Table 1 following the index along with other statistics for permutations, such as the inversion number, the major index and the runs (number of ascending sequences) [10],[11]. In the last column, we include the corresponding values of the functional introduced in Sec. III.

| sgn | inv | maj | runs | |||

|---|---|---|---|---|---|---|

| 1 | 123 | +1 | 0 | 0 | 1 | 2 |

| 2 | 132 | -1 | 1 | 2 | 2 | 1 |

| 3 | 213 | -1 | 1 | 1 | 2 | 1 |

| 4 | 231 | +1 | 2 | 2 | 2 | -1 |

| 5 | 312 | +1 | 2 | 1 | 2 | -1 |

| 6 | 321 | -1 | 3 | 3 | 3 | -2 |

Consider the lattice formed by the cartesian product . An ordinal pattern [3], that we will denote here as or simply is a directed graph in that connects ordered pairs that are consecutive in their first index , , hence . The angle brackets explicitly indicate that the elements of the -tuple are connected consecutively conforming a graph.

For instance, the graph , or in the lattice is an ordinal pattern, since , then corresponds to the identity permutation in , . The graph is not a valid diagram since the word is not a permutation, i.e. . The set of all diagrams is denoted by . Tables 2 and 3 display the permutations and their corresponding graph sets , , respectively.

| pattern | pattern | pattern | ||||||

|---|---|---|---|---|---|---|---|---|

| 123 | 2 | 213 | 1 | 312 | -1 | |||

| 132 | 1 | 231 | -1 | 321 | -2 |

| pattern | pattern | pattern | pattern | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| 1234 | 4 | 2134 | 4 | 3124 | 2 | 4123 | 0 | ||||

| 1243 | 4 | 2143 | 4 | 3142 | 2 | 4132 | 0 | ||||

| 1324 | 2 | 2314 | 0 | 3214 | 0 | 4213 | -2 | ||||

| 1342 | 2 | 2341 | 0 | 3241 | 0 | 4231 | -2 | ||||

| 1423 | 0 | 2413 | -2 | 3412 | -4 | 4312 | -4 | ||||

| 1432 | 0 | 2431 | -2 | 3421 | -4 | 4321 | -4 |

II.1 Reflections of diagrams

It is instructive to explore the symmetries of the ordinal patterns, since they are reflected in the statistical properties of the permutations as we explain in Sec. III for inspecting the properties of the distribution of the statistic introduced there.

Vertical reflections

A vertical reflection consists on flipping a diagram along a vertical axis and relabeling the nodes (ordered pairs) accordingly. It is a bijection that acts on ordered pairs as

| (1) |

i.e. it leaves the second coordinate invariant. The action of over is explicit

| (2) |

meaning that simply mirrors the symbols along a vertical axis of reflection to the right of the permutation, as illustrated in Fig. 1. For even permutation length , the effect of as a reflection with respect to an external vertical axis, is the same as an internal reflection of the symbols of the word along an internal axis splitting the permutation in equal parts of symbols. For odd , the permutation is split into equal parts of but the symbol is left invariant since it becomes the internal axis of reflection itself. As a simple illustration, let us take a diagram of length , as shown schematically in Fig. 1(a). Ranking the points from smallest to largest gives the ranking permutation , corresponding to the permuted indices of the vector of ranks: relative to the original positions . Hence, in the example of Fig. 1(a), , where we put double dots instead of a comma in the middle only to highlight the internal axis of symmetry of the diagram.

Horizontal reflections

A horizontal reflection consists on flipping a diagram along a horizontal axis. Its action over ordered pairs is

| (3) |

meaning that acts directly on the permutation symbols. Its explicit action corresponds to the involution

| (4) |

III A functional over permutations

All of the permutation statistics displayed in Table I reflect different symmetries in . The sign of the permutation is arguably the most basic statistic, telling explicitly whether the number of flips of symbols in a permutation relative to the identity is even () or odd (). The inversion number explicitly counts the pairs for which the symbol in position is larger than the one at . Summing up the indices of the larger symbols in each inversion yields the major index .

Below we introduce a new statistic of permutations that accounts for an imbalance in weight when considering the symbols conforming every permutation as a collection of weights. The objective of defining and characterizing this new statistic is twofold: On one hand, we have the intrinsic interest on new insights on the study of the symmetric group and the implications of these discoveries on other areas such as dynamical systems and stochastic processes. On the other hand, there is also a practical interest on the analysis of time series, specifically in connection with ordinal pattern analysis.

The construction of the set of ordinal patterns from a realization of any process , consists on mapping portions of that process time series (with an index set, usually ), to the diagrams in Sec.II by sliding a window of size over . We can inspect larger portions of the series without increasing by skipping (lagging) a number of points after every choice on each window, so the number is correspondingly known as lag. For instance, a lag of means that we do not skip any point and we slide the window without gaps. The collection of windows of points are called delay or lagged vectors, and the process of construction of these vectors is known as an embedding [2]. Correspondingly, the natural number indicating the window size is called embedding dimension. This terminology comes from the embedding theorems that form the basis of the state space reconstruction methods from scalar time series, known in general as time delay embeddings [2]. Hence, for the ordinal pattern analysis, we first make the embedding of the time series for a chosen dimension and lag . This yields a total of lagged vectors. Then we rank the amplitudes on every lagged vector according to their magnitude, thus obtaining the ranked vectors . The sequence of indices in these ranked vectors are the ranking permutations , where . Finally, these permutations have associated ordinal patterns as we saw in Sec. II. In this way the local information on the relative ordering is preserved, and as a consequence also the relevant information on the correlation structure of the series. However, a simple but careful inspection of this mapping makes evident that the relative ordering is not the only information preserved. In fact, if we interpret the ranks as abstract weights assigned to the amplitudes of the process, then it is clear that also information on the overall variation within portions of these windows of data points is preserved. Questions such as the weight accumulated in sub-intervals within each bin of size arise naturally from this observation. An equally natural choice of sub-interval for analysis within the -window is half the bin, so as to build a measure to estimate the tendency of the process to have local increasing, decreasing or close to constant behavior. This is in close analogy to the concept of derivative, but getting rid of the information on the specific amplitudes that the process takes. In order to study this asymmetry on the total weight concentrated on each half of a length window, we provide the following

Definition 1 Functional . For every permutation , a non-negative integer, define the functional

(5) where .

The functional thus defined is invariant under a shift of the sequence by an integer. This transformation is also invariant under monotonic transformations of the process , since the relative ordering is not affected. The case for odd gives mixed statistical behavior, since the middle permutation symbol is ignored in the computation of , implying that for a given permutation lenght , when the ignored symbol happens to be , there will be permutations that display the same statistics as in the full problem for permutation lenght . By the invariance of under a shift of by an integer, when the middle symbol is we have the same situation as before. Other ignored symbols produce different and more complicated effects. Therefore, we shall limit here to the case for even , which reduces Eq. (5) to

| (6) |

Hence, splits into equally sized intervals and with partial sums and , respectively. We get by summing up the symbols on each half, and subtracting: . Notice that , and . This means that , as it is the case, for instance, for the identity permutation . By symmetry, using the reflections in Eqs. (2) and (4) the minimum value of is . This means that is a bounded variable for finite .

The definition of allows for an obvious source of degeneracy or multiplicity, since the order of the summands on each half is irrelevant. Let us denote the number of permutations that share the same value of by . For every distinct value of , there are (by shuffling the terms on each sum ) at least reorderings that share the same value, hence . For instance, if we consider we get as above, which has the least degeneracy . The next possible value of can be obtained from by switching , the largest symbol in , with the smallest symbol in , which is . This clearly increases the value of the left partial sum by one while decreasing the right partial sum by one unit , with the effect of decreasing by two units , then the next value is . Proceeding in this way, we get the set of all possible -values over (for even )

| (7) |

whose size is . Notice that if , then and for , a difference that becomes irrelevant as . It is not difficult to see that the degeneracies come in multiples of , meaning that , , . The coefficients for form sequences

| (8) | |||||

that are mirrored for . The sequences in Eq. (8) were found by direct numerical computation, but in the following we obtain them analytically from the observations in the construction of the set and the definition in Eq (6) for .

It is clear that , therefore,

| (9) |

Thus, the central binomial coefficient counts the number of ways of computing in a nontrivial way. This is because also counts the number of different ways to assign a sign to out of symbols and a sign to the rest of symbols, which is precisely the problem of computing without counting the shuffling of the terms in the sums. Yet, Eq. (9) tells us the total number of different ways to compute , not the value of the individual coefficients . To find the nontrivial multiplicities , we notice the equivalence between our problem and the combinatorial problem of sums of partitions of sets. The multiplicities correspond to the number of permutations such that . This is equivalent to the problem of finding the number of sets made of elements out of total elements, such that the sum of the -element set is fixed. This, in turn, fixes the sum of the remaining elements. These sums are clearly discussed before. Since fixing either of the sums fixes the value of , we have thus just rephrased the computation of our functional as a combinatorial problem which has an elegant solution, stated in the form of the following

Theorem (Bóna 2012 [10]) Let and be fixed non-negative integers so that . Let denote the number of -element subsets of whose elements have sum , that is, larger than the minimum. Then we have

(10) In other words, is the ordinary generating function of the -element subsets of according to the sum of their elements.

The object in Eq. (10) belongs to a special kind of polynomials known as Gaussian polynomials or Gaussian binomial coefficients [10], regarded as a generalization of the binomial coefficients and defined as

| (11) |

which are polynomials of the form in Eq. (10) whose degree is and with symmetric coefficients . The symbol it is defined as

| (12) |

also a polynomial, which reduces to in the limit . From the same limit, we also recover from Eq. (11). The polynomial in is called a -analog of , a natural choice for generalizing a representation of nonnegative integers parametrized by . The generalization of the factorial as a -analog is the -factorial

| (13) | |||||

Our main result, the computation of the numbers in Eq. (8), corresponding to the nontrivial contribution to reduces to a corollary of the Theorem in Eq. (10) for the particular choice . In fact, using we recover a generating function for our sequence

| (14) |

with . Therefore

| (15) |

where the notation indicates the coefficient of the -th power of the polynomial . We can compute statistical properties of a sequence from its generating function with relative ease [12]. If we sample a permutation at random and assign the random variable to the associated value of , the probability of observing the value is given by the ratio

| (16) |

hence, is a probability mass function with support in (Eq. 7) and Eq. (9) is the normalization condition . The associated probability generating function (p.g.f.) is

| (17) | |||||

| (18) | |||||

| (19) |

where . From the p.g.f in Eq. (19) we can, at least in principle, compute any desired moment of by differentiation of with respect to . However, the derivatives are more cumbesome than illuminating and we will not present them here.

By applying the functional defined in Eq. (6) to the set of observed permutations obtained from embedding a stochastic process as explained previously, we get an associated stochastic process in terms of . The functional can be applied to any process, but here we limit to its use for the analysis of white noise processes.

A white noise process is a continuous or discrete time stochastic process with the following properties

| (20) | |||

| (21) |

where , is the set in which take values, for instance for a continuous process. From these properties, the zero-mean and finite variance conditions are irrelevant for the application of , since they merely are information about the scale of the process, to which our functional is blind.

The values of over an embedding in a process are well defined, so long as the source process has a continuous support (later we will see that this condition can be sometimes relaxed), thus having a zero probability of observing repeated amplitudes of the process . This means that the ranking permutations are well defined. Of course, real observations have a finite resolution, but even considering this, repetition of amplitudes from a stochastic process with continuous support would be expected to be highly unlikely at least in a finite time. Considering the former facts, we arrive at the following

Definition 2 Induced -process and -series. For every choice of non-negative integers , and (with ) and every white noise process , the functional in Eq. (6) induces the discrete-time process with discrete support (7). Correspondingly, we denote a realization or time series of the process as

(22) where , is the length of an observed realization of , and , is the number of delay vectors in an embedding with fixed values and .

In general, for arbitrary values of and , the process and, consequently, are correlated due to the overlap of the embedding vectors, that is in turn reflected in a sequence of permutations that are not independent. Nevertheless, the process could become effectively uncorrelated for some values of or at large values of both and . In order to visualize how the p.m.f changes as increases, let us plot Eq. (16) for different values. To facilitate comparisons, we make use of the standardized variable . Since , we have the simple quotient

| (23) |

we introduce the notation for referring to the standardized random variable. We will denote its realizations by and the corresponding p.m.f. will be or simply , with moments , , and associated -process (see (22)). We plot for in Fig. 2.

Let us come back briefly to the reflections of diagrams introduced in Sec. II to add understanding to . As illustrated in Figs. 1 and 1, reflecting the diagrams change the corresponding values of up to sign only. This means that for every the compositions of and fulfill

| (24) |

and we will denote them simply by and in the following. Since in general , this is the source of the degeneracy that is not accounted for by simple permutation of the terms of the partial sums . An illustration of Eq. (24) is seen in Fig. 1. We get diagrams that are different in a nontrivial permutational way but with the same value of via the composition , as illustrated there by going from to

The symmetry of the coefficients is thus equivalent to the reflection symmetry in Eq. (24).

IV Continuous approximations and Sufficient statistics

By direct computation of the variance for succesive values we arrive at the formula

| (25) |

which for becomes

| (26) |

As previously noticed, the probability mass function converges to a Gaussian as increases. However, as can be noticed from Fig. 2 for low values of the shape of the distribution is different from a Gaussian, specially around its center. We discuss this case in Appendix A.

The one-parameter exponential or Darmois-Koopman-Pitman families such as the Normal distribution arise naturally from the optimization of the Shannon entropy of under normalization, first and second moment constraints [13]

optimization yields . Using the normalization condition, together with we get

with . We find by making a direct identification with the Gaussian p.d.f.

| (29) |

with in formula (26), and in order to get the correct normalization factor. This yields and thus, an explicit continuous approximation of our p.m.f for large as

| (30) |

where , . A convenient form for finding the natural sufficient statistics for our distribution is given by dropping the index , i.e. so now is seen as a continuous variable. Allowing the mean back into the expression, we get

| (31) |

thus is a probability density function that approximates for large and allows for the possibility of a change in location and scale. More importantly, Eq. (31) indicates directly that the natural sufficient statistics for estimating the mean and variance from a sample are given by the sample mean and variance

| (32) | |||||

| (33) |

where is the set of permutations corresponding to the ordinal patterns observed in a time series of length and is the number of patterns observed in that realization. The sample mean is of special interest for statistical analysis, as we will show in the next section.

V Time series analysis

As an illustration, let us apply our framework as explained at the beginning of Sec. III to white noise time series of length generated from different distributions: Standard Normal (unbounded support), Cauchy (unbounded support, heavy tails), Exponential (asymmetric distribution, unbounded support), Poisson (discrete unbounded support), Continuous Uniform (bounded support), and the special case of deterministic chaotic trajectories generated from the logistic map

| (34) |

at control parameter value . At this value of , the logistic map is ergodic and its invariant density has a closed form , which is equal to . Furthermore, its orbits display exponential decay of correlations [14] and thus are effectively random in the long run. Therefore we can regard long time series obtained from 34 at as white noise generated from a Beta distribution as a source, i.e., . As discussed in Sec. III, the validity of the theory developed for our functional requires the process to have a source distribution with continuous support, since a total order is needed for obtaining well-defined ranking permutations, but this is not the case for the Poisson distribution. Nevertheless, here we relax this condition to see that, in a practical situation where the discrete support of is large enough to make the probability of repeated neighbouring points in time very low, then we can expect our method to apply to a good approximation.

For the sake of comparison, we use the standardized variable , whose associated process is . Now let us choose an embedding dimension (sliding window length) with and let us use a lag of . With this choice we ensure that the shape of is well approximated by a Gaussian (see Fig. 2-(d)).

In Fig 3, we can confirm empirically the statement that the only requirements for obtaining a Gaussian distribution for , are the statistical independence in the observations and the continuity of the support of . These conditions can be relaxed to include processes with sufficiently rapid (i.e. exponential) decay of correlations as in the case of the deterministic chaotic trajectory, or to discrete processes with sufficiently large support.

The usefulness of our analysis is not limited to large values of the embedding dimension. In Fig. 4 we show the distributions for embedding dimensions , obtained from a chaotic trajectory of length from the logistic map with and initial condition . The discrepancies between the distributions for the chaotic trajectory and realizations of uniform white noise come from the impossibility of the logistic map of displaying some types of patterns, known as forbidden patterns [15]. For instance, in [15] it is shown that the pattern of the type , and more generally patterns of the form called outgrowth patterns (where indicates any other symbol in the pattern) cannot be displayed by orbits of the logistic map with . Although one of the drawbacks of our method is that we are limited to even values of , we can still see the effect of the forbidden patterns by the asymmetry in the distributions in Fig 4, which has less mass in the negative side of the support. This is because the forbidden pattern has a negative value of our functional , and thus, patterns that are negative in the sense will be more likely to belong to the outgrowth set patterns of . Correspondingly, an excess of positive patterns is observed, yielding an asymmetric distribution. This makes our analysis potentially useful for detecting signatures of determinism in an observed process by direct comparison with white noise. As it can be seen in Fig. 4, this effect is lost for a larger value of the lag due to the increased scale of observation and consequent loss of correlations.

V.1 3D Diffusion of Gold Nanoparticles

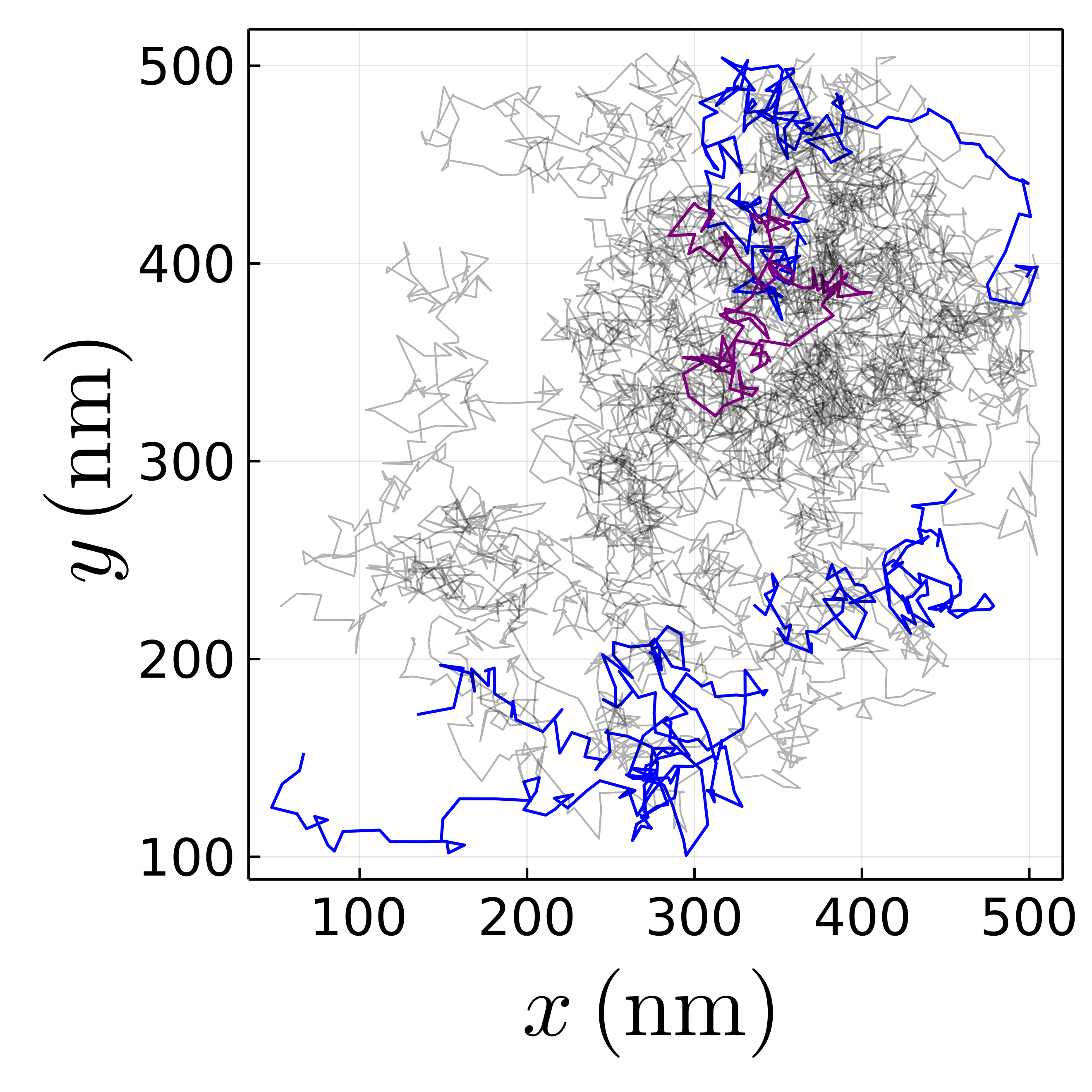

Now, let us analyze experimental data gathered using a recent and powerful technique for direct observation of the 3D dynamics of nanoparticles (NPs), known as liquid-cell scanning transmission electron microscopy (LCSTEM). Although it provides a very sharp resolution, this technique have been reported to yield observations of NP dynamics that is 3 to 8 orders of magnitude slower than the theoretical predictions [16]. This discrepancy can be atributed to the damping effect of the strong beam of electrons, the viscosity of the media, and interactions of the particles with the boundaries of the experimental cell [16]. In [16], Welling et al. address the problem of observed slowed down diffusion by tuning the electron beam to a low dose rate and using high viscosity media, such as glycerol, for the NP diffusion. With those modifications, they track the 3D diffusion of charge-neutral 77 nm gold nanoparticles (Au-NPs) in glycerol as well as charged 350 nm titania particles in glycerol carbonate. The independence between the spatial increments is one of the defining properties of Brownian motion. In the following we show how to use our transformed ordinal pattern framework for testing this independence in the experimental particle tracks from the set of Au-NPs in Ref. [16]. There are more than NP tracks observed in the - plane in this data set, whose original experimental labels are kept here for identification. We analyzed all the trajectories whose length is points (with a maximum of ), for a total of tracks (See Fig. 5).

The procedure is as follows. For each of the tracks ,

-

1)

Obtain the vectors of increments (analogously for ).

-

2)

Choose an embedding dimension and lag in order to apply the -analysis to . This yields a pair of vectors, denoted for simplicity in notation by and . The notation has to be interpreted as first making an embedding of the series with the chosen values and then applying the functional to the corresponding collection of ranking permutations.

-

3)

We get the standardized versions as

where and (Eq. 25). This amounts to obtaining the induced time series for both coordinates, i.e., .

-

4)

We now account for the correlations among the values that are introduced by construction, by computing the effective length of the vectors via [1] through the correction

(35) where is the autocorrelation function (ACF) of the series from the previous step, at time lag .

-

5)

Finally, since the variance of is known (), we can perform a one-sample -test on the series, under the assumption that the increments are independent, and using computed in previous step as the sample size. Therefore, our null hypothesis is simple: The mean value of the standardized variable is zero, . In other words, we want to test

(36) with a selected level of confidence.

The robustness of this test is guaranteed by the fact that the quantities and (Eqs. LABEL:Eq:SuffStats_alpha_s2) obtained in Sec. IV are sufficient statistics for . Therefore, we can rely on the statistic as the the unbiased estimator of the standardized mean . For each spatial dimension of the diffusion, we have the estimators . We will follow common practice and choose a confidence level of , corresponding to a type-I error (false-positive) rate of , denoted here by . This error rate is customarily denoted by “”, conflicting with the notation for the main object in the paper. The author hopes that this change from the standard notation does not affect the reading. Correspondingly, we will denote the rate of a type-II error (false-negative) as , customarily denoted by , and will require an power, or .

We choose an embedding dimension of , since in that case we can consider to be well aproximated by a Gaussian (see Fig. 2-(d)). For the lag we choose , because it ill give the maximum series length . The correlations introduced by this choice of lag are accounted for by Eq. (35), but, before proceeding with next steps, let us estimate the minimum value of that complies with the chosen and . This estimate for is given from the usual two-sided -test by [17]

| (37) |

where (see Eq. (23)), and are the quantiles of the standard normal distribution at and respectively, and is the desired threshold of detection for deviations from the mean, regularly written as proportional to the standard deviation, so .

For , , and , we get from Eq. (37). All of the trajectories considered have a corresponding , therefore we can reliably apply our test. A summary of the results of the test is provided in Table 4, where we show the tracks for which the null hypothesis is rejected (-value lower than ).

In contrast with the analysis displayed in Fig. 6, where only 3 -trajectories are detected as outliers (labels ), there are trajectories that get rejected by the single trajectory hypothesis test (See Table 4), with labels (-tracks) and (-track).

Nevertheless, the agreement between the single trajectory -tests and the independent analysis through the box plot is reasonably good, suggesting that the correction in Eq. (35) represents a sensible approximation that accounts for the autocorrelation in the processes.

| NP label | , -val | , -val | ||||

|---|---|---|---|---|---|---|

| 11 | 0.000 | 0.963 | -0.3902 | 0.0051 | 81 | 81 |

| 94 | 0.024 | 0.089 | 0.2849 | -0.2128 | 63 | 64 |

| 144 | 0.032 | 0.667 | -0.3620 | 0.0717 | 35 | 36 |

| 159 | 0.276 | 0.031 | -0.1700 | 0.3364 | 41 | 41 |

VI Discussion

We have illustrated the main advantages of avoiding working with the direct statistics of patterns by, instead, first dividing the symmetric group into classes through the functional defined by Eq. (6), so that the problem is reduced to analyze these classes. However, this procedure does not come without drawbacks, and next we will discuss this, as well as other positive points in more detail.

An interesting conceptual consequence of the presented view of white noise is, that a sense of typicality emerges in terms of the functional due to its concentration around zero. Therefore, the stationarity of white noise acquires a combinatorial character arising from statistical constraints.

In Sec. V, we have successfully shown a use case of our framework to test for independence in the spatial increments of diffusing particles in 3 dimensions, whose motion was recorded in a 2-dimensional plane. Although computing the ensemble averaged mean squared displacement (MSD) is the customary check for diffusive behavior, it does not provide the single-trajectory detail and statistical power of our method. Indeed, our method can be implemented for a single particle if that is the only information available, and still being reliable assuming a minimum effective trajectory length is achieved (as seen in Sec. V.1), which is a sensible requisite.

The time series available were of a relatively short length. Yet, notably, the test is able to handle these short time series. None of the trajectories were rejected by our test for the two dimensions at the same time. That could be interpreted as a good indication that the observation technique used in [16] performed well enough as to preserve the 3D Brownian diffusion overall, by keeping the introduction of correlations in the motion through the observation scheme at a minimum. After discussion with one of the authors in [16], we can explain the higher rejection rate for the -coordinate tracks by the observation procedure: The LCSTEM probe is progressively scanning line by line along the -dimension, thereby potentially introducing weak correlations in the particles motion in that direction.

The former is supported from the theory exposed and the reasonably good agreement between our analysis and the quartile analysis in Fig. 6. Furthermore, the quartile analysis did not determined a track whose -component was rejected by our test, suggesting our method is more sensitive for a given confidence level.

For all the examples of white noise processes considered in Sec. V, the customary ordinal pattern analysis for the PE computation would be practically impossible for the choice of used here, since we have to keep track of patterns. The analysis and graphical representation of the final distribution would be also impossible without a further coarsening of the support, something that would render the analysis crippled of the detail that characterizes it in the first place. Instead, in the present framework the size of the support of our distribution is (see Sec.II). This is an important simplification, while still keeping relevant information about the patterns both in terms of correlations, and even rough information about the variation of the amplitudes in the form of weights. A remarkable aspect of our framework when comparing white noise of different sources is the robustness of the empirical distributions. The empirical distribution over in the customary PE approach approximates a discrete uniform with support , implying that for moderate to large , it would display strong variations when estimated from a finite sample. In the considered case with and series length , the number of observations falls extremely short for having at least one representative out of the possible patterns. Therefore the obtained empirical density would be composed mostly of void regions and uneven peaks. In order to prevent this effect, in our current example of time series of length one should limit to () and still, we could get an uneven empirical density with gaps despite the rather large time series. In contrast to this, in our approach there are just classes to keep track of, as in the illustrative examples considered in Fig. 3. The choice was done mainly to guarantee a good approximation of by a Gaussian p.d.f. Nevertheless, we can think of alternative analysis for lower values exploiting the fact that we know the specific form of for hypothesis testing.

Now, let us address the autocorrelations introduced in the embedding procedure. We already mentioned in Sec. III that the sequence of values obtained from a time series are correlated due to the overlap among the embedding vectors, that in turn translates into overlapping ranking permutations and, finally, correlated values. This is specially so for low values of the lag . As illustrated in Sec. V, for large enough values of , the effect of the correlations in the original process can be significantly diminished, but also the overlap between the patterns can be diminished or eliminated (see Fig.4). Nevertheless, even for low , the autocorrelations in the series do not affect the Gaussian character of , since this characteristic comes from the fact that, as , the partial sums discussed in Sec. III are composed of integers from the uniform distribution, that become effectively independently sampled as grows, and thus the Central Limit Theorem applies. This is the same effect as the effective loss of statistical dependence when drawing with replacement from a very large pool. Thus, despite the correlations introduced by construction in the method, this does not come at a cost so high that it ruins the statistical power of the analysis, specially for large , large , or both large values.

The absence or over representation of patterns that is expected to happen due to finite sampling is lessened by the fact that it is very likely that a pattern in the same or a similar class will take the place of the missing one. Or vice-versa, over represented patterns in a sample would induce over representation of patterns in neighboring classes for low lag values, specially when the window has the greatest overlap. This has the overall effect of perturbing the shape of the Gaussian around the over represented pattern. It is a similar situation for absent patterns. An example of this can be seen for the chaotic trajectory of the logistic map in Fig. 3-(f), where there is a region in the center which gets a slightly increased probability density than it should for a white noise process. This is explained by the missing forbidden patterns as discussed in Sec. V. The over representation of the patterns with values of around zero is apparently more likely to happen for the processes with bounded support as is the case of Uniform white noise and the deterministic chaotic trajectory (See Fig 3(e)-(f)). This could be explained by the boundeness of the noise and the finiteness of the trajectory, making less likely that increasing (decreasing) sequences appear, corresponding to positive (negative) values of that are far from the average around zero. Thus, values get over represented.

A major drawback for the applicability of our framework is, that an even embedding dimension must be used. Nevertheless, a workaround to this could be to perform the analysis for the adjacent even values of the desired odd value if the actual odd structure of the patterns is not relevant. Furthermore, in principle the odd analysis is also possible by the Definition 1 (Eq. (5)) that we can approximate the exact distribution of the corresponding functional since we have the general expression 11 describing the statistics of the degeneracy of for any choice of and . This degeneracies can be computed numerically from the expression 11 by means of a recursion for the Gaussian binomial coefficients [10]. The distributions for for odd thus obtained are skewed, but still bell-shaped. We wish to make a general analysis of this case, together with possible practical implications in a future work.

To finish, we stress that an important message conveyed with this contribution is, that the full detail of the customary ordinal pattern analysis (prior to PE computation) is not needed and instead hinders its statistical applications and, on the other hand, that the computation of the Shannon entropy directly from the ordinal pattern analysis washes away information that is valuable statistically. The approach presented here represents a middle ground with several extra benefits and relatively minor drawbacks.

Acknowledgements.

Funding for this work was provided by the Alexander von Humboldt Foundation in the framework of the Sofja Kovalevskaja Award endowed by the German Federal Ministry of Education and Research, through Matteo Smerlak. The author acknowledges Tom Welling, Marijn A. van Huis, Professor van Blaaderen and their collaborators for sharing their experimental data. David Davalos, Holger Kantz and Mario Díaz-Torres are acknowledged for useful discussions and comments.References

- Gelman et al. [2013] A. Gelman, J. B. Carlin, H. S. Stern, D. B. Dunson, A. Vehtari, and D. B. Rubin, Bayesian Data Analysis, 3rd ed. (CRC Press, 2013).

- Kantz and Schreiber [2003] H. Kantz and T. Schreiber, Nonlinear Time Series Analysis, 2nd ed. (Cambridge University Press, 2003).

- Bandt and Pompe [2002] C. Bandt and B. Pompe, Permutation entropy: A natural complexity measure for time series, Phys. Rev. Lett. 88, 174102 (2002).

- Zanin et al. [2012] M. Zanin, L. Zunino, O. A. Rosso, and D. Papo, Permutation entropy and its main biomedical and econophysics applications: A review, Entropy 14, 1553 (2012).

- Zanin and Olivares [2021] M. Zanin and F. Olivares, Ordinal patterns-based methodologies for distinguishing chaos from noise in discrete time series, Communications Physics 4, 10.1038/s42005-021-00696-z (2021).

- Bandt et al. [2002] C. Bandt, G. Keller, and B. Pompe, Entropy of interval maps via permutations, Nonlinearity 15, 1595 (2002).

- Misiurewicz [2003] M. Misiurewicz, Permutations and topological entropy for interval maps, Nonlinearity 16, 971 (2003).

- [8] D. J. Little and D. M. Kane, Phys. Rev. E doi.org/10.1103/PhysRevE.94.022118.

- Little and Kane [2017] D. J. Little and D. M. Kane, Variance of permutation entropy and the influence of ordinal pattern selection, Phys. Rev. E 95, 052126 (2017).

- Boná [2012] M. Boná, Combinatorics of Permutations (CRC Press, 2012).

- Andrews and Eriksson [2004] G. E. Andrews and K. Eriksson, Integer Partitions, 1st ed. (Cambridge University Press, 2004).

- Wilf [1994] H. S. Wilf, Generatingfunctionology (Academic Press, 1994).

- Cover and Thomas [2005] T. M. Cover and J. A. Thomas, Elements of Information Theory (2005) pp. 1–748.

- Keller and Nowicki [1992] G. Keller and T. Nowicki, Spectral theory, zeta functions and the distribution of periodic points for Collet-Eckmann maps, Communications in Mathematical Physics 149, 31 (1992).

- Amigó et al. [2007] J. M. Amigó, S. Zambrano, and M. A. Sanjún, True and false forbidden patterns in deterministic and random dynamics, Epl 79, 10.1209/0295-5075/79/50001 (2007).

- Welling et al. [2020] T. A. Welling, S. Sadighikia, K. Watanabe, A. Grau-Carbonell, M. Bransen, D. Nagao, A. van Blaaderen, and M. A. van Huis, Observation of Undamped 3D Brownian Motion of Nanoparticles Using Liquid-Cell Scanning Transmission Electron Microscopy, Particle and Particle Systems Characterization 37, 10.1002/ppsc.202000003 (2020).

- DeGroot and Schervish [2012] M. H. DeGroot and M. J. Schervish, Probability and Statistics, 4th ed. (Pearson Education Inc., 2012).

- Shao [2007] J. Shao, Mathematical Statistics, 2nd ed. (Springer-Verlag, 2007).

- Marchal and Arbel [2017] O. Marchal and J. Arbel, On the sub-Gaussianity of the Beta and Dirichlet distributions, Electronic Communications in Probability 22, 1 (2017), 1705.00048 .

- Hoeffding [1963] W. Hoeffding, Probability Inequalities for Sums of Bounded Random Variables, Journal of the American Statistical Association 58, 13 (1963).

- Chernoff [1952] H. Chernoff, A Measure of Asymptotic Efficiency for Tests of a Hypothesis Based on the sum of Observations, The Annals of Mathematical Statistics 23, 493 (1952).

Appendix A Continuous approximation for finite permutation length

It is clear from Fig. 2, that the p.m.f is not well approximated by a Gaussian for low values of . Therefore, we propose a Beta distribution as a better continuous approximation for this case. Recall its probability density function (p.d.f.)

| (38) |

where .

To match the random variable with the Beta random variable , we need first to transform the support of in Eq. (7) to be a subset of the unit interval by a shift and re-scaling

| (39) |

where is a Beta p.d.f. (Eq. 38). As in the case of , here we adopt the notation for the transformed random variable, for its realizations and for the p.m.f..

| (40) | |||||

| (41) |

This low approximation of by a Beta distribution is consistent with the fact that in the limit the Beta p.d.f. in Eq. (38) converges to a Gaussian, as seen in Sec IV.

The random variable can be interpreted as a probability itself. A value would correspond to the probability of a pattern to have a degree of asymmetry ranging from to . This means that is the probability of sampling an overall decreasing pattern, is the probability of sampling an overall constant pattern, and is the probability of finding overall increasing patterns.

The Chebyshev-Pearson method of moments [18] provides the following expressions for estimating the parameters of the distribution from the mean and variance from a random sample

| (42) | |||||

| (43) |

Since in our computations the sample comprises the population itself (the whole symmetric group) the parameters we have characterized so far are the exact population mean and the exact variance, respectively, i.e., , , , . Then, replacing Eqs. (41) in formulas (LABEL:Eq:MethodMoments_BetaParams) to obtain the exact parameters

| (44) |

that becomes for , in agreement with the symmetry of and the linear increase observed for the parameters from the numerical computations. For the case we get . In this limit, the p.d.f. for the Beta distribution in Eq. (38) becomes a Bernoulli distribution with , which is precisely the same as for , or, after a location shift to zero, is also equivalent to and which are symmetric Bernoulli distributions.

Appendix B Concentration of measure by

Before drawing a conclusion on the continuous asymptotic shape of , let us characterize its moments.

First, we find a bound for the scaling of in order to test formula 25 through the following sub-gaussianity argument.

A random variable with finite expectation is called sub-gaussian if there exists a positive number such that

| (45) |

The constant is called proxy variance or sub-gaussian norm [19]. From the classical Hoeffding’s theorem [20] together with the definition in Eq. (45), we can conclude that every integrable random variable supported on a compact set is sub-gaussian [19]. Therefore, considering that for fixed our functional is bounded , we conclude that is subgaussian, and so it is .

The left-hand side of inequality (45) is nothing more than the moment generating function of the random variable . If we apply of Markov’s inequality to we obtain

| (46) | |||||

by finding the minumum in the right-hand side of the inequality in Eq. (46) with respect to the parameter we get the optimal bound (Chernoff bound [17],[21]) for subgaussian random variables [19]

| (47) |

which is an equivalent but more usable statement of the subgaussianity property, Eq. (45). Although inequalities (46) and (47) are more useful when treating sums of i.i.d variables due to factorizability, they are valid also for certain instances of dependency in the samples, such as sampling without replacement [20]. Computing is a problem of sampling symbols without replacement from the set , so we can apply these techniques in our problem. Considering that and the fact that we always know the tail probability of

| (48) | |||||

as stated in the previous section. Using Eq. (47) with together with Eq. (48), taking logarithm in both sides and using Strling’s approximation for the logarithm of the central binomial coefficients up to the first term: , and recalling , we get the bound

| (49) |

which reduces rapidly to

| (50) |

as , which is consistent with formula (26) for .