A Color Elastica Model for Vector-Valued Image Regularization

Abstract

Models related to the Euler’s elastica energy have proven to be useful for many applications including image processing. Extending elastica models to color images and multi-channel data is a challenging task, as stable and consistent numerical solvers for these geometric models often involve high order derivatives. Like the single channel Euler’s elastica model and the total variation (TV) models, geometric measures that involve high order derivatives could help when considering image formation models that minimize elastic properties. In the past, the Polyakov action from high energy physics has been successfully applied to color image processing. Here, we introduce an addition to the Polyakov action for color images that minimizes the color manifold curvature. The color image curvature is computed by applying of the Laplace–Beltrami operator to the color image channels. When reduced to gray-scale images, while selecting appropriate scaling between space and color, the proposed model minimizes the Euler’s elastica operating on the image level sets. Finding a minimizer for the proposed nonlinear geometric model is a challenge we address in this paper. Specifically, we present an operator-splitting method to minimize the proposed functional. The non-linearity is decoupled by introducing three vector-valued and matrix-valued variables. The problem is then converted into solving for the steady state of an associated initial-value problem. The initial-value problem is time-split into three fractional steps, such that each sub-problem has a closed form solution, or can be solved by fast algorithms. The efficiency and robustness of the proposed method are demonstrated by systematic numerical experiments.

1 Introduction

In image processing and computer vision, a fundamental question related to denoising and enhancing the quality of given images is what is the appropriate metric. In the literature, image regularization for gray-scale images has been extensively studied. One class of such models takes advantage of the Euler elastica energy defined by [19, p.63]

| (1) |

where is a gray-scale image given as a function on a bounded domain , , with the coordinates of the generic point of , while and are two positive scalar model parameters.

Euler’s elastica has a wide range of uses in image processing, such as image denoising [21, 38, 47], image segmentation [2, 11, 46, 49], image inpainting [35, 38, 45], and image segmentation with depth [13, 27, 48], to name just a few. An important image denoising model, incorporating the Euler’s elastica energy, is

| (2) |

where is the input image we would like to enhance and denoise. To find the minimizer of (2), one class of methods is based on the augmented Lagrangian method. In [38], an augmented Lagrangian method was proposed for image denoising, inpainting and zooming. Based on the method in [38], [12] suggested a fast augmented Lagrangian method and [47] proposed a linearized augmented Lagarangian method. A split-Bregman method was suggested in [45] to solve a linearized elastica model introduced in [1]. Recently, an almost ‘parameter free’ operator-splitting method was presented in [9]; this method is efficient, robust, and has less parameters to adjust compared to augmented Lagrangian based methods.

A color image is a vector-valued signal which can be represented, for example, by three RGB channels or four CMYK channels. While there are many papers and models that deal with gray-scale image regularization, most of them can not be trivially extended to handle color images when taking the geometric properties of the color image into consideration. Inspired by an early paper [10] that focused on regularization functionals on vector-valued images, [34] proposed an anisotropic diffusion framework and [5] introduced a color TV norm for vector-valued images. Another generalization of the scalar TV, known as the vectorial total variation, was proposed in [33] and studied in [20]. A total curvature model was proposed in [39], in which the color TV term in [5] is replaced by the sum of the curvatures of each channel. A generic anisotropic diffusion framework which unifies several PDE based methods was studied in [40]. The Beltrami framework was proposed in [36], it considers the image as a two-dimensional manifold embedded in a five-dimensional space-color space. The image is regularized by minimizing the Polyakov action [28], a surface area related functional. Evolving the image according to the Euler–Lagrange equation of the functional gives rise to the Beltrami flow. To accelerate the convergence rate of the Beltrami flow, a fixed-point method was used in [3] and a vector-extrapolation method was explored in [29]. In [37], the authors used a short-time kernel of the Beltrami flow to selectively smooth two-dimensional images on manifolds. Later on, a semi-implicit operator-splitting method [30] and an ALM based method [31] were proposed to minimize the Polyakov action for processing images. In [50], the authors used a primal-dual projection gradient method to solve a simplified Beltrami functional. A fidelity-Beltrami-sparsity model was proposed in [42], in which a sparsity penalty term was added to the Polyakov action. A generalized gradient operator for vector bundles was introduced in [4], which is applied in vector-valued image regularization. In [6], the Beltrami framework has been applied in active contour for image segmentation. Finally, in [32, 43] the Beltrami framework was extended to deal with patch based geometric flows, or anisotropic diffusion in patch space.

Most existing models for vector-valued image regularization contain only first order derivatives, like the Polyakov action [36], color TV [5] and vectorial TV [20]. This maybe insufficient when considering image formation models containing geometric properties, like the curvature as captured in the single channel Euler’s elastica model. The Polyakov action was shown to be a natural measure which minimization leads to selective smoothing filters for color images. To enrich the variety of image formation models, here, we propose a color elastica model which incorporates the Polyakov action and a Laplace–Beltrami operator acting on the image channels, as a way to regularize color images. While the Polyakov action measures the area of the image manifold, the surface elastica can be computed by applying the Laplace–Beltrami operator on the color channels. It describes a second order geometric structure that allows for more flexibility of the color image manifold and could relax the over-restrictive area minimization. The new model is a natural extension of the Euler elastica model (2) to color images, which takes the geometrical relation between channels into account. Compared to the Polyakov action model, the suggested model is more challenging to minimize since the Laplace–Beltrami term involves high order nonlinear terms. Here, we use an operator-splitting method to find the minimizer of the proposed model. To decouple the non-linearity, three vector-valued and matrix-valued variables are introduced. Then, finding the minimizer of the proposed model can be shown to be equivalent to solving a time-dependent PDE system until the steady state is reached. The PDE system is time-discretized by the operator-splitting method such that each sub-problem can be solved efficiently. To the best of our knowledge, this is the first numerical method to solve the color elastica model. Unlike deep learning methods, the suggested model does not require training, though the model itself could be used as an unsupervised loss in general settings. Numerical experiments show that the proposed model is effective in selectively smoothing color images, while keeping sharp edges.

While the model we propose may not outperform some existing methods like the BM3D [8] and some other deep learning methods [25, 44, 24] on vector-valued image regularization, it manifests properties which have simple geometric interpretations of images. The proposed model provides a new perspective on how to regularize vector-valued images and can be used in many other image processing applications, like image inpainting and segmentation, or as a semi-supervised regularization loss in network based denoising and deblurring. In addition, the model and algorithm proposed here could be also important for many other applications with vector-valued functions that need to be regularized. Many inverse problems, surface processing and image construction problems need this.

The rest of this paper is structured as follows: In Section 2, we briefly review the Polyakov action and the Beltrami framework. The color elastica model is introduced in Section 3. We derive the operator-splitting scheme in Section 4 and describe its finite difference discretization in Section 5. In Section 6, we demonstrate the efficiency, and robustness of the proposed method by systematic numerical experiments. We conclude the paper in Section 7.

2 The Polyakov action

In this section we briefly review the Riemannian geometry relevant to the Polyakov action as applied to color images. Since the Polyakov action is adopted from high energy physics, we temporarily follow the Einstein’s summation notation convention in this section. We first introduce the metric on the Riemannian manifold. Denote a two-dimensional Riemannian manifold embedded in by . Let be a local coordinate system of and be the embedding of into . Given a metric for , of which is a symmetric positive definite matrix-valued metric tensor, the squared geodesic distance on between two points and is defined by,

Assume embeds into a -dimensional Riemannian manifold with metric . If is known, we can deduce the metric of by the pullback,

| (3) |

where denotes the partial derivative with respect to the coordinate, .

Consider a color image where denote the spatial coordinates in the image domain, and denote the RGB color components. can be considered as a two-dimensional surface embedded in the five-dimensional space-feature space: , where is a scalar parameter controlling the ratio between the space and color. Assuming the spatial and color spaces to be Euclidean, we have on the space-feature space, where is the Kronecker delta, if and otherwise. Set . According to (3), the metric on the image manifold is

| (4) |

Denote the image manifold and its metric, and its space-feature space and the metric on it. The weight of the mapping is measured by

| (5) |

for and , where and with and being the inverse of the image metric . With , the functional is known as the Polyakov action [28] in String Theory.

Substituting and (4) into (5) gives rise to

| (6) |

Minimizing (6) by variational gradient descent, yields the Beltrami flow [23],

| (7) |

where is a column vector by convention. The term on the right hand side of (7) is known as the Laplace–Beltrami operator applied to .

Notations

In the rest of the paper, we will use regular letters to denote scalar-valued functions, and bold letters to denote vector-valued and matrix-valued functions.

3 Formulation of the color elastica model

We assume the domain to be a rectangle. Periodic boundary condition is a common condition in image processing. In this paper, we assume all variables (scalar-valued, vector-valued and matrix-valued) have the periodic boundary condition. Let be the given -dimensional signal to be smoothed, in which each component represents a channel. Based on the Polyakov action (6), we define the color elastica model as,

| (8) |

where , are weight parameters, denotes the Sobolev space with periodic boundary condition on :

Similar to (4), let be a symmetric positive-definite metric matrix depending on , where,

for some . We denote . in (8) is the Laplace–Beltrami operator associated to ,

| (9) |

Here, we consider the case for color images: whose components represent the RGB channels. Then is the metric of the surface induced by the denoised image .

Remark 3.1.

As described in Section 2, represents the ratio between the spatial and feature coordinates. As goes to , the ratio vanishes and the space-feature space reduces to the three-dimensional color space.

Remark 3.2.

Remark 3.3.

In the one channel case, the Polyakov action relates closely to existing models. As , the Polyakov action converges to the TV model, and the corresponding gradient flow converges to a level set mean curvature flow. As , the gradient flow of the Polyakov action converges to a diffusion process, i.e., Gaussian smoothing. In the multi-channel case, the Polyakov action contains interactions between channels. By letting , the Polyakov action does not reduce to the color TV model suggested in [5]. The color area minimization also tries to remove potential torsion of the image manifold and align the gradient directions of different channels. Correspondingly, its gradient flow converges to the mean curvature flow in the color space. Thus, the Polyakov action can be thought of as a natural extension of the TV model in the multi-channel case. By adding the Laplace-Betrami operator (LBO) acting on the color channels to the Polyakov action, the proposed color elastica model becomes a natural extension of the Euler’s elastica model, since it reduces to the Euler’s elastica model in one-channel case as . Moreover, when the measure we end up with captures the area of the image manifold in the space for which the minimization is a mean curvature flow. The new term we add is the LBO acting on the coordinates which is, in fact, the mean curvature vector of the surface, here denoted by . Thus, we end up minimizing the squared value of the mean curvature for .

The energy in (8) is nonlinear and is difficult to minimize, since it contains the determinate and inverse of a Hessian matrix. To simplify the nonlinearity, we introduce three vector-valued and matrix-valued variables. For and , let us denote by the real valued function and by the Jacobian matrix

Denote . We introduce the matrix with

| (12) |

for each row of . We immediately have . We shall use to be the matrix-valued function defined by

| (13) |

and denote by , that is,

Define to be the solution of

| (14) |

Denote

We define the sets and as

| (15) | |||

| (16) |

and their corresponding indicator functions as

If is the minimizer of the following energy

| (17) |

In (17), we rewrite the Laplace–Beltrami term as where linearly depends on . The metric is also a function of . In the fidelity term, which solves (14) can be uniquely determined by . Thus the functional only depends on and under some constraints on their relations. We then remove the constraints by incorporating the functional with the two indicator function and . The resulting problem (17) is an unconstrained optimization problem with respect to and only.

Remark 3.4.

While the periodic boundary condition is used in this paper, we would like to mention that zero Neumann boundary condition is another natural condition to consider. The model and algorithm proposed in this paper can be adapted to zero Neumann boundary condition with minor modification.

4 Operator splitting method

In this section, we derive the operator-splitting scheme to solve (17). We refer the readers to [18] for a complete introduction of the operator-splitting method and to [17] for its applications in image processing.

4.1 Optimality condition

Denote

| (18) | |||

| (19) |

with . If is the minimizer of (17), it satisfies

| (20) |

where denotes the partial derivative with respect to if its operand is a smooth function, and the sub-derivative if its operand is an indicator function. To solve (20), we introduce the artificial time and associate it with the initial value problem

| (21) |

where is the initial condition which is supposed to be given, and are positive constants controlling the evolution speed of and , respectively. The choice of will be discussed in Section 4.4.

4.2 Operator-splitting scheme

Similar to [16, 26, 15], we use an operator-splitting method to time discretize (21). One simple choice is the Lie scheme [19]. We denote as the iteration number, by , the time step by and set . Since only contains , when there is no ambiguity, we write and interchangeably. We update as follows,

Initialization

| (22) |

Fractional step 1

Solve

| (23) |

and set .

Fractional step 2

Solve

| (24) |

and set .

Fractional step 3

Solve

| (25) |

and set .

Scheme (23)-(25) is only semi-discrete since we still need to solve the three sub-initial value problems. There is no difficulty in updating in (23)-(25) if is fixed, since we have the exact solution . To solve other subproblems, we suggest to use the Marchuk-Yanenko type discretization:

Initialize .

For , we update

as:

| (26) | |||

| (27) | |||

| (28) |

In the following subsections, we discuss the solution of each of the sub-PDE systems (26)-(28).

Remark 4.1.

Scheme (22)-(25) is an approximation of the gradient flow. Thus the convergence rate of the proposed scheme closely relates to that of the gradient flow together with an approximation error. It is shown that when there is only one variable and the operators in each step is smooth enough, the approximation error is of (see [7] and [14, Chapter 6]). In this article, due to the complex structures of and , we cannot use existing tools to analyze the convergence rate and need to study it separately.

4.3 On the solution of (26)

If is the minimizer of the following problem, then the Euler-Lagrangian equation for it is exactly (26) and this means solves (26):

| (29) |

This problem can be solved by Newton’s method. The functional in (29) is in the form of

| (30) |

for some . The first and second order variation of with respect to are

| (31) | |||

| (32) |

with

| (33) | ||||

| (34) |

for . From an initial guess of , is updated by

| (35) |

until for some stopping criterion . Then we set where is the converged variable.

Remark 4.2.

In our algorithm, the initial guess of is set to be , the minimizer of (29) in the previous iteration. As long as the time step is small enough, and are close to and , respectively. Thus, the functional in (29) does not change too much from that in the previous iteration. It is reasonable to expect its minimizer is close to , and is a good initial guess. This is verified in our numerical experiments.

4.4 On the solution of (27) and the choice of

The solution is the minimizer of

| (38) |

Recall that

Thus, we have . Substituting this into (38), the right hand side becomes a functional of only:

| (39) |

For simplicity, we temporally use to denote in this subsection. Computing the variation of with respect to gives

| (40) |

with

By the optimality condition, we get that

| (41) |

for . And is computed as

| (42) |

4.5 On the solution of (28)

In (28), since is the solution to (14), we write as for . If minimizes (28), is the solution to

| (45) |

Equivalently, is the weak solution of

| (46) |

Problem (46) can be solved by FFT. Once it has been solved, is updated as .

Our algorithm is summarized in Algorithm 1.

Remark 4.3.

We remark that the proposed algorithm can be easily extended to solve image deblurring problems. Consider the following deblurring model (a variant of (8))

| (47) |

where with being the blurring kernel and denoting convolution. Define the set as

If is the minimizer of the following energy

| (48) |

then solving

| (49) |

minimizes (47). Scheme (26)-(28) can be applied to solve (48) by replacing by and by

in (28). The solution to the new subproblem can be solved as , where

| (50) |

with and being the adjoint of . (50) can be solved by FFT using the celebrated convolution theorem.

5 Space discretization

5.1 Basic discrete operators

We assume to be a rectangle with pixels. are used to denote the two spatial directions along which all functions are assumed to be periodic. We use spatial step . For a vector-valued function (resp. scalar-valued function ) defined on , we denote its -th pixel by (resp. ). By taking into account the periodic boundary condition, the backward () and forward () approximation for and are defined as

Based on the above notations, the backward () and forward () approximation of the gradient and the divergence are defined as

In our discretization, only and are used so that recovers the central difference approximation of .

Define the shifting and identity operator as

| (51) |

and denote the discrete Fourier transform and its inverse by and , respectively. We have

We use to denote the real part of its argument.

5.2 Numerical solution for in (29)

In this subsection we discretize the updating formula of in problem (29). We set . In , the divergence is approximated by for . is computed as

Then and can be computed pointwise. Set an initial condition . Then is updated through (35) until it converges. Denoting the converged variable by , we update . In our algorithm, is used.

5.3 Numerical solution for in (37)

Problem (37) is discretized as

| (52) |

for . Instead of solving (52), we use the frozen coefficient approach (see [9, 22]) to solve

| (53) |

with some properly chosen . We suggest to use . (53) can be written in matrix form

| (54) |

with

The linear system (54) is equivalent to

| (55) |

Applying the discrete Fourier transform on both sides gives rise to

where

with for and . We have

5.4 Numerical solution for in (38)

5.5 Numerical solution for in (46)

5.6 Initialization

For initial condition, we first initialize or . Then we initialize as

| (63) |

for and

| (64) |

is computed as

| (65) |

6 Numerical experiments

In this section, we present numerical results that demonstrate the effectiveness of the proposed model and solver. All experiments are implemented in MATLAB(R2018b) on a laptop of 8GB RAM and Intel Core i7-4270HQ CPU: 2.60 GHz. For all of the images used, the pixel values are in . For simplicity, is used. For all experiments, is used in the Newton method (35) to update .

We consider noisy images with Gaussian noise and Poisson noise. For Gaussian noise, the parameter is the standard deviation (denoted by ). The larger is, the heavier the noise. In Poisson noise case, the parameter is the number of photons (denoted by ). Images have better quality with more photons. When adding Poisson noise, we use the MATLAB function imnoise. To add Poisson noise with photons to an image , we refer to the function as imnoise(,’poisson’).

In our experiments, when not specified otherwise, we use , , and stopping criterion .

| (a) | (b) | (c) |

|---|---|---|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| (a) | (b) | (c) |

|---|---|---|

|

|

|

|

|

|

|

|

|

|

|

|

|

(a)

Proposed

Polyakov

CTV

VTV

Portrait

33.09

(0.94)

30.54

(0.91)

31.69

(0.90)

32.92

(0.96)

Chips

33.88

(0.97)

31.81

(0.97)

32.41

(0.96)

33.67

(0.97)

(b)

Proposed

Polyakov

CTV

VTV

Portrait

128

(69.73)

79

(3.12)

99

(2.62)

287

(10.79)

Chips

113

(61.22)

90

(3.77)

102

(2.76)

301

(14.49)

| Noisy | Proposed | Polyakov | CTV | VTV |

|---|---|---|---|---|

|

|

|

|

|

|

|

|

|

|

(a)

Proposed

Polyakov

CTV

VTV

Portrait

27.71

(0.86)

26.00

(0.82)

26.30

(0.80)

27.33

(0.88)

Vegetables

27.13

(0.83)

26.05

(0.79)

25.93

(0.78)

26.57

(0.83)

(b)

Proposed

Polyakov

CTV

VTV

Portrait

153

(75.88)

135

(5.56)

118

(3.17)

421

(16.95)

Vegetables

117

(15.95)

141

(1.49)

125

(0.75)

364

(4.12)

| Noisy | Proposed | Polyakov | CTV | VTV |

|---|---|---|---|---|

|

|

|

|

|

|

|

|

|

|

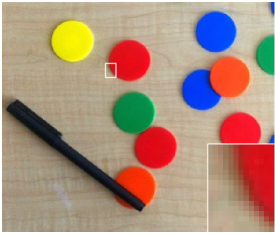

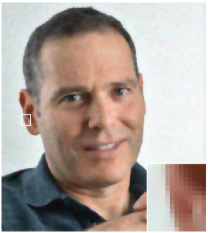

6.1 Image denoising for Gaussian noise

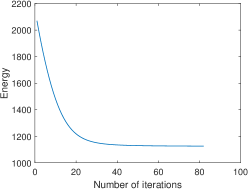

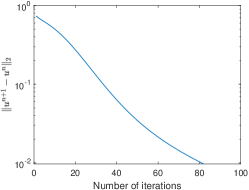

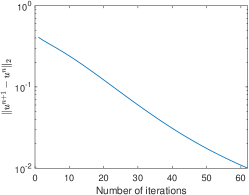

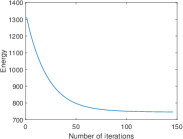

We use the proposed model to denoise images with Gaussian noise. We add Gaussian noise with to three images: (a) portrait, (b) orange ball and (c) chips (shown in the first row of Figure 1). The noisy images and denoised images with are shown in the second and third rows of Figure 1, respectively. is used for the orange ball, and is used for the portrait and chips. For all of three examples, while they are denoised, sharp edges are kept. To demonstrate the efficiency of the proposed method, in Figure 2, we present the evolution of the energy and of the three examples shown in Figure 1. For the three examples, the energy decreases very fast and achieves the minimum within 40 iterations. Linear convergence is observed on the evolution of .

We next add Gaussian noise with to these images. The noisy and denoised images are shown in Figure 3. The evolution of the energy w.r.t. the number of iterations are also shown in the third row. Our method is efficient and performs well. The energy achieves its minimum within 70 iterations. To better demonstrate the smoothing effect, we select the zoomed region of the orange ball and show the surface plot of each channel (RGB channel correspond the red, green and blue surface) in Figure 4. The surface plot of the noisy image is shown in the first row, which is very oscillating. The surface plot of the denoised image is shown in the second row. The surfaces of all three channels are very smooth which verifies the smoothing property of the proposed model.

We then compare the proposed model with the Polyakov action model [23], the color total variation (CTV) model [5] and the vectorial total variation (VTV) model [20]. For Gaussian noise with , the denoised images by these models are shown in Figure 5. In the proposed model, is used. In CTV and VTV, we set and respectively. Compared to other models, the proposed model smoothens flat regions while keeping sharp edges and textures. To quantify the difference of the denoised images, we compute and compare the PSNR and the SSIM [41] value of each denoised image in Figure 5 and present these values in Table 2(a). The proposed model and VTV outperform the other two models in both values. For the image of Chips, the result by VTV smoothens out almost all textures of the background. In comparison, these textures are kept by our proposed model. The number of iterations and CPU time of different methods are reported in Table 2(b). Due to the complex structures of the color elastica model, the proposed method is slower than the other three methods.

To further demonstrate the effectiveness of the proposed model, we compare these models on images with heavy Gaussian noise. In Figure 6, the noisy images contaminated by Gaussian noise with are shown in the first column. The results by the proposed method, the Polyakov action, CTV and VTV are shown in Column 2 - Column 5, respectively. In the proposed model, is used. In CTV and VTV, we set and respectively. The PSNR and the SSIM value of each denoised image are shown in Table 2(a). The proposed model and VTV outperform the other two models in both values. While VTV is powerful in smoothing the flat region of images, the edges in the denoised image are oscillating. Compared to VTV, the proposed model provides results with smooth and sharp edges, and with larger PSNR values. This justifies the effectiveness of the high-order derivatives in the proposed model. The number of iterations and CPU time of different methods are reported in Talbe 2(b).

| (a) | (b) | (c) |

|---|---|---|

|

|

|

|

|

|

|

|

|

| (a) | (b) | (c) |

|---|---|---|

|

|

|

|

|

|

| (a) | (b) | (c) |

|---|---|---|

|

|

|

|

|

|

| (a) | (b) | (c) |

|---|---|---|

|

|

|

|

|

|

|

(a)

Proposed

Polyakov

CTV

VTV

Stop Sign

32.05

(0.99)

28.52

(0.98)

30.36

(0.98)

31.73

(0.97)

Vegetables

31.25

(0.98)

30.04

(0.98)

30.60

(0.97)

31.04

(0.98)

(b)

Proposed

Polyakov

CTV

VTV

Stop Sign

152

(52.47)

73

(2.03)

103

(2.05)

307

(7.94)

Vegetables

128

(18.86)

85

(0.94)

111

(0.67)

243

(2.79)

6.2 Image denoising for Poisson noise

We explore the performance of the proposed method to denoise Poisson noise with . Our examples are (a) the lighthouse, (b) stop sign and (c) vegetables, see the first row in Figure 7. The noisy images and denoised images with are shown as the second row and third row in Figure 7, respectively. Similar to the performance on Gaussian noise, our method keeps sharp edges. The evolution of the energy and of these examples are shown in Figure 8. All energies achieve their minimum within 50 iteration.

Then, we add heavy Poisson noise with . The noisy and denoised images are shown in Figure 9. In this set of experiments, is used. We also show the evolution of energy against the number of iterations in Figure 10. All energies achieve their minimum in about 100 iterations. Figure 11 shows the surface plot of the zoomed region of the stop sign in Figure 9. The surface plot of the denoised image is smooth while that of the noisy image is very oscillating. We then compare the denoised images by the proposed model, the Polyakov action model, CTV and VTV in Table 3, which presents the PSNR, SSIM, number of iterations and CPU time of each denoised image. In the proposed model, is used. In CTV and VTV, we set and respectively. Among all methods, the proposed method provides results with the largest PSNR and SSIM value.

| (a) | (b) | (c) |

|---|---|---|

|

|

|

| (a) | (b) | (c) |

|---|---|---|

|

|

|

|

|

|

| (a) | (b) | (c) |

|---|---|---|

|

|

|

|

|

|

| (a) | (b) | (c) |

|

|

|

| (d) | (e) | (f) |

|

|

|

6.3 Effect of

The regularization term (first term) in (8) depends on two quantities: the surface area and the color elastica . Both terms are closely dependent on . In this section, we explore the effect of by checking the behavior of both terms w.r.t. the noise level with and . The images used are shown in Figure 12: (a) orange ball, (b) crystal cube and (c) fruits.

The first experiment involves Gaussian noise for which the noise level is controlled by : images have better quality with smaller . In Figure 13, we show the behavior of both terms w.r.t. . The first row shows the surface area and the second row shows the color elastica. Both terms have larger values on images with heavier noise (larger ) for most choices of , which justifies the effectiveness of the proposed model (8). In Figure 13, as SD gets larger, the surface area increases faster with smaller while the color elastica increases faster with larger . To make both terms effective, should not be too large or too small.

We then repeat these experiments on Poisson noise in which the noise level is controlled by , the number of photons. Images have better quality with larger . The behavior of the surface area and energy w.r.t. with different is shown in Figure 14. Similar to the behavior for Gaussian noise, both terms have larger values with heavier noise (smaller ). As gets larger, the surface area has larger slope while the color elastica has smaller slope, which again implies that should not be too large or too small to make both terms effective.

The above observation shows that the surface area is more effective with smaller . However, even if our model only contains the surface area term (i.e., ), we face the smoothness problem of edges if is too small. In Figure 15, we use the proposed model with and various to denoise the orange ball which is contaminated by Poisson noise with . The noisy image, denoised image with and are shown in (a)-(f), respectively. Since the scale of surface area changes as varies, we need to set different for each . In our experiments, are used as varies from to . As decreases, the edge of the orange ball in the denoised image becomes more oscillating. The cause of this observation may come from the model itself or the numerical algorithm, and is to be studied in the future.

6.4 Effect of

We fix and explore the effect of on our model. We use the image of crystal cube (shown in Figure 16(a)) with Gaussian noise with . We test our model with and . The noisy and denoised images are shown in Figure 16. When , model (8) reduces to the Polyakov action model and there are perturbations in the flat region of the denoised image, see Figure 16(c). As gets larger, the flat part of the denoised image becomes smoother while the edges are kept. Similar to the elastica model for gray-scale images, the color elastica term helps smoothing the flat region. In Figure 17 we compare the surface plot of the zoomed region of the denoised image with and . The rendered surface of the result with is indeed much smoother. The evolution of the energy are shown in the third row of Figure 16. The color elastica term helps the energy to achieve the minimum faster. With non-zero , the energy of all experiments achieve the minimum within 80 iterations, while with , it takes nearly 150 iterations for the energy to achieve its minimum.

| (a) | (b) | ||

|

|

||

| (c) | (d) | (e) | (f) |

|

|

|

|

|

|

|

|

|

|

|

|

| (a) | (b) | (c) |

|---|---|---|

|

|

|

|

|

|

6.5 An example on image deblurring

To conclude this section, We test the proposed model on image deblurring by minimizing (47). The clean images are blurred by motion kernel and then contaminated by noise. In our algorithm, is used. The clean images, blurred images and deblurred images are shown in Figure 18. The proposed model preserves color well.

7 Conclusion

We proposed a color elastica model (8), which incorporates the Polyakov action and the squared magnitude of mean curvature of the image treated as a two dimensional manifold in spatial-color space, to regularize color images. The proposed model is a geometric extension of the elastica model (2): when applied to gray-scale images, it reduces to a variant of the Euler elastica model. We also proposed an operator-splitting method to solve (8). The proposed method is efficient and robust with respect to parameter choices. The effectiveness of the proposed model and the efficiency of the numerical method are demonstrated by several experiments in color image regularization. The mean curvature term helps to better smooth noisy almost uniform (yet with some color changes) regions in images while keeping the edges sharp.

Acknowledgment

The authors would like to thank the anonymous reviewers of this article for helpful comments and suggestions.

References

- [1] E. Bae, J. Shi, and X.-C. Tai, Graph cuts for curvature based image denoising, IEEE Transactions on Image Processing, 20 (2010), pp. 1199–1210.

- [2] E. Bae, X.-C. Tai, and W. Zhu, Augmented Lagrangian method for an Euler’s elastica based segmentation model that promotes convex contours, Inverse Problems & Imaging, 11 (2017), pp. 1–23.

- [3] L. Bar, A. Brook, N. Sochen, and N. Kiryati, Deblurring of color images corrupted by impulsive noise, IEEE Transactions on Image Processing, 16 (2007), pp. 1101–1111.

- [4] T. Batard and M. Bertalmío, On covariant derivatives and their applications to image regularization, SIAM Journal on Imaging Sciences, 7 (2014), pp. 2393–2422.

- [5] P. Blomgren and T. F. Chan, Color TV: total variation methods for restoration of vector-valued images, IEEE Transactions on Image Processing, 7 (1998), pp. 304–309.

- [6] X. Bresson, P. Vandergheynst, and J.-P. Thiran, Multiscale active contours, International Journal of Computer Vision, 70 (2006), pp. 197–211.

- [7] A. J. Chorin, T. J. Hughes, M. F. McCracken, and J. E. Marsden, Product formulas and numerical algorithms, Communications on Pure and Applied Mathematics, 31 (1978), pp. 205–256.

- [8] K. Dabov, A. Foi, V. Katkovnik, and K. Egiazarian, Image denoising by sparse 3-D transform-domain collaborative filtering, IEEE Transactions on Image Processing, 16 (2007), pp. 2080–2095.

- [9] L.-J. Deng, R. Glowinski, and X.-C. Tai, A new operator splitting method for the Euler elastica model for image smoothing, SIAM Journal on Imaging Sciences, 12 (2019), pp. 1190–1230.

- [10] S. Di Zenzo, A note on the gradient of a multi-image, Computer Vision, Graphics, and Image Processing, 33 (1986), pp. 116–125.

- [11] Y. Duan, W. Huang, J. Zhou, H. Chang, and T. Zeng, A two-stage image segmentation method using Euler’s elastica regularized Mumford-Shah model, in 2014 22nd International Conference on Pattern Recognition, IEEE, 2014, pp. 118–123.

- [12] Y. Duan, Y. Wang, X.-C. Tai, and J. Hahn, A fast augmented lagrangian method for Euler’s elastica model, in International Conference on Scale Space and Variational Methods in Computer Vision, Springer, 2011, pp. 144–156.

- [13] S. Esedoḡlu and R. March, Segmentation with depth but without detecting junctions, Journal of Mathematical Imaging and Vision, 18 (2003), pp. 7–15.

- [14] R. Glowinski, Finite element methods for incompressible viscous flow, Handbook of Numerical Analysis, 9 (2003), pp. 3–1176.

- [15] R. Glowinski, S. Leung, H. Liu, and J. Qian, On the numerical solution of nonlinear eigenvalue problems for the Monge-Ampère operator, ESAIM: Control, Optimisation and Calculus of Variations, 26 (2020), p. 118.

- [16] R. Glowinski, H. Liu, S. Leung, and J. Qian, A finite element/operator-splitting method for the numerical solution of the two dimensional elliptic Monge–Ampère equation, Journal of Scientific Computing, 79 (2019), pp. 1–47.

- [17] R. Glowinski, S. Luo, and X.-C. Tai, Fast operator-splitting algorithms for variational imaging models: Some recent developments, in Handbook of Numerical Analysis, vol. 20, Elsevier, 2019, pp. 191–232.

- [18] R. Glowinski, S. J. Osher, and W. Yin, Splitting methods in communication, imaging, science, and engineering, Springer, 2017.

- [19] R. Glowinski, T.-W. Pan, and X.-C. Tai, Some facts about operator-splitting and alternating direction methods, Splitting Methods in Communication, Imaging, Science, and Engineering, (2017), pp. 19–94.

- [20] B. Goldluecke, E. Strekalovskiy, and D. Cremers, The natural vectorial total variation which arises from geometric measure theory, SIAM Journal on Imaging Sciences, 5 (2012), pp. 537–563.

- [21] V. Grimm, R. I. McLachlan, D. I. McLaren, G. Quispel, and C. Schönlieb, Discrete gradient methods for solving variational image regularisation models, Journal of Physics A: Mathematical and Theoretical, 50 (2017), p. 295201.

- [22] Y. He, S. H. Kang, and H. Liu, Curvature regularized surface reconstruction from point cloud, arXiv preprint arXiv:2001.07884, (2020).

- [23] R. Kimmel, N. Sochen, and R. Malladi, From high energy physics to low level vision, in International Conference on Scale-Space Theories in Computer Vision, Springer, 1997, pp. 236–247.

- [24] E. Kobler, A. Effland, K. Kunisch, and T. Pock, Total deep variation: A stable regularizer for inverse problems, arXiv preprint arXiv:2006.08789, (2020).

- [25] S. Lefkimmiatis, Non-local color image denoising with convolutional neural networks, in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2017, pp. 3587–3596.

- [26] H. Liu, R. Glowinski, S. Leung, and J. Qian, A finite element/operator-splitting method for the numerical solution of the three dimensional Monge–Ampère equation, Journal of Scientific Computing, 81 (2019), pp. 2271–2302.

- [27] M. Nitzberg, D. Mumford, and T. Shiota, Filtering, Segmentation and Depth, vol. 662, Springer, 1993.

- [28] A. M. Polyakov, Quantum geometry of bosonic strings, in Supergravities in Diverse Dimensions: Commentary and Reprints (In 2 Volumes), World Scientific, 1989, pp. 1197–1200.

- [29] G. Rosman, L. Dascal, A. Sidi, and R. Kimmel, Efficient Beltrami image filtering via vector extrapolation methods, SIAM Journal on Imaging Sciences, 2 (2009), pp. 858–878.

- [30] G. Rosman, L. Dascal, X.-C. Tai, and R. Kimmel, On semi-implicit splitting schemes for the Beltrami color image filtering, Journal of Mathematical Imaging and Vision, 40 (2011), pp. 199–213.

- [31] G. Rosman, X.-C. Tai, L. Dascal, and R. Kimmel, Polyakov action minimization for efficient color image processing, in European Conference on Computer Vision, Springer, 2010, pp. 50–61.

- [32] A. Roussos and P. Maragos, Tensor-based image diffusions derived from generalizations of the total variation and beltrami functionals, in 2010 IEEE International Conference on Image Processing, IEEE, 2010, pp. 4141–4144.

- [33] G. Sapiro, Vector-valued active contours, in Proceedings CVPR IEEE Computer Society Conference on Computer Vision and Pattern Recognition, IEEE, 1996, pp. 680–685.

- [34] G. Sapiro and D. L. Ringach, Anisotropic diffusion of multivalued images with applications to color filtering, IEEE Transactions on Image Processing, 5 (1996), pp. 1582–1586.

- [35] J. Shen, S. H. Kang, and T. F. Chan, Euler’s elastica and curvature-based inpainting, SIAM journal on Applied Mathematics, 63 (2003), pp. 564–592.

- [36] N. Sochen, R. Kimmel, and R. Malladi, A general framework for low level vision, IEEE Transactions on Image Processing, 7 (1998), pp. 310–318.

- [37] A. Spira, R. Kimmel, and N. Sochen, A short-time Beltrami kernel for smoothing images and manifolds, IEEE Transactions on Image Processing, 16 (2007), pp. 1628–1636.

- [38] X.-C. Tai, J. Hahn, and G. J. Chung, A fast algorithm for Euler’s elastica model using augmented Lagrangian method, SIAM Journal on Imaging Sciences, 4 (2011), pp. 313–344.

- [39] L. Tan, W. Liu, and Z. Pan, Color image restoration and inpainting via multi-channel total curvature, Applied Mathematical Modelling, 61 (2018), pp. 280–299.

- [40] D. Tschumperle and R. Deriche, Vector-valued image regularization with PDEs: A common framework for different applications, IEEE Transactions on Pattern Analysis and Machine Intelligence, 27 (2005), pp. 506–517.

- [41] Z. Wang, A. C. Bovik, H. R. Sheikh, and E. P. Simoncelli, Image quality assessment: from error visibility to structural similarity, IEEE transactions on image processing, 13 (2004), pp. 600–612.

- [42] Z. Wang, J. Zhu, F. Yan, and M. Xie, Fidelity-Beltrami-sparsity model for inverse problems in multichannel image processing, SIAM Journal on Imaging Sciences, 6 (2013), pp. 2685–2713.

- [43] A. Wetzler and R. Kimmel, Efficient beltrami flow in patch-space, in International Conference on Scale Space and Variational Methods in Computer Vision, Springer, 2011, pp. 134–143.

- [44] D. Yang and J. Sun, BM3D-Net: A convolutional neural network for transform-domain collaborative filtering, IEEE Signal Processing Letters, 25 (2017), pp. 55–59.

- [45] M. Yashtini and S. H. Kang, A fast relaxed normal two split method and an effective weighted TV approach for Euler’s elastica image inpainting, SIAM Journal on Imaging Sciences, 9 (2016), pp. 1552–1581.

- [46] J. Zhang and K. Chen, A new augmented Lagrangian primal dual algorithm for elastica regularization, Journal of Algorithms & Computational Technology, 10 (2016), pp. 325–338.

- [47] J. Zhang, R. Chen, C. Deng, and S. Wang, Fast linearized augmented Lagrangian method for Euler’s elastica model, Numerical Mathematics: Theory, Methods and Applications, 10 (2017), pp. 98–115.

- [48] W. Zhu, T. Chan, and S. Esedoḡlu, Segmentation with depth: A level set approach, SIAM Journal on Scientific Computing, 28 (2006), pp. 1957–1973.

- [49] W. Zhu, X.-C. Tai, and T. Chan, Image segmentation using Euler’s elastica as the regularization, Journal of Scientific Computing, 57 (2013), pp. 414–438.

- [50] D. Zosso and A. Bustin, A primal-dual projected gradient algorithm for efficient Beltrami regularization, Computer Vision and Image Understanding, (2014), pp. 14–52.