Chongqing University of Posts and Telecommunications, Chongqing 400065, People’s Republic of China


A Causal Disentangled Multi-Granularity Graph Classification Method

Abstract

Graph data is widespread in real life, large in volume, and complex in structure, so it is necessary to map graph data to low-dimensional embeddings. Graph classification, a critical graph task, mainly relies on identifying the important substructures within a graph. At present, some graph classification methods do not account for the multi-granularity characteristics of graph data. This lack of granularity distinction in modeling conflates key information with false correlations within the model, which makes it challenging to obtain a credible and interpretable model. This paper proposes a causal disentangled multi-granularity graph representation learning method (CDM-GNN) to address this challenge. The CDM-GNN model disentangles the important substructures and bias parts within the graph from a multi-granularity perspective. This disentanglement reveals the important and bias parts, which form the basis of both the classification task and the model interpretations. The CDM-GNN model exhibits strong classification performance and generates explanatory outcomes that align with human cognitive patterns. To verify the effectiveness of the model, this paper conducts experiments on three real-world datasets: MUTAG, PTC, and IMDB-M. Six state-of-the-art models, namely GCN, GAT, Top-k, ASAPool, SUGAR, and SAT, are used for comparison. Additionally, a qualitative analysis of the interpretation results is conducted.

Keywords: Multi-granularity, Interpretability, Explainable AI, Causal disentanglement, Graph classification

1 Introduction

Graph data, characterized by complex structures and large volumes, is prevalent in daily life. Therefore, it is crucial to map graph data into low-dimensional embeddings. Among the various downstream graph tasks, graph classification is an essential one. Examples include superpixel graph classification [8] and molecular graph property prediction [10].

In research on graph classification, representation learning is an important approach for data analysis. Graph data inherently possesses multiple levels of granularity: nodes at the fine-grained level and substructures at the coarser-grained level. For example, in the Tox21 dataset, atoms are fine-grained and functional groups are coarse-grained. In this dataset, compounds containing the azo functional group are associated with carcinogenic and mutagenic properties [5]. In graph classification, the outcome is primarily determined by certain important substructures [13]. However, traditional representation learning methods overlook the multi-granularity nature of graph data, and their interpretability is limited. This lack of granularity differentiation during modeling mixes critical information with false correlations within the models. As a result, it becomes difficult to tell the two apart and to build interpretable models.

In summary, there is a need to construct a substructure recognition model that takes into account multi-granularity graph data for modeling the graph representations. Therefore, this paper attempts to build a causal disentangled GNN model based on the idea of multi-granularity [21]. This model can disentangle the important substructures and bias parts in the graph from the multi-granularity perspective, and then conduct representation learning and classification for the entire graph.

Specifically, this paper designs a causal disentangled multi-granularity graph representation learning method (CDM-GNN). First, from a fine-grained perspective, it uses the feature and topological information of nodes to build a mask describing the closeness between nodes. Next, from a coarser-grained perspective, CDM-GNN uses this mask to disentangle the important substructures from the bias parts. In doing so, it obtains the reason for learning the current representation, which serves as the interpretable result. Subsequently, the masked graph is input into each slice layer to disentangle the key substructures from the false associations. As the depth of the model increases, the model gradually transitions from fine-grained to coarse-grained and takes in more global information. Finally, the model learns an adaptive weight for the result of each slice layer and fuses the layer results accordingly to obtain the final representation for graph classification.

The main contributions of this work are summarized as follows:

1. The CDM-GNN considers the multi-granularity characteristics of graph data. It models through granularity transformation, fully taking into account the information at different granularities and their fusion.

2. The proposed model is capable of disentangling the key substructures and the bias parts in the graph while providing corresponding explanations.

3. Compared with six state-of-the-art models, namely GCN, GAT, Top-k, ASAPool, SUGAR, and SAT, on MUTAG, PTC, and IMDB-M, the CDM-GNN model achieves better graph classification results.

2 Related Work

2.1 Graph classification representation learning

To obtain continuous low-dimensional embedding, graph representation learning aims to map non-Euclidean data into a low-dimensional representation space. For graph classification, there are two categories of research methods. One category is similarity-based graph classification methods, including graph kernel methods and graph matching methods. However, these methods are often inflexible and computationally expensive. In these methods, the process of graph feature extraction and graph classification is independent, which limits optimization for specific tasks. The other category is based on GNNs. When applied to graph classification problems, GCN [11] and GAT [20] perform graph classification through convolution and pooling operations. Pooling is the process of graph coarsening, where the operation progressively aggregates fine-grained nodes. Subsequent research has also introduced changes to the pooling operation. For example, SAT [2] proposes a graph transformer method used in pooling.

2.2 Disentangled learning

The idea of disentangling originated with Bengio et al. [1] and has primarily been studied in computer vision [9]. However, some researchers have extended this concept to graphs. The DisenGCN model [15] introduces a neighborhood routing mechanism to disentangle the various latent factors behind interactions in the graph. The IPGDN model [12], based on DisenGCN, adds the Hilbert-Schmidt Independence Criterion (HSIC) to further enhance the independence between different modules. Based on the routing mechanism, the authors of [22] model the user-item relationship at the granularity of user intent and disentangle these intents in the representations of users and items. However, this method mainly focuses on bipartite graphs, may not be suitable for more complex graph structures, and lacks scalability. In the context of knowledge graphs with richer types of relationships, some works leverage relationship information in the process of disentangled representation learning. For example, they guide the disentangled representation of entity nodes based on the semantics of relationships [24]. However, these methods overlook the information from different types of relationship edges. These studies have successfully achieved disentanglement, but their primary emphasis is on manipulating the intermediate hidden layer states, which makes it hard to comprehend the structure of the graph. Consequently, their interpretability from a human perspective is limited.

2.3 Interpretability method

Despite the excellent representation capability of GNN models, their learning process is often opaque and difficult for humans to understand. To address this issue, some researchers have proposed post-hoc explanation methods. The GNNExplainer model [23] learns masks for the adjacency matrix and node features to identify important substructures. The PGExplainer model [14] learns an MLP to mask edges in the graph and incorporates sparsity and continuity constraints to obtain the final explanations. Post-hoc methods can only help to understand a trained model; they cannot adjust it. Another category of GNN explanation methods constructs self-explainable GNNs. Compared with post-hoc methods, such a model not only provides predictions but also explains the reasons behind them, and the explanations can guide the model to some extent. Some self-explainable models require prior knowledge. For example, KerGNNs [6] is a subgraph-based node aggregation algorithm that manually constructs graph kernel functions to compare the similarity between graph filters and input subgraphs. The trained graph filters are also visualized and used as the model’s explanation, which is then integrated into the GNN. In addition to explaining homogeneous GNN models, researchers have also explored self-explainable models for heterogeneous GNN models, such as Knowledge Router [3]. However, these self-explainable GNNs have not extensively considered the multi-granularity structure of graphs.

3 Preliminaries

3.1 Notations

Let $G = (V, E)$ be a graph, where $V$ is the node set and $E$ is the edge set. $A \in \{0, 1\}^{n \times n}$ is defined as the adjacency matrix of graph $G$, with $n = |V|$. If there is an edge between node $v_i$ and node $v_j$, then $A_{ij} = 1$; otherwise, $A_{ij} = 0$. $X \in \mathbb{R}^{n \times d}$ is defined as the feature matrix. Its $i$-th row $x_i$ represents the features of node $v_i$, where $d$ denotes the feature dimension of each node. The neighbour set of node $v_i$ is $N(i)$. The true label set is $Y$.

3.2 A Causal View on GNNs

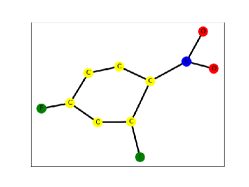

We analyze this problem using the Structural Causal Model (SCM). Figure 1 illustrates the five components. Z: input graph data, Y: labels, B: bias part in the graph, C: important substructures, and E: learned embedding by GNN model.

: Z is composed of B and C.

: The structure of C is learned through GNNs and represented as E. Then, establish a causal correlation between C and Y.

: Due to the confusion between B and C, it affects the representation E obtained from GNNs, which also impacts the prediction of Y. Consequently, a spurious correlation is formed, leading to misleading predictions.

4 Proposed Method

This paper employs a multi-granularity approach for modeling. The CDM-GNN is introduced with an overall framework illustrated in Figure 2.

4.1 Fine-grained closeness mask

Based on the multi-granularity characteristics of graph data, this paper first considers modeling the nodes at a fine-grained level to capture the closeness between nodes, forming a mask matrix.

Given a graph, the attention value between each pair of nodes is calculated from a fine-grained perspective, based on the feature similarity between the two nodes.

| (1) |

where is the learnable parameters. Then the calculated attention values are normalized.

| (2) |

At the fine-grained level, we also calculate the interaction between the structures of each pair of nodes and use it as the attention value of the topological structure, which describes the relationship between the nodes.

| (3) |

Here, we use a random walk with restart to describe the degree of structural similarity between a center node and the other nodes. A particle starts from the center node and walks randomly to its neighbors. At each step, there is a certain probability of returning to the center node. After a number of iterations, the probability vector of visiting the neighbors around the center node is obtained.

| (4) |

where and is the degree matrix. is the probability of restarting the random walk. is a one-hot vector where the center node is assigned 1. Others are assigned 0.

The probability vector is proportional to the edge weights: the higher the probability, the larger the edge weight. This probability vector is therefore used as the weight vector. As the number of iterations tends to infinity, the vector converges to the following equation:

| (5) |
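As a rough illustration of the random walk with restart described above, the sketch below iterates the walk on a toy path graph. The update rule `p <- (1 - restart) * P p + restart * e`, the column-normalised transition matrix `P`, the restart probability of 0.15, and the helper name `rwr_scores` are assumptions made for illustration; they are not taken from the paper's Equations (4) and (5).

```python
def rwr_scores(adj, center, restart=0.15, iters=200):
    """Random walk with restart from `center` on an undirected graph.

    adj is a symmetric 0/1 adjacency matrix given as a list of lists.
    Assumed update: p <- (1 - restart) * P p + restart * e, where
    P[j][k] = adj[j][k] / degree(k) and e is one-hot at the center node.
    """
    n = len(adj)
    deg = [sum(adj[k]) for k in range(n)]
    e = [1.0 if k == center else 0.0 for k in range(n)]
    p = e[:]
    for _ in range(iters):
        nxt = []
        for j in range(n):
            # Probability mass arriving at node j from its neighbours.
            walk = sum(adj[j][k] * p[k] / deg[k] for k in range(n) if deg[k] > 0)
            nxt.append((1.0 - restart) * walk + restart * e[j])
        p = nxt
    return p


# Toy path graph 0 - 1 - 2 - 3, walking from node 0.
adj = [
    [0, 1, 0, 0],
    [1, 0, 1, 0],
    [0, 1, 0, 1],
    [0, 0, 1, 0],
]
scores = rwr_scores(adj, center=0)
```

With this setup the visiting probabilities sum to one and decrease along the path away from the direct neighbour of the center node, which is the behaviour a structural closeness weight is expected to have.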

In Equation (3), represents the weight of node (). After normalizing , we obtain the following expression:

| (6) |

We integrate and to obtain , resulting in the formation of matrix :

| (7) |

At the fine-grained level, this paper describes the closeness between nodes in terms of both their features and their structure, and fuses the two to form a mask. The part selected by the mask is the important substructure, while the rest forms the bias parts.
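The fusion of feature closeness and structural closeness into one mask can be sketched as follows. This is only a minimal illustration under stated assumptions: the feature attention is taken to be a softmax over each node's neighbours of a plain dot-product similarity, the structural scores are supplied as a precomputed matrix, and the two are combined by a convex combination with a hypothetical coefficient `beta`. None of these choices is confirmed by the paper's Equations (1) to (7).

```python
import math


def neighbour_softmax(scores, adj):
    """Row-wise softmax restricted to existing edges (adj[i][j] == 1)."""
    n = len(adj)
    out = [[0.0] * n for _ in range(n)]
    for i in range(n):
        nbrs = [j for j in range(n) if adj[i][j]]
        if not nbrs:
            continue
        top = max(scores[i][j] for j in nbrs)
        exps = {j: math.exp(scores[i][j] - top) for j in nbrs}
        total = sum(exps.values())
        for j in nbrs:
            out[i][j] = exps[j] / total
    return out


def closeness_mask(features, struct_scores, adj, beta=0.5):
    """Fuse feature attention and structural attention into one edge mask.

    beta is a hypothetical mixing coefficient, not a parameter from the paper.
    """
    n = len(adj)
    sim = [[sum(a * b for a, b in zip(features[i], features[j]))
            for j in range(n)] for i in range(n)]
    feat_att = neighbour_softmax(sim, adj)
    topo_att = neighbour_softmax(struct_scores, adj)
    return [[beta * feat_att[i][j] + (1.0 - beta) * topo_att[i][j]
             for j in range(n)] for i in range(n)]


# Triangle 0-1-2 with a pendant node 3 attached to node 2.
adj = [
    [0, 1, 1, 0],
    [1, 0, 1, 0],
    [1, 1, 0, 1],
    [0, 0, 1, 0],
]
features = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]
struct_scores = [[0.0] * 4 for _ in range(4)]  # equal structural scores
mask = closeness_mask(features, struct_scores, adj)
```

In this toy graph node 0 shares its features with node 1 but not with node 2, so the mask weight on edge (0, 1) is larger than on edge (0, 2), while non-edges keep a weight of zero.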

4.2 Coarse-grained disentangled framework

Firstly, at a fine-grained level, the features are subjected to a simple feature transformation using the function with the learnable parameters .

| (8) |

where the is a multi-layer neural network with .

Next, the transformed features, fine-grained closeness mask, and adjacency matrices of different orders are sent into slice layers. The modeling process starts with the important substructures.

In the important substructures, the matrix is constructed in each slice GNN layer following the approach described in the previous section. In the first layer, and are used, in the second layer, the and are used, and in the third layer, the and are used. These matrices are then inputted into their corresponding slice layers to obtain the hidden layer states , , and , and ,, and are learnable parameters.

| (9) |

The CDM-GNN model stacks the transformed feature results and obtained hidden layer states:

| (10) |

This model adaptively learns the weight for each slice layer:

| (11) |

| (12) |

For each graph, this model performs pooling on the obtained embedding to obtain the representation of the graph:

| (13) |
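The pipeline above (slice layers over adjacency matrices of increasing order, stacking, adaptive weighting, and pooling) can be sketched in a few lines. The concrete forms used here are assumptions for illustration only: each slice layer is written as `ReLU((mask * A^k) H W)` and applied to the transformed features, the adaptive weights are a softmax over one scalar logit per layer, and pooling is a mean over nodes. The helper names `slice_layer` and `fuse_and_pool` are hypothetical.

```python
import math


def matmul(a, b):
    """Plain matrix product of two list-of-lists matrices."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def slice_layer(mask, adj_power, h, weight):
    """One assumed slice layer: ReLU((mask * adj_power) @ h @ weight)."""
    masked = [[m * a for m, a in zip(mr, ar)] for mr, ar in zip(mask, adj_power)]
    z = matmul(matmul(masked, h), weight)
    return [[max(0.0, v) for v in row] for row in z]


def fuse_and_pool(layer_states, layer_logits):
    """Softmax-weight the stacked layer states, sum them, then mean-pool nodes."""
    top = max(layer_logits)
    exps = [math.exp(l - top) for l in layer_logits]
    total = sum(exps)
    weights = [e / total for e in exps]
    n, d = len(layer_states[0]), len(layer_states[0][0])
    fused = [[sum(w * s[i][k] for w, s in zip(weights, layer_states))
              for k in range(d)] for i in range(n)]
    graph_repr = [sum(fused[i][k] for i in range(n)) / n for k in range(d)]
    return weights, graph_repr


# Path graph 0 - 1 - 2 with an all-ones mask and identity layer weights.
adj = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
adj2 = matmul(adj, adj)
adj3 = matmul(adj2, adj)
mask = [[1.0] * 3 for _ in range(3)]
h0 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
eye = [[1.0, 0.0], [0.0, 1.0]]

states = [h0] + [slice_layer(mask, p, h0, eye) for p in (adj, adj2, adj3)]
weights, graph_repr = fuse_and_pool(states, [0.0, 0.0, 0.0, 0.0])
```

Higher powers of the adjacency matrix reach nodes that are further apart, which is one way to read the transition from fine-grained to coarse-grained information; in a trained model the layer logits would be learned rather than fixed to zero.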

For bias parts, the CDM-GNN obtains the status of hidden layer , , and . In this model, we share these learnable parameters ,, and .

| (14) |

Similarly, this model stacks the transformed feature results and obtained hidden layer states:

| (15) |

Then, the CDM-GNN model adaptively learns the weight of each slice layer and obtains the final embedding of the bias parts:

| (16) |

| (17) |

| (18) |

4.3 Causal disentangled learning

This paper aims to train the model on the causal components of the graph representations so that the CDM-GNN model classifies graphs correctly. To achieve this, CDM-GNN employs the supervised cross-entropy classification loss as follows:

| (19) |

| (20) |

The representation of the bias parts should not affect the classification results. Therefore, the predictions made from the bias parts should be evenly distributed across all categories. A uniform distribution is used to guide the learning of the bias representation as follows:

| (21) |

where means the KL-Divergence, denotes the uniform distribution.
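To make the uniform-distribution constraint concrete, the sketch below computes a KL divergence between the uniform distribution and the class probabilities predicted from a bias representation. The direction of the divergence, KL(uniform || prediction), and the helper name `kl_to_uniform` are assumptions; the paper's Equation (21) may be defined differently.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of class logits."""
    top = max(logits)
    exps = [math.exp(x - top) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def kl_to_uniform(logits):
    """KL(uniform || softmax(logits)); zero only for a uniform prediction."""
    probs = softmax(logits)
    u = 1.0 / len(probs)
    return sum(u * math.log(u / p) for p in probs)


flat_loss = kl_to_uniform([0.0, 0.0, 0.0])    # bias prediction is uninformative
peaked_loss = kl_to_uniform([5.0, 0.0, 0.0])  # bias prediction favours one class
```

A bias branch that predicts every class with equal probability incurs no penalty, while one that leans towards a class is penalised, which pushes label information out of the bias representation.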

In order to reduce the interference of bias parts, the representation of bias parts is recombined with the causal parts to construct intervention terms. The CDM-GNN redefines the function. It adds the bias representation of random disturbance back to the corresponding positions of the causal part representation to obtain the constructed intervention term :

| (22) |

Under this intervention, the CDM-GNN should still obtain the correct classification results from the representation of the causal part. The loss function is as follows:

| (23) |

| (24) |
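One plausible reading of the intervention step is sketched below: each causal representation in a batch is paired with a randomly chosen bias representation from the same batch, and the two are added element-wise. The batch-level shuffling, the element-wise addition, and the helper name `intervene` are assumptions for illustration and are not confirmed by the paper's Equation (22).

```python
import random


def intervene(causal_reprs, bias_reprs, rng):
    """Add a randomly permuted bias representation to each causal representation.

    rng is a random.Random instance, passed in so the shuffle is reproducible.
    """
    perm = list(range(len(bias_reprs)))
    rng.shuffle(perm)
    return [
        [c + b for c, b in zip(causal_reprs[i], bias_reprs[perm[i]])]
        for i in range(len(causal_reprs))
    ]


causal = [[1.0, 0.0], [0.0, 1.0], [2.0, 2.0]]
bias = [[0.5, 0.5], [1.0, -1.0], [0.0, 3.0]]
mixed = intervene(causal, bias, random.Random(0))
```

Each intervened representation keeps the label of its causal part, so a classifier trained on `mixed` is encouraged to predict from the causal part regardless of which bias part it is combined with.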

The overall loss function is as follows:

| (25) |

where , , and are hyper-parameters that determine the strength of disentanglement and causal influences.

5 Experiment

5.1 Datasets

The commonly-used datasets for graph classification are summarized in Table 1.

- MUTAG [4]: This dataset contains 188 compounds labeled according to whether they have a mutagenic effect on a bacterium.

- PTC [19]: This dataset contains 344 organic molecules labeled according to their carcinogenicity on male mice.

- IMDB-M [17]: This dataset is a movie collaboration dataset in which each ego-network is labeled according to the genre it belongs to (romance, action, and science).

| Dataset | Graphs | Classes | Avg. Nodes | Avg. Edges |
|---|---|---|---|---|
| MUTAG | 188 | 2 | 17.93 | 19.79 |
| PTC | 344 | 2 | 14.29 | 14.69 |
| IMDB-M | 1500 | 3 | 13.00 | 65.94 |

5.2 Baselines

This paper compares the proposed CDM-GNN model with several state-of-the-art methods, which are summarized as follows:

- GCN [11]: It is a semi-supervised graph convolution network model for graph embedding.

- GAT [20]: It is a graph neural network model that employs an attention mechanism to obtain graph representations.

- Top-K [7]: It is a graph representation method that adaptively selects some critical nodes to form smaller subgraphs based on their importance vectors.

- ASAPool [16]: It is a graph neural network model that uses attention mechanisms to capture the importance of nodes and pools subgraphs into a coarse graph through learnable sparse soft clustering allocation for graph representation.

- SUGAR [18]: It is a graph representation method that first samples some subgraphs and then uses the DQN algorithm to select the top-k key subgraphs as representative abstractions of the entire graph.

- SAT [2]: It is a graph transformer method that incorporates the structure explicitly. Before calculating attention, it fuses structural information into the original self-attention by extracting k-hop subgraphs or k-subtrees on each node.

5.3 Performance on Real-world Graphs

In the graph classification task, this paper adopts accuracy as the evaluation metric to measure the performance of different models.

From Table 2, it can be observed that the proposed CDM-GNN model achieves the best graph classification accuracy on the MUTAG, PTC, and IMDB-M datasets. On MUTAG, CDM-GNN improves on GCN and GAT by about 5 percentage points (5.5 and 4.8, respectively). Compared with Top-K and ASAPool, which are specifically designed for pooling, the improvements are 21.8 and 12.6 percentage points. Compared with SUGAR and SAT, which focus on substructure extraction, the improvements are 8.1 and 4.4 percentage points. On PTC, CDM-GNN also achieves the highest accuracy (0.6154), 0.8 percentage points above the strongest baseline, SAT (0.6070). On the multi-class IMDB-M dataset, CDM-GNN improves on GCN and GAT by 6.5 and 5.4 percentage points. Compared with the pooling methods Top-K and ASAPool, the improvements are 15.1 and 5.5 percentage points, and compared with the substructure-based methods SUGAR and SAT they are 3.6 and 10.2 percentage points. These results show that CDM-GNN outperforms both general-purpose GNN models and approaches specialized for pooling or subgraph analysis on all three datasets.

| Models | MUTAG | PTC | IMDB-M |
|---|---|---|---|
| GCN | 0.8924 | 0.5726 | 0.5700 |
| GAT | 0.8994 | 0.5944 | 0.5810 |
| Top-K Pool | 0.7291 | 0.5721 | 0.4836 |
| ASAPool | 0.8211 | 0.5677 | 0.5794 |
| SUGAR | 0.8660 | 0.5821 | 0.5988 |
| SAT | 0.9030 | 0.6070 | 0.5329 |
| Ours | 0.9474 | 0.6154 | 0.6348 |
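The margins between CDM-GNN and each baseline can be recomputed directly from the accuracies in Table 2. The short script below does this in percentage points; the dictionary simply transcribes the table, and the helper name `gains_over_baselines` is introduced here for illustration.

```python
# Accuracies transcribed from Table 2.
accuracy = {
    "GCN": {"MUTAG": 0.8924, "PTC": 0.5726, "IMDB-M": 0.5700},
    "GAT": {"MUTAG": 0.8994, "PTC": 0.5944, "IMDB-M": 0.5810},
    "Top-K Pool": {"MUTAG": 0.7291, "PTC": 0.5721, "IMDB-M": 0.4836},
    "ASAPool": {"MUTAG": 0.8211, "PTC": 0.5677, "IMDB-M": 0.5794},
    "SUGAR": {"MUTAG": 0.8660, "PTC": 0.5821, "IMDB-M": 0.5988},
    "SAT": {"MUTAG": 0.9030, "PTC": 0.6070, "IMDB-M": 0.5329},
    "Ours": {"MUTAG": 0.9474, "PTC": 0.6154, "IMDB-M": 0.6348},
}


def gains_over_baselines(table, ours="Ours"):
    """Return the accuracy gain of `ours` over each baseline, in percentage points."""
    return {
        model: {ds: round((table[ours][ds] - acc) * 100, 2) for ds, acc in row.items()}
        for model, row in table.items()
        if model != ours
    }


gains = gains_over_baselines(accuracy)
for model, row in gains.items():
    print(model, row)
```

Working in percentage points rather than relative percentages keeps the comparison on the same scale as the accuracies reported in the table.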

5.4 Visualization results

To demonstrate the interpretability of the CDM-GNN method, this paper conducts a qualitative analysis. According to existing chemical knowledge, whether a molecule in the MUTAG dataset has a mutagenic effect is determined by whether it contains particular known substructures. Therefore, this paper visualizes results on the MUTAG dataset to examine the interpretability of the CDM-GNN model qualitatively. If the CDM-GNN model can recognize these important substructures and disentangle them from the bias parts, this indicates that the model has better interpretability. In that case, the model obtains classification and interpretation results that are consistent with human cognition and prior knowledge.

In this study, the edges of the graph are colored based on the values of the mask, where darker colors indicate greater importance. In the visualized results, we can observe that the edges of important substructures are colored darker, indicating their higher weights and greater significance in the classification of the MUTAG dataset. The edges connecting the important substructures and the bias parts are colored lighter, indicating that the CDM-GNN model has successfully disentangled the important substructures from the bias parts. From Figure 3 (a)(b)(d)(e), it can be observed that regardless of whether the graph contains one or multiple substructures, the CDM-GNN can recognize them and successfully disentangle them from the carbon rings. Even in cases where there are multiple carbon rings in the graph, like Figure 3 (c)(f), the CDM-GNN model can still identify the important parts. This indicates the model has the ability to capture and distinguish the relevant features, enabling it to recognize and disentangle the important parts from other structural components.

5.5 Ablation experiment

Here, this paper discusses the role of three losses in learning for the CDM-GNN model. From Figure 4, It can be observed that when this model only uses the , , and , the plays a more important role in this model training. The CDM-GNN model is designed by incorporating downstream graph classification tasks. The plays a leading role in guiding this model to achieve better differentiation among different categories of graph data. This way, the CDM-GNN model focuses on the goal of graph classification and progressively improves its performance. The is mainly aimed at reducing the interference caused by the bias component. Without this loss, the classification results are the most significant decrease in accuracy. The is to consider the decoupling of causality. If the CDM-GNN model is without , it also leads to a decrease in accuracy. When three losses are used together, the model can achieve the best effect. It has better graph classification results and can separate the important substructure and the bias parts.

6 Conclusion

This paper introduces a causal disentangled multi-granularity graph representation learning method, namely CDM-GNN. It is primarily based on the idea of multi-granularity, aiming to identify important substructures and bias parts and disentangle them in the graph classification tasks. The proposed method achieves favorable classification performance and provides qualitative interpretability of the results. This technology can be extended for researching drug molecule properties and facilitating new drug development. Identifying important substructures that influence drug properties within molecules and their disentanglement can aid in exploring drug molecule properties and innovating drug development based on these substructures. This paper has not yet explored additional downstream graph tasks, such as node classification and link prediction. Future research could explore the extension of the concept of causal disentangled multi-granularity to these graph-related tasks. Subsequent research also can prioritize in-depth discussions of interpretable quantitative assessments for self-explainable models.

Acknowledgments

This work is supported by the National Natural Science Foundation of China (Nos. 62221005, 61936001, 61806031), Natural Science Foundation of Chongqing, China (Nos. cstc2019jcyj-cxttX0002, cstc2021ycjh-bgzxm0013), Project of Chongqing Municipal Education Commission, China (No. HZ2021008), and Doctoral Innovation Talent Program of Chongqing University of Posts and Telecommunications, China (Nos. BYJS202108, BYJS202209, BYJS202118).

References

- [1] Bengio, Y., Courville, A.C., Vincent, P.: Representation learning: A review and new perspectives. IEEE Trans. Pattern Anal. Mach. Intell. 35(8), 1798–1828 (2013)

- [2] Chen, D., O’Bray, L., Borgwardt, K.M.: Structure-aware transformer for graph representation learning. In: Chaudhuri, K., Jegelka, S., Song, L., Szepesvári, C., Niu, G., Sabato, S. (eds.) International Conference on Machine Learning, ICML 2022, 17-23 July 2022, Baltimore, Maryland, USA. Proceedings of Machine Learning Research, vol. 162, pp. 3469–3489. PMLR (2022)

- [3] Cucala, D.J.T., Grau, B.C., Kostylev, E.V., Motik, B.: Explainable gnn-based models over knowledge graphs. In: The Tenth International Conference on Learning Representations, ICLR 2022, Virtual Event, April 25-29, 2022. OpenReview.net (2022)

- [4] Debnath, A.K., Lopez de Compadre, R.L., Debnath, G., Shusterman, A.J., Hansch, C.: Structure-activity relationship of mutagenic aromatic and heteroaromatic nitro compounds. correlation with molecular orbital energies and hydrophobicity. Journal of medicinal chemistry 34(2), 786–797 (1991)

- [5] Fang, Y., Zhang, Q., Zhang, N., Chen, Z., Zhuang, X., Shao, X., Fan, X., Chen, H.: Knowledge graph-enhanced molecular contrastive learning with functional prompt. Nature Machine Intelligence pp. 1–12 (2023)

- [6] Feng, A., You, C., Wang, S., Tassiulas, L.: Kergnns: Interpretable graph neural networks with graph kernels. In: Thirty-Sixth AAAI Conference on Artificial Intelligence, AAAI 2022, Thirty-Fourth Conference on Innovative Applications of Artificial Intelligence, IAAI 2022, The Twelveth Symposium on Educational Advances in Artificial Intelligence, EAAI 2022 Virtual Event, February 22 - March 1, 2022. pp. 6614–6622. AAAI Press (2022)

- [7] Gao, H., Ji, S.: Graph u-nets. In: Chaudhuri, K., Salakhutdinov, R. (eds.) Proceedings of the 36th International Conference on Machine Learning, ICML 2019, 9-15 June 2019, Long Beach, California, USA. Proceedings of Machine Learning Research, vol. 97, pp. 2083–2092. PMLR (2019)

- [8] Hendrycks, D., Dietterich, T.G.: Benchmarking neural network robustness to common corruptions and perturbations. In: 7th International Conference on Learning Representations, ICLR 2019, New Orleans, LA, USA, May 6-9, 2019. OpenReview.net (2019)

- [9] Hsieh, J., Liu, B., Huang, D., Fei-Fei, L., Niebles, J.C.: Learning to decompose and disentangle representations for video prediction. In: Bengio, S., Wallach, H.M., Larochelle, H., Grauman, K., Cesa-Bianchi, N., Garnett, R. (eds.) Advances in Neural Information Processing Systems 31: Annual Conference on Neural Information Processing Systems 2018, NeurIPS 2018, December 3-8, 2018, Montréal, Canada. pp. 515–524 (2018)

- [10] Hu, W., Fey, M., Zitnik, M., Dong, Y., Ren, H., Liu, B., Catasta, M., Leskovec, J.: Open graph benchmark: Datasets for machine learning on graphs. In: Larochelle, H., Ranzato, M., Hadsell, R., Balcan, M., Lin, H. (eds.) Advances in Neural Information Processing Systems 33: Annual Conference on Neural Information Processing Systems 2020, NeurIPS 2020, December 6-12, 2020, virtual (2020)

- [11] Kipf, T.N., Welling, M.: Semi-supervised classification with graph convolutional networks. In: 5th International Conference on Learning Representations, ICLR 2017, Toulon, France, April 24-26, 2017, Conference Track Proceedings. OpenReview.net (2017)

- [12] Liu, Y., Wang, X., Wu, S., Xiao, Z.: Independence promoted graph disentangled networks. In: The Thirty-Fourth AAAI Conference on Artificial Intelligence, AAAI 2020, The Thirty-Second Innovative Applications of Artificial Intelligence Conference, IAAI 2020, The Tenth AAAI Symposium on Educational Advances in Artificial Intelligence, EAAI 2020, New York, NY, USA, February 7-12, 2020. pp. 4916–4923. AAAI Press (2020)

- [13] Lucic, A., ter Hoeve, M.A., Tolomei, G., de Rijke, M., Silvestri, F.: Cf-gnnexplainer: Counterfactual explanations for graph neural networks. In: Camps-Valls, G., Ruiz, F.J.R., Valera, I. (eds.) International Conference on Artificial Intelligence and Statistics, AISTATS 2022, 28-30 March 2022, Virtual Event. Proceedings of Machine Learning Research, vol. 151, pp. 4499–4511. PMLR (2022)

- [14] Luo, D., Cheng, W., Xu, D., Yu, W., Zong, B., Chen, H., Zhang, X.: Parameterized explainer for graph neural network. In: Larochelle, H., Ranzato, M., Hadsell, R., Balcan, M., Lin, H. (eds.) Advances in Neural Information Processing Systems 33: Annual Conference on Neural Information Processing Systems 2020, NeurIPS 2020, December 6-12, 2020, virtual (2020)

- [15] Ma, J., Cui, P., Kuang, K., Wang, X., Zhu, W.: Disentangled graph convolutional networks. In: Chaudhuri, K., Salakhutdinov, R. (eds.) Proceedings of the 36th International Conference on Machine Learning, ICML 2019, 9-15 June 2019, Long Beach, California, USA. Proceedings of Machine Learning Research, vol. 97, pp. 4212–4221. PMLR (2019)

- [16] Ranjan, E., Sanyal, S., Talukdar, P.P.: ASAP: adaptive structure aware pooling for learning hierarchical graph representations. In: The Thirty-Fourth AAAI Conference on Artificial Intelligence, AAAI 2020, The Thirty-Second Innovative Applications of Artificial Intelligence Conference, IAAI 2020, The Tenth AAAI Symposium on Educational Advances in Artificial Intelligence, EAAI 2020, New York, NY, USA, February 7-12, 2020. pp. 5470–5477. AAAI Press (2020)

- [17] Rossi, R.A., Ahmed, N.K.: The network data repository with interactive graph analytics and visualization. In: Bonet, B., Koenig, S. (eds.) Proceedings of the Twenty-Ninth AAAI Conference on Artificial Intelligence, January 25-30, 2015, Austin, Texas, USA. pp. 4292–4293. AAAI Press (2015)

- [18] Sun, Q., Li, J., Peng, H., Wu, J., Ning, Y., Yu, P.S., He, L.: SUGAR: subgraph neural network with reinforcement pooling and self-supervised mutual information mechanism. In: Leskovec, J., Grobelnik, M., Najork, M., Tang, J., Zia, L. (eds.) WWW ’21: The Web Conference 2021, Virtual Event / Ljubljana, Slovenia, April 19-23, 2021. pp. 2081–2091. ACM / IW3C2 (2021)

- [19] Toivonen, H., Srinivasan, A., King, R.D., Kramer, S., Helma, C.: Statistical evaluation of the predictive toxicology challenge 2000-2001. Bioinform. 19(10), 1183–1193 (2003)

- [20] Velickovic, P., Cucurull, G., Casanova, A., Romero, A., Liò, P., Bengio, Y.: Graph attention networks. In: 6th International Conference on Learning Representations, ICLR 2018, Vancouver, BC, Canada, April 30 - May 3, 2018, Conference Track Proceedings. OpenReview.net (2018)

- [21] Wang, G.: Dgcc: data-driven granular cognitive computing. Granular Computing 2(4), 343–355 (2017)

- [22] Wang, X., Jin, H., Zhang, A., He, X., Xu, T., Chua, T.: Disentangled graph collaborative filtering. In: Huang, J.X., Chang, Y., Cheng, X., Kamps, J., Murdock, V., Wen, J., Liu, Y. (eds.) Proceedings of the 43rd International ACM SIGIR conference on research and development in Information Retrieval, SIGIR 2020, Virtual Event, China, July 25-30, 2020. pp. 1001–1010. ACM (2020)

- [23] Ying, Z., Bourgeois, D., You, J., Zitnik, M., Leskovec, J.: Gnnexplainer: Generating explanations for graph neural networks. In: Wallach, H.M., Larochelle, H., Beygelzimer, A., d’Alché-Buc, F., Fox, E.B., Garnett, R. (eds.) Advances in Neural Information Processing Systems 32: Annual Conference on Neural Information Processing Systems 2019, NeurIPS 2019, December 8-14, 2019, Vancouver, BC, Canada. pp. 9240–9251 (2019)

- [24] Zhang, S., Rao, X., Tay, Y., Zhang, C.: Knowledge router: Learning disentangled representations for knowledge graphs. In: Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies. pp. 1–10 (2021)