A Causal Approach to Detecting Multivariate Time-series Anomalies and Root Causes

Abstract

Detecting anomalies and the corresponding root causes in multivariate time series plays an important role in monitoring the behaviors of various real-world systems, e.g., IT system operations or manufacturing industry. Previous anomaly detection approaches model the joint distribution without considering the underlying mechanism of multivariate time series, making them computationally hungry and hard to identify root causes. In this paper, we formulate the anomaly detection problem from a causal perspective and view anomalies as instances that do not follow the regular causal mechanism to generate the multivariate data. We then propose a causality-based framework for detecting anomalies and root causes. It first learns the causal structure from data and then infers whether an instance is an anomaly relative to the local causal mechanism whose conditional distribution can be directly estimated from data. In light of the modularity property of causal systems (the causal processes to generate different variables are irrelevant modules), the original problem is divided into a series of separate, simpler, and low-dimensional anomaly detection problems so that where an anomaly happens (root causes) can be directly identified. We evaluate our approach with both simulated and public datasets as well as a case study on real-world AIOps applications, showing its efficacy, robustness, and practical feasibility.

1 Introduction

Multivariate time series is ubiquitous in monitoring the behavior of complex systems in real-world applications, such as IT operations management, manufacturing industry and cyber security (Hundman et al., 2018; Mathur & Tippenhauer, 2016; Audibert et al., 2020). Such data includes the measurements of the monitored components, e.g., the operational KPI metrics such as CPU/Database usages in an IT system. An important task in managing these complex systems is to detect unexpected observations deviated from normal behaviors, figure out the root causes of abnormal behaviors, and notify the operators timely to resolve the underlying issues. Detecting anomalies and corresponding root causes in multivariate time series aims to accomplish this task and has been actively studied in machine learning, which automate the identification of issues and incidents for improving system availability in AIOps (AI for IT Operations) (Dang et al., 2019).

Various algorithms have been developed to detect anomalies in multivariate time series data. In general, there are two kinds of directions commonly explored, i.e., treating each dimension individually using univariate time series anomaly detection algorithms (Hamilton, 1994; Taylor & Letham, 2018; Ren et al., 2019), and treating all the dimensions as an entity using multivariate time series anomaly detection algorithms (Zong et al., 2018; Park et al., 2017; Su et al., 2019). The first direction ignores the dependencies between different time series, so it may be problematic especially when sudden changes of a certain dimension do not necessarily mean failures of the whole system, or the relations among the time series become anomalous (Zhao et al., 2020). The second direction takes the dependencies into consideration, which are more suitable for real-world applications where the overall status of a system is more concerned about than a single dimension. Recently, deep learning receives much attention in anomaly detection, e.g., DAGMM (Zong et al., 2018), LSTM-VAE (Park et al., 2017) and OmniAnomaly (Su et al., 2019), which infer dependencies between different time series and temporal patterns within one time series implicitly. Recently, Dai & Chen (2022) developed a graph-augmented normalizing flow approach that models the joint distribution via the learned DAG. However, the dependencies inferred by deep learning models do not represent the underlying process of generating the observed data and the causal relationships between time series are ignored; such methods do not provide a mechanistic understanding of anomalies and it is hard for them to identify the root causes when an anomaly occurs.

In real-world applications, root cause analysis (RCA) is traditionally treated as a module separated from anomaly detection, identifying potential root causes given the detected anomalous metrics by analyzing the dependencies between the monitored metrics (Soldani & Brogi, 2021). Because RCA requires to know which metric is anomalous, univariate (instead of multivariate) time series anomaly detection algorithms are mostly applied to detect anomalies, and then RCA analyzes system/service graphs obtained via domain knowledge or observed data to determine root causes. Both univariate and multivariate algorithms have drawbacks and cannot be integrated with RCA seamlessly.

To overcome these issues, we take a causal perspective (Pearl, 2009; Spirtes et al., 1993) to naturally view anomalies in multivariate time series as instances that do not follow the regular causal mechanism, and propose a novel causality-based framework for detecting anomalies and root causes. Specifically, our approach leverages the causal structure discovered from data so that the joint distribution of multivariate time series is factorized into simpler modules where each module corresponds to a local causal mechanism, reflected by the corresponding conditional distribution. Those local mechanisms are modular or autonomous (Pearl, 2009), and can then be handled separately, which is known as the modularity property of causal systems. In light of this property, the problem is then naturally decomposed into a series of low-dimensional anomaly detection problems. Each sub-problem is concerned with a local mechanism. Because we focus on issues with separate local causal mechanisms, the root causes of an anomaly can be identified at the same time. The main contributions of this paper are summarized below.

-

•

We reformulate anomaly detection and root cause analysis of multivariate time series from a causality perspective, which helps understand where and how anomalies happen and facilitates anomaly detection in light of the understanding.

-

•

We propose a novel framework that decomposes the multivariate time series anomaly detection problem into a series of separate low-dimensional anomaly detection problems by exploiting the causal structure discovered from data, which not only detects the anomalies more accurately but also offers a natural way to find their root causes.

-

•

We perform empirical studies of evaluating our approach with both simulation and public datasets as well as a case study of an internal real-world AIOps application, validating its efficacy and robustness to different causal discovery techniques and settings.

Our formulation offers an alternative understanding of anomalies: an anomaly is a data point that does not follow the regular data-generating process. The modularity property makes our approach simpler to train, suitable for real-world applications and easier for root cause analysis. Our method can detect those anomalies that are hard for the approaches based on modeling marginal/joint distributions only, illustrating the benefit of the causal view and treatment of anomalies.

2 Related Work

Anomaly detection methods for univariate time series can be applied to each dimension of multivariate time series. Popular univariate anomaly detection techniques include statistical or distance-based methods, e.g., KNN (Chaovalitwongse et al., 2007; Angiulli & Pizzuti, 2002), One-Class SVM (Manevitz & Yousef, 2002), and probabilistic methods (Chandola et al., 2009). These methods are computationally efficient and suitable for high dimensional data. But their performance degrades faced with long-term anomalies since the temporal patterns within time series are ignored. To address this issue, temporal prediction methods, e.g., ARIMA, SARIMA (Hamilton, 1994), Prophet (Taylor & Letham, 2018), SR-CNN (Ren et al., 2019), and DONUT (Laptev et al., 2015), have been proposed to model temporal dependencies/autocorrelations. However, these methods treat each dimension individually and ignore the correlations between different time series. As shown in Figure 1, they cannot identify the anomaly corresponding to the abnormal causal mechanism.

Recent years have seen the increasing popularity of unsupervised methods using deep learning techniques, which can infer the correlations between time series. For example, DAGMM (Zong et al., 2018) combines an autoencoder with a Gaussian mixture model to model the joint distribution. MSCRED (Zhang et al., 2019) utilizes the system signature matrix to model the correlations and temporal patterns. LSTM-VAE (Park et al., 2017) combines LSTM with VAE and models temporal dependencies through LSTM. OmniAnomaly (Su et al., 2019) learns robust time series representations with a stochastic variable connection and a planar normalizing flow. USAD (Audibert et al., 2020) uses adversely trained autoencoders inspired by GANs, providing fast training. However, these methods model the joint distribution directly without considering the process behind multivariate time series, and an anomaly that happens to a local mechanism in the process might not change the joint distribution dramatically. Besides, it is difficult for them to leverage the domain knowledge of the monitored system, e.g., the known causal dependencies between time series, and to provide explanations that are crucial for root cause analysis and remediation when an anomaly occurs. Finally, our work also differs substantially from existing studies (Qiu et al., 2012; 2020) which though explore causality in anomaly detection in different ways, but do not use the causal mechanism to model anomalies in time series.

Root cause analysis (RCA) methods leverage the KPI metrics monitored on those services to determine the root causes when an anomaly event is detected. The key idea behind RCA with KPI metrics is to analyze the relationships or dependencies between these metrics and then utilize these relationships to identify root causes when an anomaly occurs. Typically, there are two types of approaches: 1) identifying the anomalous metrics in parallel with the observed anomaly via metric data analysis, and 2) discovering topology/causal graphs that represent the causal relationships between the services.

Nguyen et al. (2011; 2013) propose two similar RCA methods by analyzing low-level system metrics, e.g., CPU, memory and network statistics. Both methods first detect abnormal behaviors for each component via a change point detection algorithm when a performance anomaly is detected, and then determine the root causes based on the propagation patterns obtained by sorting all critical change points in a chronological order. Shan et al. (2019) developed a low-cost RCA method called -Diagnosis to detect root causes of small-window long-tail latency for web services. -Diagnosis assumes that the root cause metrics of an abnormal service have significantly changes between the abnormal and normal periods. But these methods don’t consider the causal relationships between KPI metrics or the dependencies between services in an application.

The second type of RCA approaches leverages such dependencies, which usually involves two steps, i.e., constructing topology/causal graphs given the KPI metrics and domain knowledge, and extracting anomalous subgraphs or paths given the observed anomalies. Such graphs can either be reconstructed from the topology (domain knowledge) of a certain application (Thalheim et al., 2017; Wu et al., 2020; Álvaro Brandón et al., 2020; Samir & Pahl, 2019) or automatically estimated from the metrics via causal discovery techniques (Wang et al., 2018; Mariani et al., 2018; Chen et al., 2019; Meng et al., 2020; Lin et al., 2018; Ma et al., 2019; 2020). To identify the root causes of the observed anomalies, random walk (e.g., Kim et al. (2013); Meng et al. (2020); Wang et al. (2018)), page-rank (e.g., Wu et al. (2020)) or other analysis methods can be applied over the discovered topology/causal graphs. But these methods only accept univariate time series anomaly detectors, i.e., detecting anomalies for each metric separately.

3 The Causal Approach

Given a multivariate time series with length and variables, i.e., , let be the observation of the th variable measured at time . The task in this paper is to detect anomalies after time step that differ from the regular points in significantly and identify the corresponding root causes.

3.1 Why the causal view matters

Let us consider a simple example shown in Figure 1, i.e., the measurements of three components with causal structure . An anomaly labeled by a black triangle happens at time step , where the causal mechanism between and becomes abnormal. Typically it is hard to find such an anomaly based on the marginal distributions or the joint distribution. But from local causal mechanism , such anomaly becomes obvious, e.g., is much lower than its normal values. In this example, at time step the probability densities , , , while , meaning that it is easier to find this anomaly by examining the local causal mechanism .

Figure 2 shows why causality matters with a real-world example. In SWaT (Mathur & Tippenhauer, 2016), at timestamp 491, our causality-based approach detects a true anomaly where the causal mechanism between Metrics 1, 0 and 9 is violated (Metrics 0 and 9 are the causal parents of Metric 1). We plot the probability density of the reconstruction error based on the causal mechanism (top left figure), where the black triangle is the anomaly. Clearly, this anomaly can be easily identified w.r.t. the p-value. But if we check the probability density of the “joint” reconstruction error by AE (top right figure), this anomaly cannot be found w.r.t. the p-value. The bottom figure plots the time series data of these three metrics. Intuitively, we can observe that Metric 1 has a peak value when Metric 0 is low, and Metric 1 is low when Metric 0 is high. In the range (450, 550), this causal mechanism is violated, e.g., Metric 0 is high while Metric 1 is also high. This type of anomalies is hard to be identified by checking joint or marginal distributions.

If the causal structure of the underlying process is given, we can examine whether each variable in the time series follows its regular causal mechanism. The causal mechanism can be represented by the structural equation model, i.e., where are arbitrary measurable functions, are independent noises and represents the causal parents of including both lagged and contemporaneous ones (Pearl, 2009). This causal structure can also be represented by a causal graph whose nodes correspond to the variables at different time lags. In this paper, we assume the graph is a directed acyclic graph (DAG) and that the causal relationships are stationary unless an anomaly occurs. According to the Markov factorization, the joint distribution of can be factored as where denotes the conditional distribution.

The local causal mechanisms, corresponding to these conditional distribution terms, are known to be irrelevant to each other in a causal system (Pearl, 2009). An anomaly can then be identified according to the local causal mechanism. Therefore, we define anomalies as follows.

Definition 1

A point at time step is an anomaly if there exists at least one variable such that violates the local generating mechanism, i.e., given , does not follow , which is the conditional distribution corresponding to the regular causal mechanism.

This definition states that an anomaly happens in the system if the causal mechanism between a variable and its causal parents are violated, e.g., the local causal effect dramatically varies (Fig 1), or a big change happens on a variable and this change propagates to its children. Based on Definition 1, the anomaly detection problem can be divided into several low-dimensional subproblems, e.g., by checking whether each variable follows its regular conditional distribution. Thank to this modularity property, the root causes can be naturally identified when an anomaly event occurs. Here is our definition of root causes.

Definition 2

The root causes of an anomaly point are those variables such that given , does not follow , e.g., an anomaly happens on the local causal mechanism related to those variables.

3.2 Method

We consider the unsupervised learning setting where is given as the training data for learning the graph structures and the conditional distributions. (We will discuss the effects of possible anomalies on the learned causal structure in Section 3.2.4.) For learning causal graphs, we exploit suitable causal discovery methods, as discussed in Section 3.2.1. For learning conditional distributions, we maximize the log likelihoods given the observation data, i.e., maximizing Specifically, let be the set of variables with no causal parents in . There are two cases to be considered:

-

•

Variable with parents (): The conditional distribution of given its causal parents needs to be estimated, i.e., is modeled via conditional density estimation, which can be learned in a supervised manner.

-

•

Variable with no parents (): We model by applying any existing method for modeling time series with the historical data of , meaning that our framework can leverage the state-of-the-art time series models.

The training step produces the causal graph and the estimated conditional distributions corresponding to local causal mechanisms (Section 3.2.2). For anomaly detection of local causal mechanism, we detect data points that do not follow regular conditional distributions. There are multiple possible ways to compute the final anomaly score; Heard & Rubin-Delanchy (2018) compared six methods for combining p-values from individual tests, and showed that taking the minimum is sensitive to the smallest p-value, which is suitable for reporting anomalies that any of the metrics is abnormal. Hence the anomaly score is defined as one minus the minimum value of these estimated probabilities. Intuitively, the purpose of using the minimum function is that we expect the algorithm to report an anomaly if any of the metrics (root variables) or local causal mechanisms (conditional probabilities) becomes abnormal, i.e., a data point is labeled as an anomaly if its anomaly score is larger than a certain threshold. If an anomaly event is detected, the root cause scores are computed for each variable and then the variables with the top scores are selected as the root causes (Section 3.2.3). Algorithm 1 outlines our approach. The anomalies in training data may decrease the performance. We discuss this issue and provide a solution for handling training anomalies in Section 3.2.4.

Input: training data , test data , and threshold ;

Training procedure:

Detection procedure:

3.2.1 Causal discovery

Our approach needs to exploit the causal structure underlying the data. A traditional way to find causal relations is to use interventions or randomized experiments, which are generally too expensive and time-consuming. Discovering causal information by analyzing purely observational data, known as causal discovery, is then an important problem (Spirtes & Glymour, 1991; Peters et al., 2017; Spirtes & Zhang, 2016). Multiple algorithms have been developed for causal discovery from independent and identically distributed (i.i.d.) or time series data, and their results are asymptotically guaranteed under corresponding assumptions. In this paper, we choose causal discovery algorithms such as PC (Spirtes & Glymour, 1991), FGES (Chickering, 2003; 2002; Meek, 1995), depending on whether we are given temporal data (with time-delayed causal relations) and whether the causal relations are linear or nonlinear. For example, we apply FGES with SEM-BIC score if the variables are linearly related and apply FGES with generalized score function (Huang et al., 2018) if they are non-linearly correlated. One concern is whether the missing or incorrect causal links in the inferred causal graph have a big impact on the performance of our approach. We performed an empirical study of this impact with public datasets, which shows that interestingly, our approach is robust to the inferred causal graph. The complexity of PC and GES highly depends on the density of the causal graph. Specifically, FGES is highly scalable when dealing with linear models (Ramsey et al., 2016). In real-world applications, e.g., the public datasets in the experiments, even though the variables may not be exactly linearly correlated, FGES can still generate reasonable causal graphs that are good enough for our approach.

3.2.2 Anomaly detection

After the causal Markov factorization, it becomes easier to model the joint distribution compared to the previous approaches, e.g., the conditional distributions representing local causal mechanisms can be estimated using simpler ML models.

For modeling , one can apply kernel conditional density estimation (Hastie et al., 2009), conditional VAE (CVAE) (Sohn et al., 2015) or even prediction models such as MLP or CNN (Binkowski et al., 2018). Let be the causal time lag for a parent and be the maximum time lag in ; then we define Time lag if is a contemporaneous causal parent of . For causal parent , more of its historical data can also be included, e.g., a window with size : . Therefore, the problem becomes estimating the conditional distribution from the empirical observations where . In this paper, we apply CVAE to model such conditional distribution. The reason why choosing CVAE is that it can be trained fast with a simple architecture and achieve good performance as shown in our experiments. The empirical variational lower bound of CVAE is

where , are MLPs and is a Gaussian distribution. Given , CVAE outputs – reconstruction of , and then is measured by the distribution of . 111We assume the reconstruction error is additive, e.g., , so that . Hence we use the distribution of the reconstruction error for detecting anomalies.

If is empty, i.e., , one way to estimate distribution is to handle via univariate time series models, e.g., ARIMA (Hamilton, 1994), SARIMA (Hyndman & Athanasopoulos, 2018). The other way is to handle the variables in together by utilizing the models for multivariate time series anomaly detection, e.g., Isolation Forest (IF) (Liu et al., 2008), AE (Baldi, 2012), LSTM-VAE (Park et al., 2017). The training data for such models includes all the observations of the variables in , i.e., . For example, the training data for a forecasting based method is where is predicted by a window of its previous data points.

Our approach reduces to the previous univariate/multivariate time series AD approaches if the causal graph is empty, i.e., no causal relationships are considered. When the causal relationships are available obtained by domain knowledge or data-driven causal discovery techniques, our approach can easily utilize such information and reduces the efforts in joint distribution estimation.

3.2.3 Root cause analysis

Root cause analysis (RCA) aims to identify root causes when an anomaly event happens. RCA in real-world applications such as AIOps can be very challenging. One practical issue for identifying root causes is that an anomaly occurs in a variable often makes its causal children variables abnormal due to anomaly propagation. Specifically, based on Definition 2, we propose the following practical algorithm. For variable , define its initial root cause score at time by . Suppose that is the set of the causal children of , the final root cause score is define in a PageRank (Page et al., 1999; Wu et al., 2020) way:

| (1) |

where is a weight parameter satisfying . When is empty, we set . Here the final root cause score of a variable is the weighted combination of its initial root cause score and the final root cause scores of its children to handle the anomaly propagation issue. The root causes at time are identified by picking the variables with top scores.

3.2.4 Negative effect of training anomalies

The existence of anomalies in the training data may decrease the detection performance. Our empirical results show that this issue does not affect the anomaly detection performance much, which is expected to be the case when there are relatively few anomalies in the data. Typically, there are two possible cases where anomalies in training data may have obvious negative impacts on performance. One case is that the value of a metric at certain timestamps becomes extremely large, which can affect the conditional probability estimation. In this case, one can simply remove those values based on statistical rules, e.g., removing them if the absolute value is larger than some threshold in the preprocessing step. The other case is that the proportion of anomalies is relatively large. In this case, we can consider an iterative solution that iteratively updates the causal graph and anomaly detection model, i.e., 1) estimate causal graph and train models with the training data, and 2) remove the anomalies detected by in the training data and then go to Step (1). We repeat the above two steps until the estimated causal model (including the estimated causal structure and quantitative model, e.g., causal coefficients in the linear case) converges.

4 Experiments

This section evaluates the performance of our proposed approach and compares it to several other approaches. The experiments include: 1) evaluating our approach with simulation and public datasets, 2) analyzing how different causal graphs affect the performance, and 3) a case study demonstrating the application of our approach for real-world anomaly detection in AIOps.

The anomaly detection performance is assessed by the precision, recall and F1-score metrics in a point-adjust manner, i.e., all the anomalies of an anomalous segment are considered as correctly detected if at least one anomaly of this segment is correctly detected while the anomalies outside the ground truth anomaly segment are treated as normal. By default, we apply FGES (Chickering, 2003) to discover the causal graph. For , we choose CVAE (Sohn et al., 2015). For , we tested the univariate model and other methods such as IF (Liu et al., 2008), AE (Baldi, 2012), LSTM-VAE (Park et al., 2017) in our experiments. We compare our approach with several unsupervised approaches, e.g., AE (Baldi, 2012), DAGMM (Zong et al., 2018), OmniAnomaly (Su et al., 2019), USAD (Audibert et al., 2020), GANF (Dai & Chen, 2022)222https://github.com/EnyanDai/GANF.

4.1 Experimental Setup and Parameters Settings

For the implementation of our approach, we employ the CVAE to model conditional distributions . We choose the same parameters for all the experiments on both simulated and public real datasets. The encoder and decoder in CVAE are both MLPs with hidden sizes . The latent size is 5 and the prior distribution is assumed to be the standard normal distribution (doesn’t depend on ). For training CVAE, the optimizer is ADAM with learning rate , batch size and epoch num .

For modeling , there are several options to choose in practical applications. For the simulated datasets and our internal AIOps dataset, we choose a univariate anomaly detection method based on a CNN forecasting model. The CNN forecasting model consists of 4 residual blocks with 1D convolutional layers, i.e., the “(input channels, output channels)” pairs are , followed by the concatenate of 1D adaptive average pooling and 1D adaptive max pooling. The output layer is a linear layer. For each residual block, it has two convolutional layers “(input channels, output channels) – (output channels, output channels)”. We choose ADAM as the optimizer with learning rate (with decay), batch size and epoch num . The window size of the historical data for prediction is . For the public datasets, besides this CNN forecasting model, we can also choose isolation forest (IF) and autoencoder (AE). For IF, the max number of samples is . For AE, the hidden sizes of the encoder are , the latent size is , and the hidden sizes of the decoder are .

For the simulated datasets, we apply FGES and set “max degree = 5” and “penalty discount = 20”. For the public datasets SWaT and WADI, we apply FGES and set “max degree = 10” and “penalty discount = 100”. For SMAP and MSL, we apply the PC algorithm with the default parameters. The library for causal discovery we used in this project is Tetrad 333https://github.com/cmu-phil/tetrad. Smaller “max degree” or larger “penalty discount” in FGES leads to more sparse graphs with less edges. Table 1 lists the number of the edges in the causal graphs discovered with different parameters.

The reason why we choose these parameters such as CVAE hidden sizes = [10, 20, 10] is as follows. For all the simulation datasets, the “max-degree” is set to 5 in FGES and the causal relations are instantaneous, meaning that the number of causal parents of each variable is at most 5 so that the input dimensions of the parent variables in CVAEs for modeling conditional probabilities are at most 5. For the public datasets, the “max-degree” is 10 and we found that there are instantaneous causal influences but not time-delayed ones, so the input dimensions of the parent variables in CVAEs are at most 10. That’s why we choose those parameters for the encoder and decoder. For a new dataset, if one considers a similar setting for causal discovery, he/she can use our parameters as default. In general, the input dimensions of CVAEs are at most “max-degree” * “time-lag”, so one can choose the hidden sizes around this number. For modeling conditional probabilities, one can construct a validation set by splitting the training dataset. Under the Gaussian distribution assumption in CVAE, the overfitting issue can be found and avoided by measuring the reconstruction MSE loss.

For all the experiments, the detection thresholds are inferred by taking the th percentile of the detection scores in the test data, e.g., we choose for SWaT and WADI, for SMAP and MSL. For the other methods (except ours) in the simulated datasets, the reported precision, recall and F1-score metrics are the best metrics that can be achieved in the test datasets (by choosing the best threshold).

| SWaT | max degree (penalty discount = 100) | penalty discount (max degree = 10) | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| d=5 | 6 | 7 | 8 | 9 | 10 | p=20 | 40 | 60 | 80 | 100 | 120 | |

| Edge num | 70 | 79 | 88 | 95 | 98 | 102 | 139 | 122 | 115 | 111 | 102 | 93 |

| WADI | max degree (penalty discount = 100) | penalty discount (max degree = 10) | ||||||||||

| d=5 | 6 | 7 | 8 | 9 | 10 | p=20 | 40 | 60 | 80 | 100 | 120 | |

| Edge num | 152 | 180 | 195 | 211 | 227 | 249 | 331 | 308 | 278 | 262 | 249 | 225 |

4.2 Simulation datasets

We consider linear/nonlinear causal relationships and three types of anomalies. The simulated time series data can be generated in the following steps:

-

1.

Generate an Erdös Rényi random graph with number of nodes/variables and edge creation probability , then convert it into a DAG. We choose .

-

2.

For the variables with no parents in , randomly pick a signal type from “harmonic”, “pseudo periodic” and “autoregressive” and generate a time series with length according to this type. We use the Python library “TimeSynth”444https://github.com/TimeSynth/TimeSynth to generate such signals. When generating these signals, the stop time is set to 100. For “harmonic”, the frequency and the noise std are uniformly drawn from and , respectively. For “pseudo periodic”, the frequency is uniformly drawn from , “freqSD” and “ampSD” are set to and . For “autoregressive”, “ar_param” is uniformly sampled from and “sigma” is uniformly sampled from .

-

3.

For a variable with parents in , we consider both linear relationship and nonlinear relationship , where is uniformly sampled from and is uniformly sampled from . The time series for those variables are generated in a topological order.

-

4.

Add anomalies into the generated time series: We consider three types of anomalies. The first one is a “measurement” anomaly, i.e., randomly pick a variable , a time step , a scale (uniformly sampled from ) and a duration (uniformly sampled from ), and then set . The second one is an “intervention” anomaly, i.e., after generating “measurement” anomalies for some variables, those anomaly values propagate to the children according to the causal mechanisms. The third one is an “effect” anomaly where anomalies only happen on the variables with no causal children.

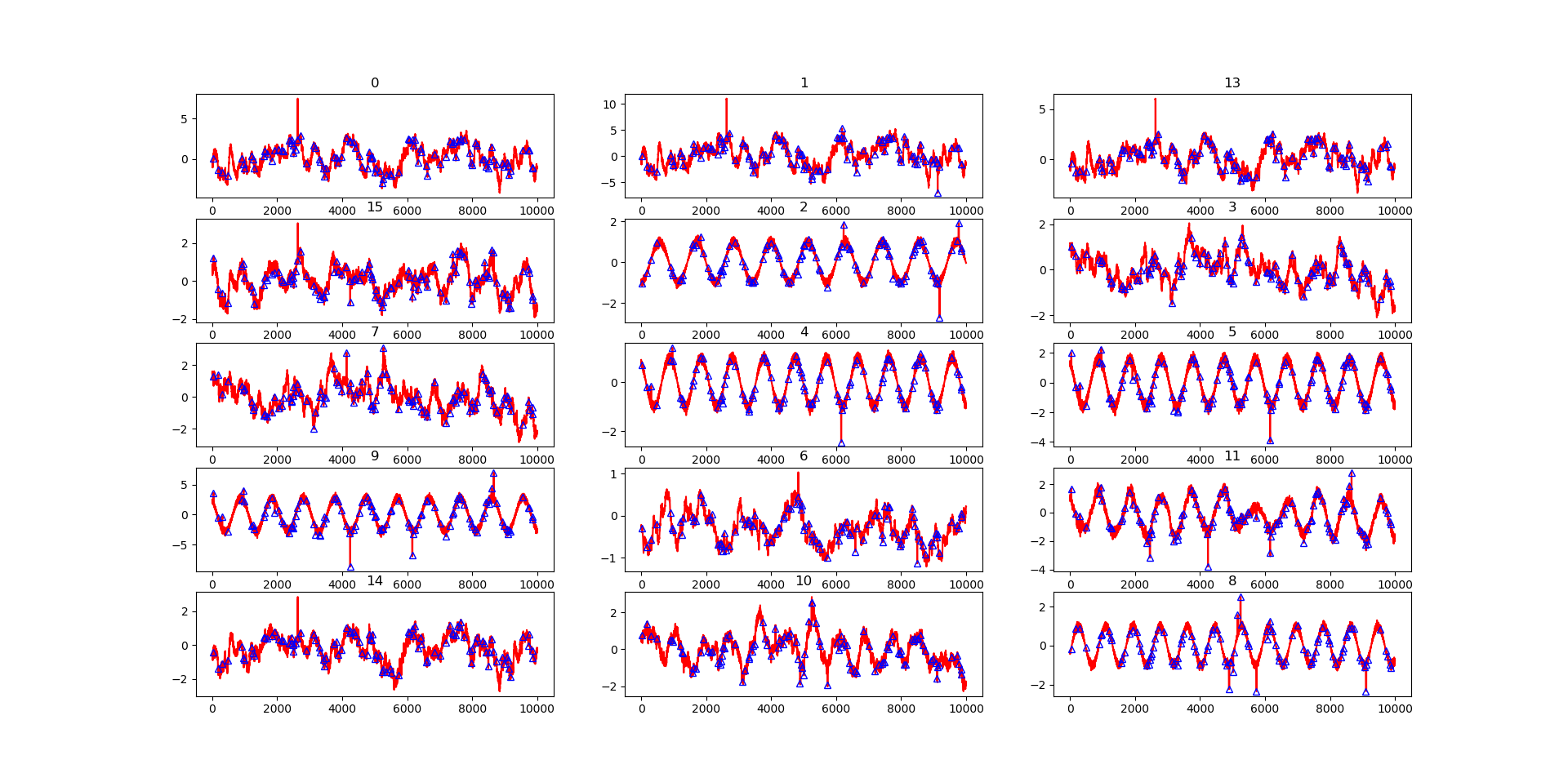

Figure 3 shows the generated causal graph which contains 15 variables. According to this causal graph, we can generate multivariate time series by following the procedure mentioned above, as shown in Figure 4 and Figure 5 where some of the abnormal time steps in the test dataset are labeled by blue triangles.

Performance comparison. In the experiments, we consider six settings derived from the combinations of “linear/nonlinear” and “measurement/intervention/effect”. The simulated time series has variables with length , where the first half is the training data and the rest is the test data. The percentage of anomalies is . Table 2 shows the performance of different unsupervised multivariate time series anomaly detection methods with the generated simulated dataset.

| Lin./Measu. | Lin./Inter. | Lin./Effect | Nonlin./Measu. | Nonlin./Inter. | Nonlin./Effect | |

| IF | 0.374 | 0.403 | 0.220 | 0.336 | 0.422 | 0.367 |

| AE | 0.386 | 0.359 | 0.240 | 0.392 | 0.390 | 0.363 |

| VAE | 0.343 | 0.328 | 0.208 | 0.396 | 0.377 | 0.306 |

| LSTM-VAE | 0.457 | 0.454 | 0.485 | 0.581 | 0.545 | 0.393 |

| DAGMM | 0.746 | 0.542 | 0.721 | 0.456 | 0.589 | 0.359 |

| USAD | 0.252 | 0.260 | 0.220 | 0.346 | 0.302 | 0.279 |

| GANF | 0.292 | 0.340 | 0.213 | 0.355 | 0.316 | 0.286 |

| Ours | 0.791 | 0.757 | 0.740 | 0.757 | 0.759 | 0.637 |

Clearly, our method outperforms all the other methods. It achieves significantly better F1 scores when the relationships are nonlinear or the anomaly type is “intervention”, e.g., ours obtains F1 score 0.759 for “nonlinear, intervention”, while the best F1 score achieved by the others is 0.589. In “linear, measurement/effect”, DAGMM has a similar performance with ours because the data can be modeled well by applying dimension reduction followed by a Gaussian mixture model. But when the relationships become nonlinear, it becomes harder for DAGMM to model the data. This experiment shows that the causal mechanism plays an important role in anomaly detection. Modeling joint distribution without considering causality can lead to a significant performance drop.

We use the same simulation datasets as anomaly detection to evaluate the RCA performance measured by the top-k hit ratio, i.e., the predicted top-k root causes are correct as long as one of them is the groundtruth root cause. Table 3 shows the RCA performance of our approach and the baseline. The baseline ignores the causal relationships while samples root causes based on the probabilities proportional to the anomaly scores.

| Top 1 | Top 2 | Top 3 | Top 4 | |

| Linear/Measu. (Baseline) | ||||

| Linear/Measu. (Ours) | ||||

| Linear/Inter. (Baseline) | ||||

| Linear/Inter. (Ours) | ||||

| Nonlinear/Measu. (Baseline) | ||||

| Nonlinear/Measu. (Ours) | ||||

| Nonlinear/Inter. (Baseline) | ||||

| Nonlinear/Inter. (Ours) |

Our approach achieves and for the “linear” and “nonlinear” settings, respectively, which is significantly better than the baseline.

Training Anomalies. When the fraction of anomalous points is large in the training data, these anomalies may decrease detection performance since the discovered causal graph may not be accurate. In this case, we can apply the solution discussed in Section 3.2.4, updating the causal graph and anomaly detection model iteratively. In this experiment, the training and test data are generated under the setting “linear/measurement”, and a large proportion of noises are added into the training data, i.e., adding additional Gaussian noises to the first 20% data points in the training data. These noisy data points makes estimating accurate causal graphs harder via causal discovery algorithms. In each iteration, 3% data points are detected as anomalies and removed. Figure 6(a) shows the detection performance on the test dataset measured by the F1 scores over each iteration. In the beginning the discovered causal graph has more errors due to the noises in the training data, leading to the low F1 score. After each iteration, our approach removes the detected anomalies from the training data, making the discovered causal graph more accurate in the next iteration, so that the detection performance increases consistently. This experiment empirically verifies our “iterative updates” approach in the case where the training data has a large portion of anomalies. Figure 6(b) plots the difference between the adjacency matrices of two consecutive estimated causal graphs, which increases first then decreases and converges to 0 since the distribution of training data gradually changes from a mix of noises and regular points to regular points only.

This experiment considers an extreme case that the proportion of anomalies and the magnitude of anomalies are large. In practical applications where the proportion of anomalies in training data is relatively small., e.g., the public datasets, there is no need to apply this iterative approach, i.e., one iteration is good enough.

4.3 Public datasets

Four public datasets were used in our experiments: 1) Secure Water Treatment (SWaT) (Mathur & Tippenhauer, 2016): it consists of 11 days of continuous operation, i.e., 7 days collected under normal operations and 4 days collected with attacks, 2) Water Distribution (WADI) (Mathur & Tippenhauer, 2016): It consists of 16 days of continuous operation, of which 14 days were collected under normal operation and 2 days with attacks. 3) Soil Moisture Active Passive (SMAP) satellite and Mars Science Laboratory (MSL) rover Datasets (Hundman et al., 2018), which are two public datasets expert-labeled by NASA.

Performance comparison. Table 4 shows the results on four representative datasets.

| SMAP | MSL | SWaT | WADI | |||||||||

| Methods | Prec. | Recall | F1 | Prec. | Recall | F1 | Prec. | Recall | F1 | Prec. | Recall | F1 |

| IF | 0.815 | 0.591 | 0.685 | 0.854 | 0.922 | 0.887 | 0.998 | 0.669 | 0.801 | 0.541 | 0.794 | 0.644 |

| AE | 0.806 | 0.585 | 0.678 | 0.858 | 0.892 | 0.875 | 0.999 | 0.656 | 0.792 | 0.595 | 0.762 | 0.668 |

| VAE | 0.808 | 0.588 | 0.681 | 0.771 | 0.656 | 0.709 | 0.999 | 0.656 | 0.792 | 0.616 | 0.855 | 0.716 |

| LSTM-VAE | 0.818 | 0.591 | 0.686 | 0.859 | 0.911 | 0.884 | 0.997 | 0.689 | 0.815 | 0.658 | 0.920 | 0.767 |

| DAGMM | 0.800 | 0.877 | 0.837 | 0.900 | 0.864 | 0.882 | 0.829 | 0.767 | 0.797 | 0.639 | 0.501 | 0.412 |

| OmniAnom | 0.758 | 0.975 | 0.853 | 0.901 | 0.889 | 0.895 | 0.722 | 0.983 | 0.833 | 0.265 | 0.980 | 0.417 |

| USAD | 0.769 | 0.983 | 0.863 | 0.861 | 0.964 | 0.910 | 0.987 | 0.740 | 0.846 | 0.645 | 0.322 | 0.430 |

| GANF | 0.692 | 0.549 | 0.612 | 0.285 | 0.773 | 0.416 | 0.964 | 0.706 | 0.815 | 0.576 | 0.596 | 0.586 |

| Ours | 0.874 | 0.982 | 0.925 | 0.867 | 0.961 | 0.912 | 0.945 | 0.892 | 0.918 | 0.749 | 0.901 | 0.818 |

| (std) | 0.001 | 0.006 | 0.003 | 0.003 | 0.011 | 0.007 | 0.009 | 0.016 | 0.008 | 0.021 | 0.029 | 0.023 |

Overall, IF, AE, VAE and DAGMM have relatively lower performance because they neither exploit the temporal information nor leverage the causal relationships between those variables. LSTM-VAE, OmniAnomaly and USAD perform better than these four methods since they utilize the temporal information via modeling the current observations with the historical data, while the DAG-based method GANF does not perform well except for SWaT. Our approach exploits the causal relationships besides the temporal information, achieving significantly better results than the other methods in all the datasets, e.g., ours has the best F1 score 0.918 for SWaT and 0.818 for WADI, while the best F1 scores for SWaT and WADI by other methods are 0.846 and 0.767, respectively. For each public datasets, Table 5 reports the best metrics that can be achieved by choosing the best thresholds in the test datasets. Clearly, if we are allowed to choose better thresholds, the metrics achieved by our approach can be much higher, e.g., F1-score 0.946 for SMAP and 0.913 for MSL.

| Dataset | SMAP | MSL | SWaT | WADI |

|---|---|---|---|---|

| Precision* | ||||

| Recall* | ||||

| F1* |

Table 6 shows the running time of our approach. The most time consuming step is local causal mechanism estimation (conditional distribution estimation). After training, our approach detects anomalies and root causes fast.

| Stage | SWaT | WADI |

|---|---|---|

| Training (Causal discovery) | 10.27s | 42.18s |

| Training (Conditional distribution estimation) | 415.37s | 1026.59s |

| Inference (anomaly detection) | 0.279ms per point | 0.636ms per point |

Ablation study on for . This experiment evaluates the effect of the causal information on anomaly detection. For an anomaly detection method such as IF and AE, we compare with our approach “ours + ” that uses CVAE for (estimates ) and for (estimates ). We report the metrics as mentioned above and the best metrics achieved by choosing the best thresholds in the test datasets.

| SWaT | WADI | |||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Prec. | Rec. | F1 | Prec.* | Rec.* | F1* | Prec. | Rec. | F1 | Prec.* | Rec.* | F1* | |

| 0.952 | 0.874 | 0.911 | 0.950 | 0.929 | 0.940 | 0.749 | 0.920 | 0.826 | 0.873 | 0.979 | 0.923 | |

| IF | 0.947 | 0.893 | 0.919 | 0.946 | 0.945 | 0.945 | 0.738 | 0.920 | 0.819 | 0.948 | 0.920 | 0.934 |

| AE | 0.958 | 0.900 | 0.928 | 0.963 | 0.920 | 0.941 | 0.789 | 0.920 | 0.850 | 0.931 | 0.979 | 0.955 |

| LSTM-VAE | 0.954 | 0.874 | 0.912 | 0.951 | 0.936 | 0.944 | 0.748 | 0.920 | 0.825 | 0.949 | 0.920 | 0.934 |

Table 7 shows the performance of our approach with different , where means that the anomalies are detected by only without using . By comparing this table with Table 4 we can observe that “ours + ” performs much better than using only, e.g., “ours + AE” achieves F1 score 0.850 for WADI, while AE obtains 0.668 for WADI. If is not used in anomaly detection, we get a performance drop in terms of F1 score. For example, the best F1 score drops from 0.934 to 0.923 for WADI.

Ablation study on causal discovery and causal mechanism estimation. We also studied the effects of different parameters for discovering causal graphs on the performance of our approach. The parameters that we investigated are “max degree” and “penalty discount” in FGES, both of which affect the structure of the causal graph, e.g., sparsity, indegree, outdegree. In this experiment, we use 6 different “max degree” and 6 different “penalty discount” . Smaller “max degree” or larger “penalty discount” leads to more sparse graphs with less edges, e.g., for SWaT, the number of the edges in is when “max degree” , respectively.

Figure 7 plots the detection precision, recall and F1 score obtained with different “max degree” and “penalty discount”. For SWaT, these two parameters don’t affect the performance much. For WADI, when “max degree” decreases (the causal graph becomes more sparse) or “penalty discount” decreases (the causal graph has more false positive links), the performance also decreases but it doesn’t drop much, i.e., the worst F1 score is still above 0.65. When “max degree” 6 and “penalty discount” 40, we got similar performance, e.g., the F1 score is around 0.8, showing that our approach is robust to the changes of the inferred causal graph.

In practice, the causal graph is not required to be accurate, namely, we just need to ensure that it doesn’t contain too many missing links or false positive links. Besides FGES, other methods such as the PC algorithm (Spirtes & Glymour, 1991) can also be applied to infer the causal graphs. The causal graphs inferred by PC are probably different from those computed by FGES. Our experiments show that our anomaly detection approach is stable even though the causal graphs are different.

| Methods | SWaT | WADI | Methods | SWaT | WADI |

|---|---|---|---|---|---|

| Ours (GES) | Ours (CVAE) | ||||

| Ours (PC) | Ours (MLP) | ||||

| Ours (FGES) | Ours (KMN) |

Table 8 compares the performance of our approach with FGES, GES and PC. For SWaT, using FGES, GES and PC have similar performance. For WADI, using PC performs worse than using GES and FGES, but the F1-score 0.768 is still better than the other approaches. The performance drop is because the causal graph discovered by FGES is more accurate than PC in WADI. As shown in Table 8, we also tested different methods for estimating causal mechanisms (conditional distributions), e.g., CVAE, MLP and KMN (Ambrogioni et al., 2017). CVAE works better than the others in SWaT and WADI so we choose CVAE by default.

4.4 Case Study: Real-world Application in AIOps

Our last experiment is to apply our method for a real-world anomaly detection task in AIOps, where the goal is to monitor the operational key performance indicator (KPI) metrics of database services for alerting anomalies and identifying root causes in order to automate remediation strategies and improve database availability in cloud-based services. In this application, we monitor a total of 61 time series variables measuring the KPI metrics of database services, e.g., read/write IO requests, CPU usage, DB time. The data in this case study consists of the one-month measurements. According to the feedback from domain experts, most of the inferred causal relationships shown in Figure 8 are consistent with the known domain knowledge. For example, the discovered links Redo (redo size) – Lfpw (log file parallel write) – Lfs (log file sync) – COMT (commit) are exactly the same as the domain knowledge.

The incidences that happened are relatively rare, e.g., 2 major incidences one month, and our anomaly detection approach correctly detect these incidences. Therefore, we focus on the root cause analysis in this case study. Figure 8 shows an example of one major incidence, showing several abnormal metrics such as DBt (DB time), Lfs (log file sync), APPL (application), TotPGA (total PGA allocated) and a part of the causal graph. The root cause scores computed by our method are highlighted. We can observe that the top root causes metrics are APPL, DBt and TotPGA, all of which correspond to application or database related issues for the incident as validated by domain experts.

Figure 9 shows another major incidence. The top abnormal variables are SYIO (system I/O), USIO (user I/O), Lfpw (log file parallel write), UTIL (I/O utilization). All of them are related to I/O issues, meaning that the root causes are the components related to I/O.

5 Conclusions

Most previous approaches for multivariate time series anomaly detection model the joint distribution directly without considering the underlying causal process of the observed time series data. This paper presented a new definition and formulation of anomalies in multivariate time series from a causal perspective, and proposed a novel approach that exploits the causal structures discovered from data to help detect anomalies more accurately and identify the root causes robustly according to the local causal mechanism. Our experiments on both simulation and real datasets demonstrated the efficacy, robustness and practical feasibility of the proposed approach in real-world applications.

References

- Ambrogioni et al. (2017) Luca Ambrogioni, Umut Güçlü, Marcel A. J. van Gerven, and Eric Maris. The kernel mixture network: A nonparametric method for conditional density estimation of continuous random variables, 2017. URL https://arxiv.org/abs/1705.07111.

- Angiulli & Pizzuti (2002) Fabrizio Angiulli and Clara Pizzuti. Fast outlier detection in high dimensional spaces. In Principles of Data Mining and Knowledge Discovery, pp. 15–27, 2002.

- Audibert et al. (2020) Julien Audibert, Pietro Michiardi, Frédéric Guyard, Sébastien Marti, and Maria A. Zuluaga. Usad: Unsupervised anomaly detection on multivariate time series. In The 26th ACM SIGKDD Intl Conference on Knowledge Discovery & Data Mining, KDD’20, pp. 3395–3404, 2020.

- Baldi (2012) Pierre Baldi. Autoencoders, unsupervised learning, and deep architectures. In ICML Workshop on Unsupervised and Transfer Learning, volume 27 of PMLR, pp. 37–49. PMLR, 2012.

- Binkowski et al. (2018) Mikolaj Binkowski, Gautier Marti, and Philippe Donnat. Autoregressive convolutional neural networks for asynchronous time series. In Proceedings of the 35th International Conference on Machine Learning, volume 80 of PMLR, pp. 580–589. PMLR, 2018.

- Chandola et al. (2009) Varun Chandola, Arindam Banerjee, and Vipin Kumar. Anomaly detection: A survey. 41(3), 2009.

- Chaovalitwongse et al. (2007) Wanpracha Art Chaovalitwongse, Ya-Ju Fan, and Rajesh C. Sachdeo. On the time series -nearest neighbor classification of abnormal brain activity. IEEE Transactions on Systems, Man, and Cybernetics - Part A: Systems and Humans, 37(6):1005–1016, 2007.

- Chen et al. (2019) Pengfei Chen, Yong Qi, and Di Hou. Causeinfer: Automated end-to-end performance diagnosis with hierarchical causality graph in cloud environment. IEEE Transactions on Services Computing, 12(2):214–230, 2019. doi: 10.1109/TSC.2016.2607739.

- Chickering (2002) David Maxwell Chickering. Learning equivalence classes of Bayesian-network structures. J. Mach. Learn. Res., 2(3):445–498, 2002. ISSN 1532-4435; 1533-7928/e.

- Chickering (2003) David Maxwell Chickering. Optimal structure identification with greedy search. J. Mach. Learn. Res., 3(3):507–554, 2003. ISSN 1532-4435; 1533-7928/e.

- Dai & Chen (2022) Enyan Dai and Jie Chen. Graph-augmented normalizing flows for anomaly detection of multiple time series. In International Conference on Learning Representations, 2022. URL https://openreview.net/forum?id=45L_dgP48Vd.

- Dang et al. (2019) Yingnong Dang, Qingwei Lin, and Peng Huang. Aiops: real-world challenges and research innovations. In 2019 IEEE/ACM 41st International Conference on Software Engineering: Companion Proceedings (ICSE-Companion), pp. 4–5. IEEE, 2019.

- Dor & Tarsi (1992) Dorit Dor and Michael Tarsi. A simple algorithm to construct a consistent extension of a partially oriented graph. Technical report, 1992.

- Hamilton (1994) James Douglas Hamilton. Time Series Analysis. Princeton University Press, 1994.

- Hastie et al. (2009) Trevor Hastie, Robert Tibshirani, and Jerome Friedman. The elements of statistical learning: data mining, inference and prediction. Springer, 2009.

- Heard & Rubin-Delanchy (2018) N A Heard and P Rubin-Delanchy. Choosing between methods of combining -values. Biometrika, 105(1):239–246, Jan 2018. ISSN 1464-3510. doi: 10.1093/biomet/asx076. URL http://dx.doi.org/10.1093/biomet/asx076.

- Huang et al. (2018) Biwei Huang, Kun Zhang, Yizhu Lin, Bernhard Schölkopf, and Clark Glymour. Generalized score functions for causal discovery. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, KDD ’18, pp. 1551–1560, 2018.

- Hundman et al. (2018) Kyle Hundman, Valentino Constantinou, Christopher Laporte, Ian Colwell, and Tom Soderstrom. Detecting spacecraft anomalies using lstms and nonparametric dynamic thresholding. In 24th ACM SIGKDD Intl Conf. on Knowledge Discovery & Data Mining, pp. 387–395, 2018.

- Hyndman & Athanasopoulos (2018) Robin John Hyndman and George Athanasopoulos. Forecasting: Principles and Practice. OTexts, 2nd edition, 2018.

- Kim et al. (2013) Myunghwan Kim, Roshan Sumbaly, and Sam Shah. Root cause detection in a service-oriented architecture. In Proceedings of the ACM SIGMETRICS/International Conference on Measurement and Modeling of Computer Systems, SIGMETRICS ’13, pp. 93–104, New York, NY, USA, 2013. Association for Computing Machinery. ISBN 9781450319003. doi: 10.1145/2465529.2465753. URL https://doi.org/10.1145/2465529.2465753.

- Laptev et al. (2015) Nikolay Laptev, Saeed Amizadeh, and Ian Flint. Generic and scalable framework for automated time-series anomaly detection. KDD ’15, pp. 1939–1947, 2015.

- Lin et al. (2018) JinJin Lin, Pengfei Chen, and Zibin Zheng. Microscope: Pinpoint performance issues with causal graphs in micro-service environments. In ICSOC, 2018.

- Liu et al. (2008) Fei Tony Liu, Kai Ming Ting, and Zhi-Hua Zhou. Isolation forest. In 2008 Eighth IEEE International Conference on Data Mining, pp. 413–422, 2008.

- Ma et al. (2019) Meng Ma, Weilan Lin, Disheng Pan, and Ping Wang. Ms-rank: Multi-metric and self-adaptive root cause diagnosis for microservice applications. In 2019 IEEE International Conference on Web Services (ICWS), pp. 60–67, 2019. doi: 10.1109/ICWS.2019.00022.

- Ma et al. (2020) Meng Ma, Jingmin Xu, Yuan Wang, Pengfei Chen, Zonghua Zhang, and Ping Wang. AutoMAP: Diagnose Your Microservice-Based Web Applications Automatically, pp. 246–258. Association for Computing Machinery, New York, NY, USA, 2020. ISBN 9781450370233. URL https://doi.org/10.1145/3366423.3380111.

- Manevitz & Yousef (2002) Larry M. Manevitz and Malik Yousef. One-class svms for document classification. J. Mach. Learn. Res., 2:139–154, March 2002.

- Mariani et al. (2018) Leonardo Mariani, Cristina Monni, Mauro Pezzé, Oliviero Riganelli, and Rui Xin. Localizing faults in cloud systems. In 2018 IEEE 11th International Conference on Software Testing, Verification and Validation (ICST), pp. 262–273, 2018. doi: 10.1109/ICST.2018.00034.

- Mathur & Tippenhauer (2016) Aditya P. Mathur and Nils Ole Tippenhauer. Swat: a water treatment testbed for research and training on ics security. In 2016 International Workshop on Cyber-physical Systems for Smart Water Networks (CySWater), pp. 31–36, 2016.

- Meek (1995) Christopher Meek. Causal inference and causal explanation with background knowledge. In Proceedings of the Eleventh Conference on Uncertainty in Artificial Intelligence, UAI’95, pp. 403–410. Morgan Kaufmann Publishers Inc., 1995.

- Meng et al. (2020) Yuan Meng, Shenglin Zhang, Yongqian Sun, Ruru Zhang, Zhilong Hu, Yiyin Zhang, Chenyang Jia, Zhaogang Wang, and Dan Pei. Localizing failure root causes in a microservice through causality inference. In 2020 IEEE/ACM 28th International Symposium on Quality of Service (IWQoS), pp. 1–10, 2020. doi: 10.1109/IWQoS49365.2020.9213058.

- Nguyen et al. (2011) Hiep Nguyen, Yongmin Tan, and Xiaohui Gu. Pal: Propagation-aware anomaly localization for cloud hosted distributed applications. SLAML ’11, New York, NY, USA, 2011. Association for Computing Machinery. ISBN 9781450309783. doi: 10.1145/2038633.2038634. URL https://doi.org/10.1145/2038633.2038634.

- Nguyen et al. (2013) Hiep Nguyen, Zhiming Shen, Yongmin Tan, and Xiaohui Gu. Fchain: Toward black-box online fault localization for cloud systems. In 2013 IEEE 33rd International Conference on Distributed Computing Systems, pp. 21–30, 2013. doi: 10.1109/ICDCS.2013.26.

- Page et al. (1999) Lawrence Page, Sergey Brin, Rajeev Motwani, and Terry Winograd. The pagerank citation ranking: Bringing order to the web. Technical Report 1999-66, Stanford InfoLab, November 1999. URL http://ilpubs.stanford.edu:8090/422/. Previous number = SIDL-WP-1999-0120.

- Park et al. (2017) Daehyung Park, Yuuna Hoshi, and Charles Kemp. A multimodal anomaly detector for robot-assisted feeding using an lstm-based variational autoencoder. IEEE Robotics and Automation Letters, PP, 11 2017.

- Pearl (2009) Judea Pearl. Causality: Models, Reasoning and Inference. Cambridge University Press, USA, 2nd edition, 2009.

- Peters et al. (2017) J. Peters, D. Janzing, and B. Schölkopf. Elements of Causal Inference - Foundations and Learning Algorithms. Adaptive Computation and Machine Learning Series. The MIT Press, Cambridge, MA, USA, 2017.

- Qiu et al. (2012) Huida Qiu, Yan Liu, Niranjan A. Subrahmanya, and Weichang Li. Granger causality for time-series anomaly detection. In IEEE Intl Conf. on Data Mining, pp. 1074–1079, 2012.

- Qiu et al. (2020) Juan Qiu, Qingfeng Du, Kanglin Yin, Shuang-Li Zhang, and Chongshu Qian. A causality mining and knowledge graph based method of root cause diagnosis for performance anomaly in cloud applications. Applied Sciences, 10(6), 2020.

- Ramsey et al. (2016) Joseph Ramsey, Madelyn Glymour, Ruben Sanchez-Romero, and Clark Glymour. A million variables and more: the fast greedy equivalence search algorithm for learning high-dimensional graphical causal models, with an application to functional magnetic resonance images. International Journal of Data Science and Analytics, 3:121–129, 2016.

- Ren et al. (2019) Hansheng Ren, Bixiong Xu, Yujing Wang, Chao Yi, Congrui Huang, Xiaoyu Kou, Tony Xing, Mao Yang, Jie Tong, and Qi Zhang. Time-series anomaly detection service at microsoft. In 25th ACM SIGKDD Intl Conf. on Knowledge Discovery & Data Mining, pp. 3009–3017, 2019.

- Samir & Pahl (2019) Areeg Samir and Claus Pahl. Dla: Detecting and localizing anomalies in containerized microservice architectures using markov models. In 2019 7th International Conference on Future Internet of Things and Cloud (FiCloud), pp. 205–213, 2019. doi: 10.1109/FiCloud.2019.00036.

- Shan et al. (2019) Huasong Shan, Yuan Chen, Haifeng Liu, Yunpeng Zhang, Xiao Xiao, Xiaofeng He, Min Li, and Wei Ding. e-diagnosis: Unsupervised and real-time diagnosis of small- window long-tail latency in large-scale microservice platforms. In The World Wide Web Conference, WWW ’19, pp. 3215–3222, New York, NY, USA, 2019. Association for Computing Machinery. ISBN 9781450366748. doi: 10.1145/3308558.3313653. URL https://doi.org/10.1145/3308558.3313653.

- Sohn et al. (2015) Kihyuk Sohn, Honglak Lee, and Xinchen Yan. Learning structured output representation using deep conditional generative models. In C. Cortes, N. Lawrence, D. Lee, M. Sugiyama, and R. Garnett (eds.), Advances in Neural Information Processing Systems, volume 28, 2015.

- Soldani & Brogi (2021) Jacopo Soldani and Antonio Brogi. Anomaly detection and failure root cause analysis in (micro)service-based cloud applications: A survey, 2021. URL https://arxiv.org/abs/2105.12378.

- Spirtes & Zhang (2016) P. Spirtes and K. Zhang. Causal discovery and inference: concepts and recent methodological advances. Applied Informatics, 3, 2016. https://doi.org/10.1186/s40535-016-0018-x.

- Spirtes et al. (1993) P. Spirtes, C. Glymour, and R. Scheines. Causation, Prediction, and Search. Spring-Verlag Lectures in Statistics, 1993.

- Spirtes & Glymour (1991) Peter Spirtes and Clark Glymour. An algorithm for fast recovery of sparse causal graphs. Social Science Computer Review, 9(1):62–72, 1991.

- Su et al. (2019) Ya Su, Youjian Zhao, Chenhao Niu, Rong Liu, Wei Sun, and Dan Pei. Robust anomaly detection for multivariate time series through stochastic recurrent neural network. In 25th ACM SIGKDD Intl Conference on Knowledge Discovery & Data Mining, KDD’19, pp. 2828–2837, 2019.

- Taylor & Letham (2018) Sean J. Taylor and Benjamin Letham. Forecasting at scale. The American Statistician, 72(1):37–45, 2018.

- Thalheim et al. (2017) Jörg Thalheim, Antonio Rodrigues, Istemi Ekin Akkus, Pramod Bhatotia, Ruichuan Chen, Bimal Viswanath, Lei Jiao, and Christof Fetzer. Sieve: Actionable insights from monitored metrics in distributed systems. In Proceedings of the 18th ACM/IFIP/USENIX Middleware Conference, Middleware ’17, pp. 14–27, New York, NY, USA, 2017. Association for Computing Machinery. ISBN 9781450347204. doi: 10.1145/3135974.3135977. URL https://doi.org/10.1145/3135974.3135977.

- Wang et al. (2018) Ping Wang, Jingmin Xu, Meng Ma, Weilan Lin, Disheng Pan, Yuan Wang, and Pengfei Chen. Cloudranger: Root cause identification for cloud native systems. In 2018 18th IEEE/ACM International Symposium on Cluster, Cloud and Grid Computing (CCGRID), pp. 492–502, 2018. doi: 10.1109/CCGRID.2018.00076.

- Wu et al. (2020) Li Wu, Johan Tordsson, Erik Elmroth, and Odej Kao. Microrca: Root cause localization of performance issues in microservices. In NOMS 2020 - 2020 IEEE/IFIP Network Operations and Management Symposium, pp. 1–9, 2020. doi: 10.1109/NOMS47738.2020.9110353.

- Zhang et al. (2019) Chuxu Zhang, Dongjin Song, Yuncong Chen, Xinyang Feng, Cristian Lumezanu, Wei Cheng, Jingchao Ni, Bo Zong, Haifeng Chen, and Nitesh V. Chawla. A deep neural network for unsupervised anomaly detection and diagnosis in multivariate time series data. In The Thirty-Third AAAI Conference on Artificial Intelligence, AAAI 2019, pp. 1409–1416, 2019.

- Zhao et al. (2020) Hang Zhao, Yujing Wang, Juanyong Duan, Congrui Huang, Defu Cao, Yunhai Tong, Bixiong Xu, Jing Bai, Jie Tong, and Qi Zhang. Multivariate time-series anomaly detection via graph attention network. CoRR, abs/2009.02040, 2020.

- Zong et al. (2018) Bo Zong, Qi Song, Martin Renqiang Min, Wei Cheng, Cristian Lumezanu, Daeki Cho, and Haifeng Chen. Deep autoencoding gaussian mixture model for unsupervised anomaly detection. In International Conference on Learning Representations, 2018.

- Álvaro Brandón et al. (2020) Álvaro Brandón, Marc Solé, Alberto Huélamo, David Solans, María S. Pérez, and Victor Muntés-Mulero. Graph-based root cause analysis for service-oriented and microservice architectures. Journal of Systems and Software, 159:110432, 2020. ISSN 0164-1212. doi: https://doi.org/10.1016/j.jss.2019.110432. URL https://www.sciencedirect.com/science/article/pii/S0164121219302067.