A Bayesian Approach to Spherical Factor Analysis for Binary Data

Abstract

Factor models are widely used across diverse areas of application for purposes that include dimensionality reduction, covariance estimation, and feature engineering. Traditional factor models can be seen as an instance of linear embedding methods that project multivariate observations onto a lower dimensional Euclidean latent space. This paper discusses a new class of geometric embedding models for multivariate binary data in which the embedding space correspond to a spherical manifold, with potentially unknown dimension. The resulting models include traditional factor models as a special case, but provide additional flexibility. Furthermore, unlike other techniques for geometric embedding, the models are easy to interpret, and the uncertainty associated with the latent features can be properly quantified. These advantages are illustrated using both simulation studies and real data on voting records from the U.S. Senate.

1 Introduction

Factor analysis (Child, 2006; Mulaik, 2009) has a long history that dates back at least to the pioneering work of Charles Spearman on intelligence during the first decade of the 20th century. Factor analysis is widely used across all sorts of disciplines within the social and natural sciences to account for and explain the correlations observed across multivariate responses. In traditional factor analysis, which in the case of normally distributed data includes principal component analysis as a special case, (the mean of) each response variable is represented as a linear combination of a common set of subject-specific factors. These factors can be interpreted as providing a linear embedding of the original high-dimensional data into a low-dimensional Euclidean space. These embeddings can be useful for various purposes, including data description, sparse estimation of covariance matrices, and/or as inputs to predictive models.

Linear embeddings on Euclidean spaces are relatively easy to compute and interpret. However, they might not be appropriate in all circumstances, particularly when the underlying data-generation mechanism involves highly non-linear phenomena. Over the last 30 years, a rich literature has developed covering the use of nonlinear embeddings and data-driven, non-Euclidean embedding manifolds. Auto-encoders (e.g., see Kramer, 1991, Xu & Durrett, 2018, Davidson et al., 2018 and Kingma et al., 2019) are a natural generalization of principal component analysis. They explain the structure in the data through the composition of two non-linear functions (often represented as neural networks). The first function (usually called the encoder) embeds the input data into the low-dimensional latent variables, while the second function (called the decoder) maps those latent variables into the original space of the data in a way that minimizes the reconstruction error. Alternatively, locally-linear embeddings (Roweis & Saul, 2000) reconstruct each input as a weighted average of its neighbours, and subsequently map the data into a low-dimensional embedding using those same weights. Isomap (Tenenbaum et al., 2000) extends the classical multidimensional scaling method into a general, data-driven manifold using geodesic distances measured on a neighbourhood graph. The goal is to preserve the intrinsic geometry of the manifold in which the data lies. Laplacian eigenmaps (Belkin & Niyogi, 2002) also seek to preserve the intrinsic geometry of local neighborhoods. The embedding eigenvectors are obtained as the solution of a generalized eigenvalue problem based on the Laplacian matrix of the neighbourhood graph. Local tangent space alignment (Zhang & Zha, 2004) finds the local geometry through the tangent space in the neighbourhood of inputs from which a global coordinate system for a general manifold is constructed. The inputs are embedded into a low-dimensional space through such global coordinate system. Finally, Gaussian process latent variable models (Lawrence, 2005) use Gaussian process priors to model the embedding function. These various approaches are very flexible in capturing the shape of the relationship between latent variables and outcomes, as well as the geometry of the underlying manifold on which the data lives. As a consequence, they can produce very compact representations of high-dimensional data using a very small number of latent dimensions. However, interpreting and quantifying the uncertainty associated with these embeddings can be quite difficult because of identifiability issues. Indeed, when performing non-linear embeddings, invariance to affine transformations is not enough to ensure identifiability of the latent features. When the goal is prediction or sparse estimation of covariance matrices, this does not matter as these quantities are (usually) identifiable functions of the unidentified latent factors. However, when interest lies in the embedding coordinates themselves (as is the case in many social sciences applications), the lack of identifiability means that we are in the presence of irregular problems in which standard techniques for generating confidence/credible intervals are not applicable. Similarly, interpretation of the latent positions is dependent on the exact geometry of the embedding space, which is in turn only partially specified unless the lack of identifiability is addressed.

An alternative to the nonparametric embedding methods discussed above is to consider more flexible (but fixed) embedding spaces for which identifiability constraints can be easily derived. Such approach trades off some of the flexibility of the nonparametric methods for interpretability and the ability to properly quantify the uncertainty associated with the embedding. Along these lines, this manuscript proposes a general framework for embedding multivariate binary data into a general Riemannian manifold by exploiting the random utility formulation underlying binary regression models (Marschak, 1960; McFadden, 1973). To focus ideas, and because of their practical appeal, we place a special emphasis on spherical latent spaces. Most of our attention in this paper is focused on two key challenges. The first one is eliciting prior distribution for model parameters that do not degenerate as the dimension of the embedding space grows. Well calibrated priors, in the previous sense, are key to enable dimensionality selection. The second challenge is designing efficient computational algorithms for a model in which some of the parameters live on a Riemannian manifold.

It is worthwhile noting that the literature has considered generalizations of data-reduction techniques such as principal components and factor analysis to situations in which the observations live on a manifold. Examples include principal Geodesic Analysis (Fletcher et al., 2004; Sommer et al., 2010; Zhang & Fletcher, 2013) and principal nested spheres (Jung et al., 2012). This literature, however, is only marginally relevant to us since it is the parameters of our model, and not the data itself, that are assumed to live on a spherical manifold.

The remainder of the paper is organized as follows: Section 2 reviews traditional factor analysis models for binary data, including their random utility formulation. Section 3 introduces our spherical factor models and discusses the issues associated with prior elicitation. Section 4 discusses our computational approach to estimating model parameters, which is based on Geodesic Hamiltonian Monte Carlo algorithms. Section 5 illustrates the behavior of our model on both simulated and real datasets. Finally, Section 6 concludes the paper with a discussion of future directions for our work.

2 Bayesian factor analysis models for binary data: A brief overview

Consider data consisting of independent multivariate binary observations associated with subjects, where is a vector in which each entry is associated with a different item. In the political science application we discuss in Section 5, represents the vote of legislator (subject) in question (item) (with corresponding to an affirmative vote, and corresponding to a negative one). On the other hand, in marketing applications, might represent whether consumer (subject) bought product (item) (if ) or not (if ).

A common approach to modeling this type of multivariate data relies on a generalized bilinear model of the form

| (1) |

where is the (known) link function, and the intercepts as well as the bilinear terms and are all unknown and need to be estimated from the data (e.g., see Bartholomew, 1984, Legler et al., 1997, Ansari & Jedidi, 2000 and Jackman, 2001). Different choices for the link function lead to well-known classes models, such as logit and probit models. Furthermore, given a distribution over the latent factors , integrating over them (which, in the case of binary data, can be done in closed form only in very special cases) yields a wide class of correlation structures across items.

The class of factor analysis models for binary data in (1) can be derived through the use of random utility functions (Marschak, 1960; McFadden, 1973). To develop such derivation, we interpret the latent trait as representing the preferences of subject over a set of unobserved item characteristics, and associate with each item two positions, (corresponding to a positive responsive, i.e., ) and (corresponding to a negative one, i.e., ). Assuming that individuals make their choice for each item based on the relative value of two random quadratic utilities,

| (2) |

where and represent random shocks to the utilities, and are independently distributed for all and and have cumulative distribution function , it is easy to see that

where and . This formulation, which is tightly linked to latent variable representations used to fit categorical models (e.g., see Albert & Chib, 1993), makes it clear how the factor model embeds the binary observation into a Euclidean latent space. Furthermore, this construction also highlights a number of identifiability issues associated with the model. In particular, note that the utility functions in (2) are invariant to affine transformations. Therefore, the positions , and are only identifiable up to translations, rotations and rescalings, and the scaling cannot be identified separately from the scale of the latent space. These identifiability issues are usually dealt with by fixing for all , and by either fixing the position of legislators (e.g., see Clinton et al., 2004), or by fixing the location and scale of the ideal points, along with constraints on the matrix of discrimination parameters (e.g., see Geweke & Singleton, 1981). In either case, identifiability constraints can be enforced either a priori, or a posteriori using a parameter expansion approach (e.g., see Liu & Wu, 1999, Rubin & Thomas, 2001 and Hoff et al., 2002).

Bayesian inference for this class of models requires the specification of prior distributions for the various unknown parameters. For computational simplicity, it is common to use (hierarchical) priors for the s, s and s, so that computation can proceed using well-established Markov chain Monte Carlo algorithms (e.g., see Albert & Chib, 1993 and Bafumi et al., 2005). However, the hyperparameters of these priors need to be selected carefully. For example, when the dimension of the embedding space is unknown and needs to be estimated from the data, it is important to ensure that the prior variance on induced by these priors remains bounded as if one is to avoid Bartlett’s paradox (Bartlett, 1957). One approach to accomplish this goal is to select the prior covariance matrix for the s to be diagonal, and to have the marginal variance of its components decrease fast enough as more of them are added.111As an example, consider a probit model (i.e., ) where, a priori, , , and . In this case, converges in distribution to a uniform distribution on as (see the online supplementary materials. This type of construction, which is related but distinct from the one implied by the Indian Buffet process (Griffiths & Ghahramani, 2011), provides a prior that is consistent as the number of components grows, and which can be interpreted as a truncation of an infinite dimensional model. Assigning prior distributions to the s and s (which, in turn, imply priors on the s and s) is also a possibility, but it is rarely done in practice. In that setting, ensuring a satisfactory behavior as the number of dimensions grows typically requires that the variances of all three latent positions decrease as the number of dimensions of the latent space increases. This observation will be important to some of the developments in Section 3.2.

3 Bayesian factor models for binary data on spherical spaces

3.1 Likelihood formulation

The formulation of classical factor models for binary data in terms of random utility functions described in Section 2 lends itself naturally to extensions to more general embedding spaces. In particular, we can generalize the model by embedding the positions , and into a connected Riemannian manifold equipped with a metric , and then rewriting the utility functions in (2) as

| (3) |

Assuming that the errors and are such that their differences are independent with cumulative distribution function , this formulation leads to a likelihood function of the form

where is the matrix of observations whose rows correspond to , and

| (4) |

In this paper, we focus on the case in which corresponds to the unit hypersphere in dimensions, , which is equipped with its geodesic distance , the shortest angle separating two points measured over the great circle connecting them. There are two alternative ways to describe such a space. The first one uses a hyperspherical coordinate system and relies on a vector of angles, . The second one uses the fact that is a submanifold of , and relies on a vector of Cartesian coordinates subject to the constraint . The two representations are connected through the transformation

| (5) | ||||

While the hyperspherical coordinate representation tends to be slightly more interpretable and directly reflects the true dimensionality of the embedding space, Cartesian coordinates often result in more compact expressions. For example the distance metric can be simply written as . Furthermore, the Cartesian coordinate representation will be key to the development of our computational approaches. Notation-wise, in the sequel, we adopt the convention of using Greek letters when representing points in through their angular representation, and using Roman letters when representing them through embedded Cartesian coordinates.

Lastly, we discuss the selection of the link function. must account for the fact that, when is used as embedding space and as the distance metric, the function introduced in (4) has as its range the interval . While various choices are possible, we opt to work with the cumulative distribution of a shifted and scaled beta distribution,

| (6) |

Note that , which controls the dispersion of the density associated with , plays an analogous role to the one that played in Section 2. In fact, note that

| (7) |

This observation, together with the fact that for small values of , make it clear that the projection of the likelihood of a spherical model in on its tangent bundle is very close to a version of the Euclidean factor model in described in (1) in which the link function is the probit link. We expand on this connection in Section 3.3

Finally, a short note on the connection between the likelihood functions associated with two models defined in and , respectively. Let and be (the angular representations of) two pairs of points in spherical spaces of dimension and , respectively. It is easy to verify that, when as well as and for all , then . Hence, the likelihood function of a spherical factor model in which the latent positions are embedded in and for which for all and , is identical to the likelihood function that would be obtained by directly fitting a model in which the latent positions are embedded in . This observation will play an important role in the next Section, which is focused on the defining prior distributions for the latent positions.

3.2 Prior distributions

As we discussed in the introduction, a key challenge involved in the implementation of the spherical factor models we just described is selecting priors for the latent positions and and . This challenge is specially daunting in settings where estimating the dimension of the latent space is of interest.

To illustrate the issues involved, consider the use of the von Mises–Fisher family as priors for the latent positions. The Hausdorff density with respect to the uniform measure on the sphere for the von Mises–Fisher distribution on is given by

where is the modified Bessel function of order , satisfies and represents the mean direction, and is a scalar precision parameter. The von Mises–Fisher distribution is a natural prior in this setting because it is the spherical analogue to the multivariate Gaussian distribution with diagonal, homoscedastic covariance matrix that is sometimes used as a prior for the latent positions in traditional factor models. Indeed, note that

We are interested in the behavior of the prior on

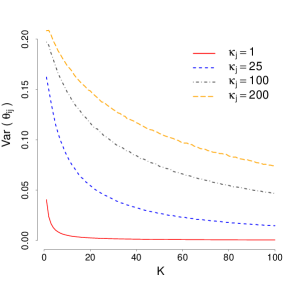

(recall Equation (4)) induced by a von Mises–Fisher prior with mean direction and precision for , and independent von Mises–Fisher priors with mean direction and precision for both and . Because, and are assigned the same prior distributions, it is clear from a simple symmetry argument that for all values of , and . On the other hand, Figure 1 shows the value as a function of the embedding dimension for various combinations of , and . Note that, in every case, it is apparent that , which implies that converges in distribution to a point mass at as the number of latent dimensions grows.

|

|

|

|

|

|---|---|---|---|

|

|

|

|

|

|

|

|

|

|

This behavior, which is clearly unappealing, is driven by two features. First, the von Mises–Fisher prior has a single scale parameter for all its dimensions. Secondly, the surface area of the dimensional sphere, , tends to zero as . In fact, not only the von Mises-Fisher distribution, but also other widely used distributions on the sphere such as the Fisher-Bingham and the Watson distributions suffer from the same drawback. Dryden (2005) proposes to overcome this phenomenon by scaling the (common) concentration parameter of the von Mises-Fisher according to the number of dimensions. However, this approach is unappealing in our setting for two reasons. First, it does not appear to overcome the degeneracy issues just discussed. Secondly, under this approach changes in would fundamentally change the nature and structure of the prior distribution. In the sequel, we discuss a novel class of flexible priors distributions on that allow for different scales across various dimensions and can be used to construct priors for that do not concentrate on a point mass as the number of dimensions increase.

We build our new class of prior distributions in terms of the vector of angles associated with the hyperspherical coordinate system. In particular, we assign each component of a (scaled) von Mises distribution centered at 0, so that

| (8) |

where is a vector of dimension-specific precisions. We call this prior the spherical von Mises distribution, and denote it by .

One key feature of this prior is that the parameters control the concentration of each marginal distribution around their mean. In particular, when , the marginal distribution of becomes a point mass at zero, and concentrates all its mass in a greater nested hypersphere of dimension (see Figure 2 for an illustration).

A second key feature of this class of priors is that

| (9) |

Somewhat informally, this means that for large values of the precision parameters, the prior behaves like a zero-mean multivariate normal distribution with covariance matrix .

Finally, we note that the density spherical von Mises distribution can be written in terms of the Cartesian coordinates associated with (recall Equation (5)). More specifically, the density of the Hausdorff measure with respect to the uniform distribution on the sphere associated with (8) is given by

| (10) |

See the online supplementary materials for the derivation.

Coming back to our spherical factor model, we use the spherical von Mises distribution as priors for the s, s and s. In particular, we set

| (11) | ||||

| (12) | ||||

| (13) |

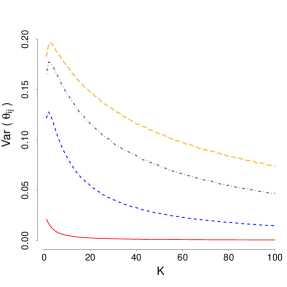

independently across all and . By setting up the priors on the latent positions to have increasing marginal precisions, we can avoid the degeneracy issues that arose in the case of the von Mises-Fisher priors. This is demonstrated in Figure 3, which shows the value of as function of the latent dimension for various combinations of , and under (11-13). Note that, in every case, the variance of the prior decreases slightly with the addition of the first few dimensions, but then seems to quickly stabilize around a constant that is determined by the value of the hyperparameters. Figure 4 explores in more detail the shape of the implied prior distribution on , as well as the effect of various hyperparameters. Note that the implied prior can either be unimodal (which typically happens for relatively large values and ), or trimodal (which happens for low values of and ). The intuition behind the formulation in Equations (11-13) comes from the fact that these polynomial rates ensures that converges to a constant with the number of dimensions under the Gaussian approximation in Equation (9).

|

|

|

|

|

|---|---|---|---|

|

|

|

|

|

|

|

|

|

|

In addition to avoiding degeneracy issues, using an increasing sequence for the marginal precision of all three sets of s, s and s means that the prior encourages the model to concentrate most of the mass on a embedded sub-sphere of (recall the discussion at the end of Section 3.1). Hence, we can think of as representing the highest intrinsic dimension allowed by our prior, and as the combination of and as controlling the “effective” prior dimension of the space, . Another implication of this prior structure is that we can think of our prior as encouraging a decomposition of the (spherical) variance that is reminiscent of the principal nested sphere analysis introduced in Jung et al. (2012).

3.3 Connection to the Euclidean model

In Section 3.1 we argued that the spherical model of dimension contains all other spherical models of lower dimension as special cases. Similarly, the Euclidean space of dimension includes all spherical spaces of dimension or lower. It is also true that the -dimensional Euclidean model discussed in Section 2 (with a probit link) can be seen as a limit case of our spherical model on .

The argument relies on projecting the spherical model onto the tangent bundle of the manifold, and making , and while keeping and constant for all . As mentioned in previous sections, under these circumstances, (i) the link function defined in Equation (6) projects onto the probit link function, (ii) the geodesic distance between the original latent positions converges to the Euclidean distance between their projections onto the tangent space, and (iii) the spherical von-Mises priors defined in Equations (11-13) project onto Gaussian priors.

This observation is important for at least three reasons. Firstly, it can guide prior elicitation, specially for the precision parameters and . Secondly, spherical models can be seen as interpolating (in terms of complexity) between Euclidean models of adjacent dimensions. In particular, note that the likelihood for Euclidean models involves parameters (since the s are not identifiable and typically fixed) while the likelihood of the spherical model relies on parameters, and finally, . Thirdly, it enhances the interpretability of the model. In particular, the reciprocal of the precision, can be interpreted as a measure of sphericity of the latent space that can be directly contrasted across datasets.

3.4 Parameter identifiability

The likelihood function for the spherical factor model discussed in Section 3.1 is invariant to simultaneous rotations of all latent positions. However, the structure of the priors in (11-13) induces weak identifiability for the s, s, and s in the posterior distribution. Reflections, which are not addressed by our prior formulation, can be accounted for by fixing the octant of the hypersphere to which a particular belongs. In our implementation, this constraint is enforced a posteriori by post-processing the samples generated by the Markov chain Monte Carlo algorithm described in Section 4. On the other hand, while the scale parameters are not identifiable in the Euclidean model, the analogous precisions are identifiable in the spherical model. This is because has finite measure, and therefore the likelihood is not invariant to rescalings of the latent positions. More generally, partial Procrustes analysis (which accounts for invariance to translations and rotations, but retains the scale) can be used to postprocess the samples to ensure identifiability.

3.5 Hyperpriors

Completing the specification of the model requires that we assign hyperpriors to , and . The precisions and are assigned independent Gamma distributions, and . Similarly, the concentration parameters associated with the link function are assumed to be conditionally independent and given a common prior, , where is in turn given a conditionally conjugate Gamma hyperprior, . The parameters , , , , , and for these hyperpriors are assigned to strongly favor configurations in which (which is consistent with the assumption that a Euclidean model is approximately correct), and so that the induced prior distribution on is, for large , close to a distribution (the proper, reference prior for the probability of a Bernoulli distribution). Various combinations of hyperparameters satisfy these requirements, most of which lead to priors on that are trimodal. We recommend picking one in which the hyperpriors are not very concentrated (e.g., see Figure 5) and studying the sensitivity of the analysis to the prior choice. In our experience, inferences tend to be robust to reasonable changes in the hyperparameters (see Section 5.1.1).

4 Computation

The posterior distribution for the spherical factor model is analytically intractable. Hence, inference for the model parameters is carried out using Markov chain Monte Carlo (MCMC) algorithms. The algorithm we propose is a hybrid that combines Gibbs sampling, random walk Metropolis-Hastings and Hamiltonian Monte Carlo steps to generate samples from the full conditional distributions of each parameter. The simplest steps correspond to sampling the parameters , , and . More specifically, we sample from its inverse-Gamma full conditional posterior distribution, and sample , and each of the s using random walk Metropolis Hastings with log-Gaussian proposals. The variance of the proposals for these steps are tuned so that the acceptance rate is roughly 40%. On the other hand, for sampling the latent positions we employ the Geodesic Hamiltonian Monte Carlo (GHMC) algorithm described in Byrne & Girolami (2013), which provides a scalable and efficient way to obtain samples from target distributions defined on manifolds that can be embedded in a Euclidean space.

As an example, consider the step associated with updating , the latent factor for individual . Denoting the associated coordinates in by (recall Equation (5)), the density of the Hausdorff measure associated with the full conditional posterior is given by

where . Then, given tuning parameters and , the GHMC step takes the form:

-

1.

Initialize , as well as the auxiliary momentum variable by sampling .

-

2.

Project the momentum onto the tangent space at by setting , and then set .

-

3.

For each of the leap steps:

-

(a)

Update the momentum by setting .

-

(b)

Project the momentum onto the tangent space at by setting , and then set the angular velocity of the geodesic flow .

-

(c)

Update and jointly according to the geodesic flow with step size of ,

-

(d)

Update .

-

(e)

Project the momentum onto the tangent space at by setting .

-

(a)

-

4.

Set the proposed values as and the proposed value is accepted with probability

The gradient of the logarithm of the Hausdorff measure may appear forbidding to derive. One practical difficulty is dealing with the spherical constraint . We discuss the calculation of the gradient in the online supplementary materials, where we also derive a recursive formula linking the gradient of the prior density in dimensions to that of the gradient in dimension . Detailed expressions for the Hausdorff measures associated with the full conditional posteriors of the s, s and s, as well as their corresponding gradients, can be found in the online supplementary materials. In our experiments, we periodically “jitter” the step sizes and the number of leap steps (e.g., see Gelman et al., 2013, pg. 306). The specific range in which and move for each (group of) parameter and each dataset is selected to target an average acceptance probability between 60% and 90% (Beskos et al., 2013; Betancourt et al., 2014).

Our implementation of the algorithm is available at https://github.com/forreviewonly/review/.

5 Illustrations

In this section, we illustrate the performance of the proposed model on both simulated and real data sets. In all of these analyses, the number of leaps used in the HMC steps is randomly selected from a discrete uniform distribution between 1 and 10 every 50 samples. Similarly, the leap sizes are drawn from uniform distribution on or for each , and from a uniform distribution on or for each and . All inference presented in this Section are based on 20,000 samples obtained after convergence of the Markov chain Monte Carlo algorithm. The length of the burn in period varied between 10,000 and 20,000 iterations depending on the dataset, with a median around 10,000. Convergence was checked by monitoring the value of the log-likelihood function, both through visual inspection of the trace plot, and by comparing multiple chains using the procedure in Gelman & Rubin (1992).

5.1 Simulation Study

We conducted a simulation study involving four distinct scenarios to evaluate our spherical model. For each scenario, we generate one data set consisting of items and subjects (similar in size to the roll call data from the U.S. Senate presented in Section 5.2). In our first three scenarios, the data is simulated from spherical factor models on , and , respectively. In all cases, the subject-specific latent positions, as well as the item-specific latent positions, are sampled from spherical von-Mises distributions where all component-wise precisions are equal to 2. Note that this data generation mechanism is slightly different from the model we fit to the data (in which the precision of the components increase with the index of the dimension). For the fourth scenario, data was simulated from a Euclidean probit model (recall Section 2) in which the intercepts , the discrimination parameters , and latent traits are sampled from standard Gaussian distributions.

In each scenario, both spherical and Euclidean probit factor models of varying dimension are fitted to the simulated datasets. The left column of Figure 6 shows the value of the Deviance Information Criteria (DIC) (Spiegelhalter et al., 2002, 2014; Gelman et al., 2014) as a function of the dimension of the fitted model’s latent space for each of the four scenarios in our simulation. In our models, the DIC is defined as:

where is the matrix of probabilities given by for the spherical model and for the Euclidean probit model, , and the expectations and variances are computed with respect to the posterior distribution of the parameters (which are in turn approximated from the samples generated by our Markov chain Monte Carlo algorithm). The first term in the DIC can be understood as a goodness-of-fit measure, while the second one can be interpreted as a measure of model complexity.

| In-sample | Principal nested | ||

|---|---|---|---|

| accuracy | sphere decomposition | ||

|

Scenario 1 () |

|

|

|

|

Scenario 2 () |

|

|

|

|

Scenario 3 ( |

|

|

|

|

Scenario 4 () |

|

|

|

From Figure 6, we can see that DIC is capable of identifying the presence of sphericity in the underlying latent space, as well as its correct dimension. In Scenarios 1 to 3, the optimal model under DIC is correctly identified as a spherical model with the right dimension. Furthermore, as we would expect, the optimal Euclidean model in each case has one additional dimension (i.e., the dimension of the space corresponds to the lowest-dimensional Euclidean space in which the true spherical latent space can be embedded). For Scenario 4, DIC correctly selects a Euclidean model in three dimensions, followed very closely by a spherical space of the same dimension. Again, these results match our previous discussion about the ability of a spherical model to approximate a Euclidean model.

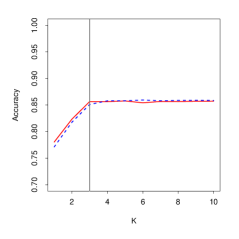

The second column of Figure 6 shows the in-sample predictive accuracy of the models in each scenario. This accuracy is closely linked to the goodness-of-fit component of the DIC. Note that, from the point of view of this metric, both the spherical and Euclidean models have about the same performance. Furthermore, in both cases, as the dimension increases, the predictive accuracy increases sharply at first, but quickly plateaus once we reach the right model dimension, generating a clear elbow in the graph. Similar elbows can be seen in the third column of Figure 6, which shows the amount of variance associated with each component of a principal nested spheres decomposition (Jung et al., 2012) of the subject’s latent positions for the . This decomposition can be interpreted as a version of principal component analysis for data on an spherical manifold. Note, however, that the elbow in Scenario 4 is much less sharp than the elbows we observed in Scenarios 1, 2 and 3.

To get a better sense of the relationship between the spherical and Euclidean models, Figure 7 presents histograms of the posterior samples for the hyperparameters , and of our spherical model for Scenario 2 (left column) and Scenario 4 (right column). We show these two scenarios because the true dimension of the latent space is 3 in both cases. Note that the posterior distributions of these hyperparameters concentrate on very different values depending on whether the truth corresponds to a Euclidean or a spherical model. Furthermore, in all cases the posterior distributions are clearly different from the priors.

| Scenario 2 () | Scenario 4 () | |

|---|---|---|

|

|

|

|

|

|

|

|

|

|

|

|

Finally, Figure 8 presents posterior estimates for the subject’s latent positions for Scenario 1. To facilitate comparisons between the truth and the estimates, we plot each dimension separately. While the first dimension seems to be reconstructed quite accurately (the coverage rate for the 95% credible intervals shown in the left panel is 98%, with an approximate 95% confidence interval of ), the reconstruction of the second component is less so (the coverage in this case is 68%, with an approximate 95% confidence interval of ).

5.1.1 Sensitivity analysis

To assess the sensitivity of our estimates to the values of the hyperparameters, we repeated our analysis using a different prior specification in which , and . The induced prior on for can be seen in Figure 9. (Note the very different shape when compared with Figure 5.)

We present here details only for Scenario 1; the results for the other 3 scenarios are very similar. Similarly to Figure 6, Figure 10 presents the value of the DIC, the in-sample accuracy and the principal nested sphere decomposition associated under both the original and the alternative priors. The main difference we observe is in the values of the DIC, with the alternative prior tending to give higher plausibility to models dimension 5 and above.

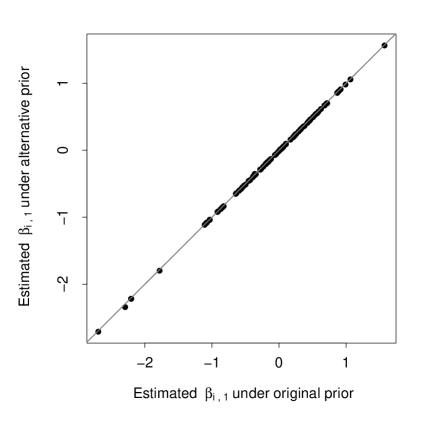

Furthermore, Figure 11 shows histograms of the posterior distributions for the hyperparameters , and under our two priors. The posterior distribution of these parameters appear to be very similar, suggesting robustness to this prior change. Finally, Figure 12 presents the posterior means of the subject’s latent positions under our alternative prior. Note that the point estimates are nearly identical under both priors.

| Original prior | Alternative prior | |

|---|---|---|

|

|

|

|

|

|

|

|

|

|

|

|

5.2 Roll call voting in the U.S. Senate

Factor models are widely used for the analysis of voting records from deliberative bodies (e.g., see Jackman, 2001, Clinton et al., 2004 and Bafumi et al., 2005). In this context, the embedding space provides an abstract representation for the different policy positions, and legislator-specific latent traits are interpreted as their revealed preferences over one or more policy dimensions (e.g., economic vs. social issues in a two dimensional setting, see Moser et al., 2020) or as their ideological preferences in a liberal-conservative scale (in one-dimensional settings, see for example Jessee, 2012).

While Euclidean policy spaces often provide an appropriate description of legislator’s decision-making process, they might not be appropriate in all settings. The idea that spherical policy spaces might be appealing alternatives to Euclidean ones dates back at least to Weisberg (1974), who provides a number of examples and notes that “circular shapes may be expected for alliance structures and for vote coalitions where extremists of the left and right coalesce for particular purposes”. Spherical policy spaces also provide a mechanism to operationalize the so-called “horseshoe theory” (Pierre, 2002; Taylor, 2006, pg. 118), which asserts that the far-left and the far-right are closer to each other than they are to the political center, in an analogous way to how the opposite ends of a horseshoe are close to each other.

In this Section we use our spherical factor models to investigate the geometry of policy spaces in two different U.S. Senates, the 102nd (which met between January 3, 1991 and January 3, 1993, during the last two years of George H. W. Bush’s presidency) and the 115th (which met between January 3, 2017 and January 3, 2019, during the first two years of Donald Trump’s presidency). The data we analyze consists of the record of roll call votes, and it is publicly available from https://voteview.com/. Before presenting our results, we provide some additional background into the data and the application. Roll call votes are those in which each legislators votes ”yea” or ”nay” as their names are called by the clerk, so that the names of senators voting on each side are recorded. It is important to note that not all votes fall into this category. Voice votes (in which the position of individual legislator are not recorded) are fairly common. This means that roll call votes represent a biased sample from which to infer legislator’s preferences. Nonetheless, roll call data is widely used in political science. The U.S. Senate is composed of two representatives from each of the 50 States in the Union. The total number of Senators included in each raw dataset might be slightly higher because of vacancies, which are typically filled as they arise. While turnover in membership is typically quite low, these changes lead to voting records with (ignorable) missing values on votes that came up during the period in which a Senator was not part of the chamber. Additionally, missing values can also occur because of temporary absences from the chamber, or because of explicit or implicit abstentions. As is common practice, we exclude from our analysis any senators that missed more than 40% of the votes cast during a given session, which substantially reduces the number missing values. The remaining ones, which are a small percentage of the total number of votes, were treated in our analysis as if missing completely at random. While this assumption is not fully supported by empirical evidence (e.g., see Rodríguez & Moser, 2015), it is extremely common in applications. Furthermore, the relatively low frequency of missing values in these datasets suggests that deviations from it will likely have a limited impact in our results. See table 1 for a summary of the features of the two post processed datasets.

| Session | Senators () | Measures () | Missing votes |

|---|---|---|---|

| 102nd | 100 | 550 | 2222 (4.04%) |

| 115th | 96 | 599 | 1236 (2.15%) |

As in Section 5.1, we fit both Euclidean and spherical factor models of varying dimensions to each of these two datasets. The left column of Figure 13 shows the DIC values associated with these models. For the 102nd Senate, we can see that a Euclidean model of dimension 3 seems to provide the best fit overall while, among the spherical models, a model of dimension 3 also seems to outperform. On the other hand, for the 115th Senate, a two-dimensional spherical model seems to provide the best fit to the data, closely followed by a circular (one-dimensional spherical) model. The right column of Figure 13 presents the principal nested sphere decomposition associated with the posterior mean configuration estimated by an eight-dimensional spherical model on each of the two Senates. The differences are substantial. In the case of the 115th Senate (for which DIC suggests that the geometry of the latent space is indeed spherical), the first component of the decomposition explains more than 95% of the variability in the latent space, and the first three components explain close to 99%. On the other hand, for the 102nd Senate, the first three spherical dimensions explain less than 70% of the total variability.

| Principal nested | ||

|---|---|---|

| sphere decomposition | ||

|

102nd Senate |

|

|

|

115th Senate |

|

|

At a high level, these results are consistent with the understanding that scholars of American politics have of contemporaneous congressional voting patterns. While congressional voting in the U.S. has tended to be at least two-dimensional for most of its history (with one dimension roughly aligning with economic issues, and the other(s) corresponding to a combination of various social and cultural issues), there is clear evidence that it has become more and more unidimensional over the last 40 years (e.g., see Poole & Rosenthal, 2000). Increasing polarization has also been well documented in the literature (e.g., see Jessee, 2012 and Hare & Poole, 2014). The rise of extreme factions within both the Republican and Democratic parties willing to vote against their more mainstream colleagues, however, is a relatively new phenomenon that is just starting to be documented (see Ragusa & Gaspar, 2016, Lewis, 2019a, Lewis, 2019b) and can explain the dominance of the spherical model for the 115th Senate voting data.

Figure 14 presents histograms of the posterior samples for the hyperparameters , and for each of the two Senates. Note that, as with the simulations, the posterior distributions differ substantially from the priors. Furthermore, the model prefers relatively smaller values of and relatively larges values of and for the 115th Senate when compared with the 102nd.

| 102nd Senate | 115th Senate | |

|---|---|---|

|

|

|

|

|

|

|

|

|

|

|

|

To conclude this Section, we focus our attention on the one-dimensional versions of the Euclidean and spherical models. While one-dimensional models are not preferred by the DIC criteria in our datasets, researchers often use them in practice because the resulting ranking of legislators often reflects their ordering in a liberal-conservative (left-right) scale. Figure 15 compares the median rank order of legislators estimated under the one-dimensional Euclidean and one-dimensional spherical (circular) models for the 102nd (left panel) and the 115th (right panel) Senates. To generate the ranks under the circular model, we “unwrap” the circle and compute the ranks on the basis of the associated angles (which live in the interval ). For the 102nd Senate (where DIC would prefer the one-dimensional Euclidean model over the circular model), the median ranks of legislators under both models are very similar. This result provides support to our argument that the spherical model can provide a very good approximation to the Euclidean model when there is no evidence of sphericity in the data. On the other hand, for the 115th (where the circular model would be preferred over the one-dimensional Euclidean model), the ranking of Republican legislators differs substantially between both models. Digging a little bit deeper, we can see that the five legislators for which the ranks differ the most are Rand Paul (KY), Mike Lee (UT), Jeff Flake (AZ), Bob Corker (TN) and John Neely Kennedy (LA), all known for being hard line conservatives, but also for bucking their party and voting with (left wing) Democrats on some specific issues.

6 Discussion

We have developed a new flexible class of factor analysis models for multivariate binary data that embeds the observations on spherical latent spaces. The results from our illustrations suggest that (i) it is possible to distinguish between different geometries and dimensions for the embedding space, (ii) our model can closely approximate traditional factor models when the latent space is Euclidean, (iii) the use spherical latent spaces can be justified, both theoretically and empirically, in applications related to choice models.

The assumption that of a spherical latent space might not be appropriate in every application. For example, we find it hard to justify their use in settings such as educational testing. However, we believe that this class of models can have broader applications beyond the analysis of roll call data discussed in Section 5.2. One such area of application is marketing, where choice models are widely used to understand consumer behavior. In this context, spherical models could serve to explain the apparent lack of transitivity of preferences that is sometimes present in real marketing data.

While the focus of this paper has been on latent spherical spaces, it is clear that our approach can be extended to other classes of embedding manifolds. The main challenge associated with this kind of extension is the construction of prior distributions, specially if we aim to estimate the intrinsic dimension of the space. Similarly, this class of models can be extended beyond binary data to nominal and categorical observations using similar random utility formulations. This is ongoing work in our group.

7 Supplementary material

7.1 Stability of priors in the Euclidean factor model

Let , , , and . Because is the sum of random variables with finite second moments, a simple application of the central limit theorem indicates that converges in distribution to standard normal distribution as . Now, note that by construction, and that,

As a consequence, converges in distribution to a uniform distribution in .

7.2 Derivation of the gradient of log prior and log Jacobian

The gradient with respect to the log of Hausdorff measure may appear forbidding to derive, especially the prior component of the full conditional because the expression of the gradient changes with the predetermined maximum dimension. Hence, we develop an automatic method to facilitate the computation of the gradient. Interestingly, the GHMC algorithm projects all the gradient to the tangent space through the momentum update, which allows the gradient computed with or without the constraint to be the same. Nevertheless, it is generally recommended to derive the gradients under the unit norm constraint since they are invariant to the reparameterization with respect to the constraint.

| (14) |

where , and is the gradient of log-likelihood, log of prior and log of Jacobian respectively with respect to the Hausdorff measure. For , the model becomes the circular factor model and we focus on the derivation for in this paper. Let be the independent random variable and be the dependent random variable. As a result, the gradient associated with the last dimension is 0. The first components of the gradient from the prior and Jacobian are shown as follows,

| (15) |

where is an upper triangular matrix of size without the first columns and all non-zero entries are 1. As a special case when , becomes a 0 matrix and becomes scalar 1. Once the maximum dimension is specified, , can be generated and all the gradients can then be vectored easily during the implementation without explicitly deriving the expression for different maximum dimension. Recall that, and share the same prior and hence the corresponding gradient expression will also be the same.

7.3 Hausdorff measure used in the Geometric Hamiltonian Monte Carlo and their gradients

7.3.1 Hausdorff measure

Using the hyperspherical coordinates in (5) from the main paper, we can express our prior proposed prior in terms of Hausdorff measure,

7.3.2 Gradients of the loglikelihood

Recall from Section 4 from the main paper, the gradients with respect to the Hausdorff measure for are derived under the unit norm constraints in which their first components are independent variables while the last dimension is dependent. Therefore the gradient for the last dimension is always 0 and the gradients shown in this Section are for the first dimension.

The gradient of log conditional density of under the Hausdorff measure is,

where is defined in (15). The density of the associated Hausdorff measure with respect to the likelihood component is given by

where Hence, the gradient of the likelihood under the Hausdorff measure is simply

where

Next the gradient of log conditional density of under the Hausdorff measure is,

where is defined in (15). The density of the associated Hausdorff measure with respect to the likelihood component is given by

where Hence, the gradient of the likelihood under the Hausdorff measure is simply

where

Lastly the gradient of log conditional density of under the Hausdorff measure is,

where is defined in (15). The density of the associated Hausdorff measure with respect to the likelihood component is given by

where Hence, the gradient of the likelihood under the Hausdorff measure is simply

where

References

- Albert & Chib (1993) Albert, J. H. & Chib, S. (1993). Bayesian analysis of binary and polychotomous response data. Journal of the American statistical Association 88, 669–679.

- Ansari & Jedidi (2000) Ansari, A. & Jedidi, K. (2000). Bayesian factor analysis for multilevel binary observations. Psychometrika 65, 475–496.

- Bafumi et al. (2005) Bafumi, J., Gelman, A., Park, D. K. & Kaplan, N. (2005). Practical issues in implementing and understanding bayesian ideal point estimation. Political Analysis 13, 171–87.

- Bartholomew (1984) Bartholomew, D. J. (1984). Scaling binary data using a factor model. Journal of the Royal Statistical Society: Series B (Methodological) 46, 120–123.

- Bartlett (1957) Bartlett, M. S. (1957). Comment on ‘a statistical paradox’by dv lindley. Biometrika 44, 533–534.

- Belkin & Niyogi (2002) Belkin, M. & Niyogi, P. (2002). Laplacian eigenmaps and spectral techniques for embedding and clustering. In Advances in neural information processing systems, pp. 585–591.

- Beskos et al. (2013) Beskos, A., Pillai, N., Roberts, G., Sanz-Serna, J.-M., Stuart, A. et al. (2013). Optimal tuning of the hybrid monte carlo algorithm. Bernoulli 19, 1501–1534.

- Betancourt et al. (2014) Betancourt, M., Byrne, S. & Girolami, M. (2014). Optimizing the integrator step size for hamiltonian monte carlo. arXiv preprint arXiv:1411.6669 .

- Byrne & Girolami (2013) Byrne, S. & Girolami, M. (2013). Geodesic monte carlo on embedded manifolds. Scandinavian Journal of Statistics 40, 825–845.

- Child (2006) Child, D. (2006). The essentials of factor analysis. Bloomsbury Academic, 3rd edition.

- Clinton et al. (2004) Clinton, J., Jackman, S. & Rivers, D. (2004). The statistical analysis of roll call data. American Political Science Review 98, 355–370.

- Davidson et al. (2018) Davidson, T. R., Falorsi, L., De Cao, N., Kipf, T. & Tomczak, J. M. (2018). Hyperspherical variational auto-encoders. arXiv preprint arXiv:1804.00891 .

- Dryden (2005) Dryden, I. L. (2005). Statistical analysis on high-dimensional spheres and shape spaces. Ann. Statist. 33, 1643–1665. doi:10.1214/009053605000000264.

- Fletcher et al. (2004) Fletcher, P. T., Lu, C., Pizer, S. M. & Joshi, S. (2004). Principal geodesic analysis for the study of nonlinear statistics of shape. IEEE transactions on medical imaging 23, 995–1005.

- Gelman et al. (2013) Gelman, A., Carlin, J. B., Stern, H. S., Dunson, D. B., Vehtari, A. & Rubin, D. B. (2013). Bayesian data analysis. CRC press.

- Gelman et al. (2014) Gelman, A., Hwang, J. & Vehtari, A. (2014). Understanding predictive information criteria for Bayesian models. Statistics and Computing 24, 997–1016.

- Gelman & Rubin (1992) Gelman, A. & Rubin, D. (1992). Inferences from iterative simulation using multiple sequences. Statistical Science 7, 457–472.

- Geweke & Singleton (1981) Geweke, J. F. & Singleton, K. J. (1981). Maximum likelihood” confirmatory” factor analysis of economic time series. International Economic Review pp. 37–54.

- Griffiths & Ghahramani (2011) Griffiths, T. L. & Ghahramani, Z. (2011). The indian buffet process: An introduction and review. Journal of Machine Learning Research 12.

- Hare & Poole (2014) Hare, C. & Poole, K. T. (2014). The polarization of contemporary american politics. Polity 46, 411–429.

- Hoff et al. (2002) Hoff, P. D., Raftery, A. E. & Handcock, M. S. (2002). Latent space approaches to social network analysis. Journal of the american Statistical association 97, 1090–1098.

- Jackman (2001) Jackman, S. (2001). Multidimensional analysis of roll call data via bayesian simulation: Identification, estimation, inference, and model checking. Political Analysis 9, 227–241.

- Jessee (2012) Jessee, S. A. (2012). Ideology and spatial voting in American elections. Cambridge University Press.

- Jung et al. (2012) Jung, S., Dryden, I. L. & Marron, J. (2012). Analysis of principal nested spheres. Biometrika 99, 551–568.

- Kingma et al. (2019) Kingma, D. P., Welling, M. et al. (2019). An introduction to variational autoencoders. Foundations and Trends® in Machine Learning 12, 307–392.

- Kramer (1991) Kramer, M. A. (1991). Nonlinear principal component analysis using autoassociative neural networks. AIChE journal 37, 233–243.

- Lawrence (2005) Lawrence, N. (2005). Probabilistic non-linear principal component analysis with gaussian process latent variable models. Journal of machine learning research 6, 1783–1816.

- Legler et al. (1997) Legler, J. M., Ryan, L. M. & Ryan, L. M. (1997). Latent variable models for teratogenesis using multiple binary outcomes. Journal of the American Statistical Association 92, 13–20.

- Lewis (2019a) Lewis, J. (2019a). Why are Ocasio-Cortez, Omar, Pressley, and Talib estimated to be moderates by NOMINATE? https://voteview.com/articles/Ocasio-Cortez_Omar_Pressley_Tlaib.

- Lewis (2019b) Lewis, J. (2019b). Why is Alexandria Ocasio-Cortez estimated to be a moderate by NOMINATE? https://voteview.com/articles/ocasio_cortez.

- Liu & Wu (1999) Liu, J. S. & Wu, Y. N. (1999). Parameter expansion for data augmentation. Journal of the American Statistical Association 94, 1264–1274.

- Marschak (1960) Marschak, J. (1960). Binary-choice constraints and random utility indicators. In Stanford Symposium on Mathematical Methods in the Social Sciences. Stanford, CA: Stanford University Press.

- McFadden (1973) McFadden, D. (1973). Conditional logit analysis of qualitative choice behavior. In Frontiers of Economics, Ed. P. Zarembka, pp. 105–142. Institute of Urban and Regional Development, University of California.

- Moser et al. (2020) Moser, S., Rodriguez, A. & Lofland, C. L. (2020). Multiple ideal points: Revealed preferences in different domains. Political Analysis Forthcoming.

- Mulaik (2009) Mulaik, S. A. (2009). Foundations of factor analysis. CRC press, 2nd edition.

- Pierre (2002) Pierre, F. J. (2002). Le siècle des idéologies.

- Poole & Rosenthal (2000) Poole, K. T. & Rosenthal, H. (2000). Congress: A political-economic history of roll call voting. Oxford University Press on Demand.

- Ragusa & Gaspar (2016) Ragusa, J. M. & Gaspar, A. (2016). Where’s the tea party? an examination of the tea party’s voting behavior in the house of representatives. Political Research Quarterly 69, 361–372.

- Rodríguez & Moser (2015) Rodríguez, A. & Moser, S. (2015). Measuring and accounting for strategic abstentions in the us senate, 1989–2012. Journal of the Royal Statistical Society: Series C (Applied Statistics) 64, 779–797.

- Roweis & Saul (2000) Roweis, S. T. & Saul, L. K. (2000). Nonlinear dimensionality reduction by locally linear embedding. science 290, 2323–2326.

- Rubin & Thomas (2001) Rubin, D. B. & Thomas, N. (2001). Using parameter expansion to improve the performance of the em algorithm for multidimensional irt population-survey models. In Essays on item response theory, pp. 193–204. Springer.

- Sommer et al. (2010) Sommer, S., Lauze, F. & Nielsen, M. (2010). The differential of the exponential map, jacobi fields and exact principal geodesic analysis. CoRR, abs/1008.1902 .

- Spiegelhalter et al. (2014) Spiegelhalter, D. J., Best, N. G., Carlin, B. P. & Van der Linde, A. (2014). The deviance information criterion: 12 years on. Journal of the Royal Statistical Society: Series B (Statistical Methodology) 76, 485–493.

- Spiegelhalter et al. (2002) Spiegelhalter, D. J., Best, N. G., Carlin, B. P. & Van Der Linde, A. (2002). Bayesian measures of model complexity and fit. Journal of the royal statistical society: Series b (statistical methodology) 64, 583–639.

- Taylor (2006) Taylor, J. (2006). Where did the party go?: William Jennings Bryan, Hubert Humphrey, and the Jeffersonian legacy. University of Missouri Press.

- Tenenbaum et al. (2000) Tenenbaum, J. B., De Silva, V. & Langford, J. C. (2000). A global geometric framework for nonlinear dimensionality reduction. Science 290, 2319–2323.

- Weisberg (1974) Weisberg, H. F. (1974). Dimensionland: An excursion into spaces. American Journal of Political Science pp. 743–776.

- Xu & Durrett (2018) Xu, J. & Durrett, G. (2018). Spherical latent spaces for stable variational autoencoders. arXiv preprint arXiv:1808.10805 .

- Zhang & Fletcher (2013) Zhang, M. & Fletcher, T. (2013). Probabilistic principal geodesic analysis. In Advances in Neural Information Processing Systems 26, Eds. C. J. C. Burges, L. Bottou, M. Welling, Z. Ghahramani & K. Q. Weinberger, pp. 1178–1186. Curran Associates, Inc.

- Zhang & Zha (2004) Zhang, Z. & Zha, H. (2004). Principal manifolds and nonlinear dimensionality reduction via tangent space alignment. SIAM Journal on Scientific Computing pp. 313–338.