3D Pose Estimation and Future Motion Prediction from 2D Images

Abstract

This paper considers to jointly tackle the highly correlated tasks of estimating 3D human body poses and predicting future 3D motions from RGB image sequences. Based on Lie algebra pose representation, a novel self-projection mechanism is proposed that naturally preserves human motion kinematics. This is further facilitated by a sequence-to-sequence multi-task architecture based on an encoder-decoder topology, which enables us to tap into the common ground shared by both tasks. Finally, a global refinement module is proposed to boost the performance of our framework. The effectiveness of our approach, called PoseMoNet, is demonstrated by ablation tests and empirical evaluations on Human3.6M and HumanEva-I benchmark, where competitive performance is obtained comparing to the state-of-the-arts.

keywords:

pose estimation , motion prediction , multitask learning0.8pt

1 Introduction

Obtaining three-dimensional human poses from monocular image data is a fundamental yet challenging problem in computer vision. In many recent efforts [1, 2, 3, 4], 3D human pose estimation has been decomposed into a two-stage process: first, the 2D keypoints that correspond to the body joints are detected from the 2D image, after which the detected joints are lifted to obtain 3D pose. This type of solution is elegant in terms of the simplicity of problem formulation, unfortunately it suffers from inherent ambiguities caused by projection: different 3D poses can share the same 2D pose projection given a specific viewpoint; that is, the mapping between the 2D joints detection and 3D pose is not bijective. To resolve this ambiguity of 3D pose estimation from a monocular image, video-based pose estimation is also investigated in the literature [5, 6]. Existing video-based pose estimation methods, however, either need to observe a relatively long history (243 frames [5] or can only handle a short video sequence (4-6 frames [6]) to achieve their best results.

In the meantime, there has been a growing stream of research endeavours on the closely related problem of future motion prediction: by observing a past sequence of 3D poses, the future 3D motion could be generated [7, 8, 9, 10]. However, the requirement of past 3D pose sequence could be problematic: the MoCap data can be obtained relatively easily in a controlled lab environment but it is nearly infeasible to be captured in the wild at a reasonable cost. Besides, in predicting the future motion, motion prediction takes as input a short history of 3D pose sequence, which, in a sense, could be considered as a generalization of video-based pose estimation, where instead of current poses, the output here is future poses.

Motivated by these observations, in this paper, we propose an approach to jointly address the closely related problems of inferring 3D human poses and predicting future motions from an RGB video. It not only allows us to tap into the common ground of the human motion dynamics shared by both problems but also facilitates the removal of the unnecessary MoCap constraints from the problem of future 3D motion prediction. A sequence-to-sequence multi-task approach is proposed to exploit the benefits of pose estimation from RGB video and future motion prediction from a partial RGB image sequence. A simple yet effective encoder-decoder framework back-boned by Recurrent Neural Networks (RNNs) called PoseMoNet is thus proposed to tackle both problems. As depicted in Fig. 1, it contains four major components: (1) a pre-trained 2D keypoint detector is used to detect the 2D pose of a human for each of the input video frame; (2) a Pose Lifting Network (PLN) maps a 2D pose sequence to 3D pose sequence and encodes the motion dynamics in a latent representation; (3) the latent representation and a predicted seed pose from the last observed time step are passed to the Motion Generation Network (MGN) to predict future motions; and (4) a global trajectory refinement module will further polish the entire trajectory estimated by both the PLN and the MGN. While in existing encoder-decoder architectures for motion prediction [8, 9, 11, 10], the role of the encoder is to extract temporal motion dynamic from past 3D pose sequence to a latent representation and the decoder will then derive the future motion from it, our PLN encoder only requires 2D pose sequence as input. In short, our PLN tries to estimate the corresponding 3D pose sequence from the given 2D pose sequence as well as extract and compress the motion dynamics. It is worth mentioning that although the deep learning methods can generate future dynamics and achieve decent results on quantitative metrics, studies [9, 11] have shown the generated future motion tends to converge to a mean pose.

A stronger evidence[11] reveals that, quantitatively, predicting future motion as with a zero-velocity baseline can beat many well-trained deep learning methods. In other words, the trained model, instead of being trained to really understand human dynamics and predict the future motion, is actually overfitted to the quantitative metric. We address this issue by adopting a Lie algebra-based pose representation and propose a self-projection mechanism that encourages the generated future motion to be more realistic and dynamic.

Our work is motivated and inspired by existing literature [2, 9, 11, 10] but different from them. First, none of the current literature attempts to handle 3D pose sequence estimation and future motion prediction simultaneously. We utilize this multitask setting and shows that by pairing future motion prediction with 3D pose sequence estimation, the learning system gains robustness and achieves better performance with smaller joints error on both tasks. For the future motion prediction task, our proposed framework eliminates the requirement of the ground truth past 3D pose sequence and the input of a ground truth seed pose, where only the 2D video sequence is leveraged instead. Furthermore, our framework shows that it is unnecessary to involve past 3D pose sequences to generate the future as it can be achieved from 2D pose sequence input by our pose estimation task.

Our main contributions are summarized below:

-

1.

To our best knowledge, this is the first work to jointly tackle the closely related two problems of 3D pose sequence estimation and future motion prediction. Given a short video sequence as an initial part of a motion sequence, our approach, PoseMoNet, estimates not only the poses of these existing frames but also those of future frames to complete the entire motion dynamics.

-

2.

Based on Lie algebra pose representation, a sequence-to-sequence multi-task framework is proposed to address this relatively new problem. Empirically, it is demonstrated to be capable of predicting future motion dynamics faithfully, as well as maintaining a competitive estimation of 3D poses from a partial sequence of RGB images.

2 Related Work

2.1 3D Pose Estimation

3D human pose estimation research is affected by deep learning significantly in recent years where conventional methods [12, 13] are overtaken by deep learning methods. As the 2D human pose estimation results are progressively improved, researchers have also started to use detected 2D keypoints as an intermediate for 3D human pose estimation. Many works try to build algorithms that map the 2D keypoints to a 3D pose, such as using the nearest neighbor search [3], or building a parametrized method with neural networks to approximate it [2]. However, body parts occlusion is a critical issue for these types of 2D-to-3D methods, [14] proposed to use multi-scale heatmaps together with an occlusion augmentation module to further resolve the occlusion problem. The graphical structure of human pose is also leveraged by novel graph convolutional network methods. In [15], the authors further inject the graph structure into a GAN-based human pose estimation model to capture the relationship of body joints. Pose Graph Convolutional Network (PGCN) [16] is introduced to exploit structural relationships between body key points to further improve the localization performance for pose estimation. A similar idea is examined from a different perspective in [17], joint interdependency is leveraged to estimate 3D joint position from RGB images by incorporating structural relationships of the human body, and a progressively designed propagating LSTM framework is used to tackle the pose estimation stage by stage. In [18, 19], weakly-supervised methods are proposed to leverage both synthetic data and weakly-labeled real-world data, where the depth-aid regularizer provides weak supervision for 3D pose estimation without any costly 3D annotation required. Further improvements are investigated by introducing the temporal dimension at the input. A sequence of 2D keypoints are used to provide smoother spatial constraints where [6] leverages recurrent models and [5] built a 1D convolution model with optional temporal dilation. A grounded spatial-temporal learning framework was proposed in [20] to leverage both the temporal context in the video sequence and the spatial information in the graph-based skeleton. In the light of exploring spatial-temporal learning, [21] use the entire video as the context for predicting the bone direction along with a consistent bone length across the entire video. [22] proposed a multi-step refinement and estimation framework that refines the 2D input keypoint sequence and then concurrently considering the structure of 2D inputs and 3D outputs. Interestingly, another family of methods [23, 24, 25] train deep models to directly model the human shape represented by SMPL [26] and perform reverse inference from the shape to the corresponding pose.

2.2 Human Motion Prediction

The essence of motion prediction or generation can be thought of as a sequence generation task. Conventional approaches leveraged Gaussian processes [27] and hidden Markov models [28] to learn the representation of human motion. With the recent release of several large-scale motion capture (MoCap) datasets [29, 30], most of the recent research efforts involved deep models, more specifically, recurrent neural networks. Encoder-Recurrent-Decoder (ERD) [10] can be considered as one of the earliest attempts to leverage the superior learning power with deep models for human motion prediction. A residual Gated Rectified Unit network for generating future human dynamics is proposed in [11], as well as a zero-velocity baseline that outperforms previous works. Interestingly, the zero-velocity baseline outperforms [10] by simply predicting the last observed pose as the result. Structural-RNN [31] considers the body parts of humans and the object in the scene from a graph structure perspective though it requires human intervention to design such graph structures. Generative adversarial networks are employed and both the geometric property of articulated objects and adversarial learning concept are utilized to further push the quantitative results for predicting long-term human motion [8]. The only drawback is the involvement of the classifier (discriminator) make the training process more sensitive and complicated. Instead of using a recurrent architecture to model the motion context, a modified highway unit is utilized in [32]. And a hierarchical motion context network combined with a Lie algebra pose representation similar to [33] is introduced in [9]. Furthermore, human dynamics can be learned by working directly in the trajectory space, instead of the traditionally used pose space [34], by using discrete cosine transform. With recent advances of the attention mechanism, in [35], an attention-based feed-forward network is proposed to accommodate the observation that human motion tends to repeat itself. [36] applies a transformer-based architecture with the global attention mechanism as well as proposing a memory-based dictionary which help to preserve the global motion pattern.

2.3 Multitask Learning

Popular deep learning methods are usually built for a single particular task. However, potential benefits may be obtained through a multitask learning (MTL) setting on related tasks. Early works have shown that MTL is intuitively plausible to obtain inductive bias by including implicit data augmentation, regularization, and representation bias [37]. Common MTL methods with deep learning usually follow different patterns, such as joint learning (parameter sharing) and learning with auxiliary tasks. Parameter sharing usually has two forms: hard sharing and soft sharing. Hard sharing comes with the form of using task-specific output layers while sharing the layers before task-specific layers among all tasks [37]. Soft sharing usually means most of the layers in the neural networks are jointly trained together under the supervision from all tasks [38].

In the field of computer vision, significant efforts are put into leveraging MTL or joint learning. An Identity-Aware CycleGAN (IACycleGAN) model that applies a new perceptual loss to supervise the image generation network is introduced for both face photo-sketch synthesis and recognition [39]. It is reasonable to improve the super-resolution performance on both the color and the depth image by incorporate mutual information shared by them together with a GAN-based architectural design [40]. A 2D/3D multitask system is proposed in [41] where uses the pose estimation part as the primary tasks and jointly leverage the learned features for fine-grained action recognition. The idea of MTL seems to be effectively involved in existing works with an encoder-decoder pattern for different types of computer vision tasks. Face alignment in the video is tackled with a recurrent encoder-decoder architecture in [42], where the proposed framework predicts 2D facial keypoint maps and also a constrained shape response map. In [43], the authors proposed a multi-purpose framework for image-to-video translation, and a recurrent encoder-decoder architecture is used for face alignment as well as generating future motion masks. These works also motivate us to explore the possibility of applying this pattern for 3D human pose estimation though they are not performing the 3D human pose estimation task itself.

In particular, MTL also motivates the literature of pose estimation. A pose grammar mechanism that encodes kinematics, symmetry, and motor coordination, is proposed to tackle the problem of 3D human pose estimation [44]. Jointly learning a head pose estimation network by leveraging a coarse-to-fine network is efficient and the synthetic data is involved in training [45]. Body part segmentation can also provide extra information for accurately estimated the pose consider that joints should not be predicted outside the body part and particular joints can only be located in specific body parts [46].

3 Methodology

An overview of the proposed architecture is shown in Figure 1. There are 4 major components in our framework: (1) a 2D keypoint detector network, where we directly incorporate the stacked hourglass model [47] to acquire 2D pose sequence as input to the encoder from video frames. (2) the encoder: a Pose Lifting Network (PLN) for 3D pose sequence estimation; (3) the decoder: a Motion Generation Network (MGN) for predicting future motion; (4) a Global Refinement (GR) module for further polishing the entire pose trajectory which contains the estimated 3D pose sequence and future motion sequence. In addition, both the PLN and the MGN contain a novel Self-Projection (SP) layer.

The input to the framework is a sequence of RGB video frames, denoted as . These observed video frames are then fed to a 2D pose detector network to obtain a sequence of 2D keypoints in the form of normalized image coordinate. In this work, we use a 16-joint human skeleton model. The 2D pose sequence is then passed to the Pose Lifting Network (PLN) that lifts the 2D pose sequence to its predicted corresponding 3D representations. A Motion Generation Network (MGN) that uses the last predicted 3D pose as the seed pose and the latent representation from the PLN to predict future poses. Finally, a global refinement (GR) module is applied over the entire predicted sequence, including both the predicted 3D pose and the 3D future motion. Furthermore, we consider the commonly used 3D coordinate representation as a good baseline though it suffers from the problem of lacking physical constraints. In addition, a Lie algebra representation is used as to provide more grounded physical constraints, especially the 3D ground truth past sequence is not used in our work as the common 3D future motion prediction. Therefore, at each time step, two 3D poses are estimated (applied for both PLN and MGN).

In the rest of this section, we first introduce two pose representations we leveraged throughout our work, followed by a detailed demonstration of the architecture of our approach, PoseMoNet, as shown in Figure 4. Finally, we conclude this section with a brief analysis of the multitask foundation of our work.

3.1 Pose Representation

The coordinate-based pose representation is straightforward. It is the relative position of all joints with respect to the hip joint under a specified camera coordinate system, as shown in Figure 2 (left). However, this type of representation contains no physical constraints and no other information such as rotation except for the spatial position of joints. If this is unclear to you, imagine to rotate your wrist and you should be able to notice that although those are different poses, the relative position of your wrist to other joints remains the same as before.

Based on the above observation, we introduce a Lie algebra pose representation that utilizes the theory of Lie groups [33, 9]. The human skeleton structure makes humans articulated objects, which can be easily characterized as a kinematic tree of rigid bones connected by joints. A kinematic chain forms a model for the skeletal structure, which can be considered as an assembly of bones connected and constrained by the bones. The relative geometry of the successive bones, and , can be represented by a point in the Special Euclidean group .

We use the example shown in Figure 2 (right) to illustrate the setup. For the bone contains two joints, i and j, denoted as , a local coordinate system is set to use joint i as the origin and the -axis aligned to the bone in the direction from i to j. Then a 3D rigid transformation can be used to transform the local coordinate system at bone to the one that represents the successive bone . To summary, the 3D rigid transformation, which is an element of , is denoted as a matrix of the form , with being a rotation matrix, and a 3D translation vector. Mathematically, the joint with coordinates w.r.t. coordinate system at will have coordinates w.r.t coordinate system with

Therefore, the entire forward chain is naturally represented as the product of a ground of 3D rigid transformations and an entire human skeleton can be constructed by several such chains. The Lie group manifold contains motions as curves, however, it is non-trivial to parametrize and regress curves on this manifold [48].

In other words, Lie groups are geometric objects, i.e., manifolds, while Lie algebras are linear objects, i.e., vector spaces. Therefore, it can beneficial to use Lie algebra parameters over the SE(3) matrix representation from the computation perspective. The product that represents a kinematic chain in needs to be mapped to its Lie algebra space, denoted as .

Lie algebra refers to the identity of in the tangent space [48]. A logarithm map can be used to associate ,

| (1) |

where can be comfortably mapped to the vector form, . Readers may refer to [48] for a closed-form solution and its mathematical proof and deviation details.

The above builds the foundation of recasting a skeletal pose as a parameterized vector, . denotes the number of kinematic chains (which is 5 in our case), is the number of joints in the -th chain, and the Lie algebra parameter vector of joint in chain . We can again look at the right plot in Figure 2,

3.2 PoseMoNet

In this section, we introduce the details of the architectural design of each component in the PoseMoNet by first presenting a novel Self-Projection module. Then we demonstrate the Pose Lifting Network (PLN) followed by the Motion Generator Network (MGN). The Global Refinement (GR) module is used to further refine the estimated result with a grid-based pose representation.

The Self-Projection Module

Previous works investigated the usage of Lie algebra-based pose representation [9, 33] to provide implicit physical constraints for estimating the 3D pose of an articulated object. We further attempt to use the Lie algebra pose representation to consolidated the prediction. The self-projection module, which is a derived version of the Mixture-of-Expert layer for regressing both the joint relative coordinates and the Lie algebra pose representation, is shown in 3. A Mixture-of-Expert design of soft parameter sharing is utilized. The output from the RNN cell is the input of a shared layer followed by the two separated expert paths by using softmax gating.

Given a kinematic chain of m joints with the corresponding parametrized Lie algebra pose , we are able to obtain the location of the Joint by a completely differentiable forward kinematics

At time step , the desired output from both the coordinate pose path and the Lie algebra path should be well-aligned after the forward kinematic inference if the predictions are perfect. The training process should be able to penalize the bad prediction where the two outputs are very different after the kinematic chain forwarding. We define this process as . Note that this kinematic chain forwarding process contains inevitable tiny biases during the calculation, therefore we consider this as a regularization step for a more stable training process. The L2 norm of the outputs of these two paths is then used as a regularization term for supervision, denoted as

Pose Lifting Network

The Pose Lifting Network (PLN) is designed to handle the task of lifting a 2D pose sequence to its corresponding 3D pose trajectory on its own duty, as well as behave as the encoder network in the overall framework.

As shown in Figure 4, the architecture design of the PLN is summarized as the following: it consists of a feed-forward neural network (FFNN), then a 2-layer bi-directional Gated Rectified Unit (GRU), and finally a Self-Projection (SP) layer for producing the 3D pose trajectory. There are two linear layers in the FFNN and both are followed by a Rectified Linear Unit (ReLU) as the non-linearity. Dropout is added after all non-linearity layers except for the output layer from the SP layer, which does not have an activation function.

More formally, we define the input of the PLN as a length sequence of 2D pose . There are two outputs from PLN: a latent representation of the entire sequence, , and a predicted 3D pose sequence . We note that where at each time step , , with and . The latent representation, , is a tensor of shape . With abbreviating the forward pass as a function mapping , the loss function for PLN is

Motion Generator Network

The overall architecture of the Motion Generator Network (MGN) is similar to the PLN with the following differences, as shown at the right side of Figure 4. Starting from the first step of motion generation at time , the input is the predicted 3D pose instead of the ground truth 3D pose . To our knowledge, this setup also differs from all previous works [8, 9, 11, 10]. We follow the common practice presented in previous works [11] to predict the residual of the motion from the previous time step.

Mathematically, at time step , the input of the MGN is and the latent representation . As before, we use a function mapping to abbreviate the forward pass in MGN. Consider the future motion prediction is a -step process, for every time step , where , the predicted 3D pose . We shorten the entire step-by-step process as . The loss function for the MGN is then

Global Refinement Module

We investigate two baseline architectures, the vanilla Gated Rectified Unit (GRU) and a Convolutional Encoder-Decoder (ConvED) to handle both the temporal and spatial context inside the pose trajectory, and then we propose a Global Refinement (GR) module built upon the two baselines for pose sequence refinement. The overall design in demonstrated in Figure 6. Overall, the refinement is performed over the entire predicted pose trajectory, . The refinement module contains two paths and a trainable adaptive weighting vector, . We denote the two forward passes that happened in the two paths as and . Formally, with refers to the Hadamard product, the forward pass of the GR Module can be represented as , which gives us another loss function

The idea of using a 2D convolutional architecture to process pose trajectories can become intuitive if the pose trajectory is converted to a spatio-temporal grid representation which is identical to common digital images. A demonstration of the spatio-temporal pose grid representation is shown in Figure 5. Suppose the human skeleton model joints, and there are time steps and in total for the human pose trajectory. A built pose grid is then of shape where the height is the joint index dimension and the width is the temporal dimension. We only show the , i.e., a 3-channel case to save the space but it can be easily generalized to a higher dimension case when the Lie Algebra representation is included (i.e., from 3 channels to 9 channels). While the adjacent sub-regions in the grid represent closely related joints, different sizes of receptive fields across different convolutional layers can, therefore, learn the spatial relationship of joints across the time dimension implicitly.

Loss Function

The overall loss function which is a weighted combination of the loss functions defined in the above sections:

In our experiments, we use and . The value of the weights are chosen by empirical results. It is worth to notice that the term behaves more like a constraint noise regularizer as there are always small biases caused by the re-projection process, i.e., the process of converting the Lie algebra-pose to the coordinate-based pose. Using a weighted loss in our framework can be considered from two aspects. First, is used to control the balance of the importance between pose estimation and future motion prediction. Consider the stochasticity and a usually longer length of the temporal scope for motion generation, the weight of the motion generation loss term is reduced to 0.2. Second, the regularizer weight, , is used to re-scale the regularizer to a similar scale of the other terms in the loss function.

3.3 Analysis of the Multitask Formulation

A common practice for using an encoder-decoder or a sequence-to-sequence model for future motion prediction is that the encoder is only used for encoding the observed pose sequence [8, 9, 11, 10] and it outputs a latent vector representation that ideally covers all the information of the past 3D pose trajectory. In our problem setup, instead, we use a past 2D pose sequence only.

The question left is, does a 2D pose sequence contain sufficient information that represents the 3D dynamic of human motion? We build ablative experiments in Section 4 to test it and, unfortunately, the answer is negative. This observation drove the design of PLN of including a 3D pose sequence estimation task to force the model to encode sufficient information about the 3D dynamics from a 2D pose sequence in the latent representation.

To sum up, the design of the overall framework reinforces the multitask foundation from two different granularity. First, with the incorporation of the Self-Projection layer, the outputs from recurrent cells are used to estimate two different types of pose representations where the soft parameter sharing mechanism contained in the SP layers can also introduce plausible regularization power and induction bias. It frames the multitask setting at a local level. Secondly, along with the MGN, the PLN is trained to not only lifting the 2D pose sequence to its corresponding 3D pose sequence but also try to estimate and encode 3D dynamics efficiently for generating reasonable future 3D motion, it concludes the multitask setup at the global level.

4 Empirical Evaluations

4.1 Dataset and Evaluation

Human3.6M [30] is a large-scale dataset that contains 3.6 million video frames with 3D human poses captured by a MoCap system in an indoor environment. Eleven subjects who are professional actors perform 15 different daily activities such as eating, walking, smoking, discussing, and greeting. All of the performances are captured by 4 cameras placed at the pre-defined positions in the room so the intrinsic and extrinsic camera parameters are known. Only video frames from seven of the eleven subjects are annotated and publicly available, so we follow the previous work to split the training and testing set [1, 2, 4, 5, 6]. We downsample the video frame rate by a factor of 2 reducing the video from 50 MHz to 25 MHz. To align with the literature, we modify the skeleton data in Human3.6M to a 16-joint version. Note that we do not train specific models for each action but only train a multi-action model in an end-to-end fashion while early works may use multiple models and each for a single type of action only.

HumanEva-I [29], on the other hand, is a relatively small dataset, where three subjects are recorded from three camera views at 60 Hz. Only three primary actions are used for evaluation, namely, Walk, Job and Boxing. Due to its limited size and reported issue with the corrupt frames [5] which makes it is hard to be used for training and precise evaluation for pose estimation from video, we only show qualitative results on it.

For 3D pose sequence estimation, we adopt evaluation protocols from existing works [5, 6, 2, 4, 3], namely Mean Per Joint Position Error (MPJPE) and Procrustes-MPJPE (P-MPJPE). Where MPJPE is the average Euclidean distance of predicted joints to the ground-truth and P-MPJPE is the MPJPE calculated after the estimated 3D pose is aligned to the ground-truth by the Procrustes method (a similarity transformation). For future motion prediction, we benchmark the result with the most commonly used metric, Mean Angle Error (MAE) [10, 31, 11, 9, 8]. We also report the evaluation results by adopting the 2D ground truth pose sequence as input to demonstrate the lower bound of errors of our PoseMoNet.

4.2 Implementation Detail

For all recurrent models in the framework, the hidden state size is set to 512 with a 25% dropout rate. We train the model with an initial learning rate of 0.001. The training process lasts for a fixed number 30 epochs for Human3.6M with a batch size of 32 as well as decaying the learning rate every 10000 training steps by a factor of 0.9. Throughout the experiments, models are trained models with 9, 27, and 54 frames length of the input past 2D pose sequence along with a fixed 20-frame future motion context. As HumanEva-I is a much smaller dataset, the training process lasts for 600 epochs with a similar hyper-paramter setup as Human3.6M.

4.3 Ablative Study

Lie Algebra Pose Representation

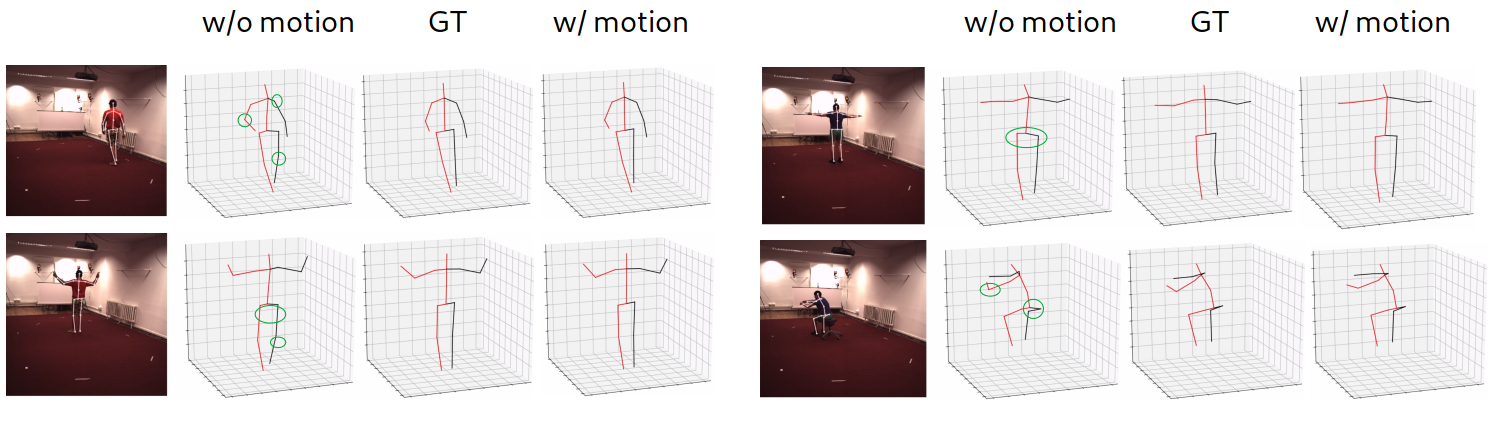

It has been shown in previous works that directly regressing the future poses with the ordinary ”XYZ” pose representation [8, 9, 11] can easily produce unrealistic poses, we first validate this observation with our PoseMoNet by turning off the supervision at the Lie algebra pose representation outputted by the self-projection module. It is worth to highlight that, although the ordinary ”XYZ” pose representation is most commonly used and there is no significant shortage of using it, we argue and empirically show that only the plain pose representation is insufficient in our work. We have 2 fundamental differences compared with existing works. First, to the best of our knowledge, a 3D MoCap pose sequence is required as the input for all the existing 3D future motion prediction method, where in our work, we only use 2D pose keypoint sequence as the only input to the task. Secondly, in our work, we are looking 1 more step further by considering a real world application scenario, we only leverage estimated 2D keypoint location without any human intervention, which make the problem more difficult and significantly different. The result shows that both training and prediction are unstable and can easily lead to divergence. Therefore, we omit the quantitative comparison for this ablative experiment and show an expressive qualitative visualization.

We demonstrate the visual results in Figure 7. As mentioned, we first train our framework without supervision on the Lie algebra pose representation. The result is shown in the middle column, denote as ”XYZ”. We also replicated the model proposed as Res-GRU [11] and show the result in the right column. We see that the previous competitive method may also suffer from the issue caused by no physical constraints. Finally, we add the results predicted by our framework trained completely with both pose representation and all loss terms. Not surprisingly, while other setups start to diverge on the predictions under a longer-than-usual context, the prediction from our framework, even after a 1600ms temporal context, is still natural and dynamic. This observation consolidates the effectiveness of including the Lie algebra pose representation in the training phase as well as using it to provide self-regularization with implicit physical constraints.

Multitask vs. Single-task

We demonstrate the advantage of building the multitask framework over training two standalone models with ablative experiments. The first group of models is Pose Liter Networks (PLN) being trained without the Motion Generator Networks (MGN) followed after. Different input lengths are covered in our experiments: the sequences of 5, 9, 27, and 54 frames, are used to train 4 PLNs, respectively. We then train another group of 4 models with the exact same configuration but with the Motion Generator Network trained together. The comparison of the quantitative results between these two groups is shown in Table 1.

| 9-frame | 27-frame | 54-frame | |

|---|---|---|---|

| w/o motion (GT) | 43.9 (31.4) | 41.5 (31.7) | 37.5 (27.1) |

| w/ motion (GT) | 42.3 (29.1) | 38.3 (27.5) | 33.4 (25.6) |

| w/o motion (SH) | 58.5 (45.7) | 53.2 (41.3) | 47.4 (28.5) |

| w/ motion (SH) | 56.8 (44.5) | 51.1 (40.9) | 45.3 (27.1) |

The quantitative results provide us a better understanding of the benefits of introducing the multitask setting. We observe that, for varying lengths of input sequences, training the PLN along with the MGN leads to better quantitative results under both protocols. Surprisingly, if we focus on protocol 2, P-MPJPE, the distance metric after the alignment at scale, rotation, and transformation, we notice a more significant boost over protocol 1 (MPJPE). We argue that by including the MGN, i.e., providing a broader temporal context in the future, our PLN obtained stronger constraints and resulted in predicting results that are closer to the ground truth, as shown in Figure 8.

For the sake of the completeness of the ablative study, we also performed experiments on removing the video pose estimation task, i.e., the supervision for 3D pose estimation is turned off and therefore only 2D pose sequence is used directly as input to generate future motion, and the training process suffered from huge fluctuation and diverged quickly, which consolidated the fact that 2D and 3D pose are not bijective therefore it can lead to massive ambiguity even in the latent space function mapping.

With vs. Without the Global Refinement Module

| GRU | ConvED | GRU + ConvED w/o AW | GRU + ConvED w/ AW | |

|---|---|---|---|---|

| MPJPE | 2.6 | 1.4 | 2.9 | 3.1 |

| MAE | 0.04 | 0.07 | 0.05 | 0.05 |

The proposed global refinement module consists of two baseline refinement networks mentioned previously. The effectiveness of the global refinement module is validated from two perspectives. To select the best architecture for pose trajectory refinement, we compared different settings for refining the entire pose trajectory under the following settings: using only the GRU-based model, using only the ConvED, and combining them to the proposed global refinement module. Quantitative results are obtained with different lengths of input video sequences and reported in Table 2. We see that the GRU alone provides more improvement for pose estimation, which consolidated our observation that a significant amount of errors on pose sequence estimation is caused by the non-smooth prediction among consecutive frames. On the other hand, ConvED focuses more on the spatiotemporal context of the pose sequence, which not surprisingly enhanced the result of the motion generator more than the GRU. On combining results from both paths, the adaptive weighting mechanism has been shown to be helpful in terms of quantitative performance.

Besides, the effectiveness of the refinement module along with the multitask vs. single task setting is also examined by first attaching the GR module only on the estimated 3D pose sequence, i.e., the output from the Pose Lifting Network, then we apply the GR module on the entire estimated pose sequence (predicted 3D pose + predicted future motion). Qualitative results is briefly reported in Table 2.

4.4 Evaluation on Human3.6M Dataset

3D Pose Estimation in Video

| MPJPE | Direct. | Discuss | Eating | Greet | Phone | Photo | Pose | Purch. | Sitting | SitD. | Smoke | Wait | WalkD. | Walk | WalkT. | Avg. |

| Ionescu et al. [30] | 132.7 | 183.6 | 132.3 | 164.4 | 162.1 | 205.9 | 150.6 | 171.3 | 151.6 | 243.0 | 162.1 | 170.7 | 177.7 | 96.6 | 127.9 | 162.1 |

| Martinez et al. [2] | 51.8 | 56.2 | 58.1 | 59.0 | 69.5 | 78.4 | 55.2 | 58.1 | 74.0 | 94.6 | 62.3 | 59.1 | 65.1 | 49.5 | 52.4 | 62.9 |

| Hossain & Little [6] | 48.4 | 50.7 | 57.2 | 55.2 | 63.1 | 72.6 | 53.0 | 51.7 | 66.1 | 80.9 | 59.0 | 57.3 | 62.4 | 46.6 | 49.6 | 58.3 |

| Zhao el al. [1] | 47.3 | 60.7 | 51.4 | 60.5 | 61.1 | 49.9 | 47.3 | 68.1 | 86.2 | 55.0 | 67.8 | 61.0 | 42.1 | 60.6 | 45.3 | 57.6 |

| Yang et al. [49] | 51.5 | 58.9 | 50.4 | 57.0 | 62.1 | 65.4 | 49.8 | 52.7 | 69.2 | 85.2 | 57.4 | 58.4 | 43.6 | 60.1 | 47.7 | 58.6 |

| Fang et al. [44] | 50.1 | 54.3 | 57.0 | 57.1 | 66.6 | 73.3 | 53.4 | 55.7 | 72.8 | 88.6 | 60.3 | 57.7 | 62.7 | 47.5 | 50.6 | 60.4 |

| Cai el al. [20] | 44.6 | 47.4 | 45.6 | 48.8 | 50.8 | 59.0 | 47.2 | 43.9 | 57.9 | 61.9 | 49.7 | 46.6 | 51.3 | 37.1 | 39.4 | 48.8 |

| Pavllo et al. [5] | 45.2 | 46.7 | 43.3 | 45.6 | 48.1 | 55.1 | 44.6 | 44.3 | 57.3 | 65.8 | 47.1 | 44.0 | 49.0 | 32.8 | 33.9 | 46.8 |

| Cheng et al. [14] | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | 44.8 |

| Ours (9-frame) | 54.3 | 60.3 | 51.9 | 55.9 | 58.4 | 66.2 | 59.9 | 55.6 | 65.7 | 68.2 | 56.4 | 58.8 | 57.6 | 48.4 | 50.0 | 56.8 |

| Ours (27-frame) | 46.0 | 51.4 | 44.3 | 49.1 | 53.6 | 59.3 | 47.5 | 46.9 | 65.5 | 75.7 | 49.6 | 50.5 | 52.4 | 43.7 | 46.2 | 51.1 |

| Ours (54-frame) | 42.7 | 45.0 | 40.5 | 43.4 | 46.4 | 51.4 | 46.0 | 40.7 | 52.3 | 51.1 | 44.2 | 44.1 | 43.4 | 38.1 | 38.3 | 44.3 |

| Chen et al. [21] | 41.4 | 43.5 | 40.1 | 42.9 | 46.6 | 51.9 | 41.7 | 42.3 | 53.9 | 60.2 | 45.4 | 41.7 | 46.0 | 31.5 | 32.7 | 44.1 |

| Cheng et al. [14] | 36.2 | 38.1 | 42.7 | 35.9 | 38.2 | 45.7 | 36.8 | 42.0 | 45.9 | 51.3 | 41.8 | 41.5 | 43.8 | 33.1 | 28.6 | 40.1 |

| P-MPJPE | Direct. | Discuss | Eating | Greet | Phone | Photo | Pose | Purch. | Sitting | SitD. | Smoke | Wait | WalkD. | Walk | WalkT. | Avg. |

| Martinez et al. [2] | 39.5 | 43.2 | 46.4 | 47.0 | 51.0 | 56.0 | 41.4 | 40.6 | 56.5 | 69.4 | 49.2 | 45.0 | 49.5 | 38.0 | 43.1 | 47.7 |

| Hossain & Little [6] | 36.9 | 37.9 | 42.8 | 40.3 | 46.8 | 46.7 | 37.7 | 36.5 | 48.9 | 52.6 | 45.6 | 39.6 | 43.5 | 35.2 | 38.5 | 42.0 |

| Yang et al. [49] | 26.9 | 30.9 | 36.3 | 39.9 | 43.9 | 47.4 | 28.8 | 29.4 | 36.9 | 58.4 | 41.5 | 30.5 | 29.5 | 42.5 | 32.2 | 37.7 |

| Cai el al. [20] | 35.7 | 37.8 | 36.9 | 40.7 | 39.6 | 45.2 | 37.4 | 34.5 | 46.9 | 50.1 | 40.5 | 36.1 | 41.0 | 29.6 | 33.2 | 39.0 |

| Pavllo et al. [5] | 34.1 | 36.1 | 34.4 | 37.2 | 36.4 | 42.2 | 34.4 | 33.6 | 45.0 | 52.5 | 37.4 | 33.8 | 37.8 | 25.6 | 27.3 | 36.5 |

| Cheng et al. [14] | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | 34.1 |

| Ours (27-frame) | 35.2 | 39.4 | 36.6 | 42.3 | 43.7 | 45.5 | 34.9 | 35.4 | 47.1 | 55.2 | 43.3 | 38.1 | 36.3 | 34.7 | 36.2 | 40.2 |

| Ours (54-frame) | 28.4 | 29.6 | 33.9 | 38.5 | 37.4 | 41.9 | 29.4 | 30.9 | 39.8 | 49.7 | 38.5 | 31.6 | 31.8 | 28.2 | 31.7 | 34.7 |

| Chen et al. [21] | 32.6 | 35.1 | 32.8 | 35.4 | 36.3 | 40.4 | 32.4 | 32.3 | 42.7 | 49.0 | 36.8 | 32.4 | 36.0 | 24.9 | 26.5 | 35.0 |

| Cheng et al. [14] | 28.7 | 30.3 | 35.1 | 31.6 | 30.2 | 36.8 | 31.5 | 29.3 | 41.3 | 45.9 | 33.1 | 34.0 | 31.4 | 26.1 | 27.8 | 32.8 |

The first table focuses on MPJPE metric: Note that 2 results from [14] are reported, the one with only the averaged result has the same problem setting with the compared literature, the one with complete results for each category involves augmented data that goes beyond the standard setting considered here. Methods leverage extra dataset or use extra data augmentations [21, 14] are included at the bottom of the table for the completeness purpose; they serve as a form of performance upper-bound for all the methods considered in our standard setting.

Moreover, the alternative metric of P-MPJPE is reported in the second table. Note here Cheng et al. [14] has again two results being reported here, where the first one use the exact same configuration with other compared methods and the one noted with used extra data augmentation step to improve the result.

: the corresponding method uses a single frame as input;

: using multiple frames as input but with less or a similar number of frames compared to us;

: using more frames as input compared to us (243 vs. ours 54).

: using extra data augmentation to obtained better 2D keypoint sequence input or involved extra dataset in training

As shown in Table 3, we compare with previous state-of-the-art methods for 3D pose estimation on the Human3.6M dataset. Different collections of methods are included. We have evaluated the methods proposed in [2, 1] which focus on 3D pose estimation from a single image. For a fair comparison, we also compete with methods that leverage a video sequence as input [6, 5, 20, 21, 14, 49]. It is important to note that a recently proposed work [14] leverages extra data augmentation step to improve the 2D keypoint detection result, which further pushed the performance. Two results, one without the data augmentation step (which is using the same input compared with other methods) and one with their proposed data augmentation technique, are reported from [14] and we include both results. Unfortunately, as the source code is not publicly available at the time we conduct experiments, we are not able to reproduce the proposed data augmentation. For the sake of completeness, we report both results in Table 3.

We can see that compared with the state-of-the-art methods, by exploiting the temporal information along the sequence as well as the motion dynamics from future motion prediction task, our approach achieves the decent performance with the smallest error averaging across all actions and holding the 1st place for most categories of actions, such as Eating, Greet, Purch, etc. Interestingly, two methods [6, 5] that use very large context information ( 243 frames) outperform our framework on action types ”Walking” and ”Walking Together”. These two types of actions are highly predictable if being estimated from a larger context due to its cyclic nature, where the pose estimation task can be benefited. On the other hand, our method performs particularly well on more random actions, such as ”Eating”, ”Photo”, ”Purch” and ”Smoke” compared with existing methods. This observation meets the fact that a human does not need to see a relatively long presence of other people to estimate and understand their pose from 2D inputs.

Furthermore, our framework only needs to observe a relatively short sequence (54 frames) to produce sound predictions for both 3D pose estimation and motion prediction, which makes it comes with the potential to be deployed easier in a real-world real-time situation. We argue that the longer the context is, the more diminishing of returns, even potential negative effects, can be observed. This can be seen in the cases of estimating pose stochastic activities such as ”Sitting”, ”Purch”, ”Posing” and ”Discussing”. Our method has more stable and quantitatively better results compared to methods that leverage a larger context [5, 14]. We have also noticed that existing works can produce better quantitative results with even a single-frame input [5] though longer contexts are used in our framework, we argue that the architecture in [5] at the single-frame setting requires significantly more computations as it is a 5-block residual architecture, also with more parameters, where our recurrent-based architecture can be more efficient. It is also worth to mention that the future motion predictions task suffer significantly from using an estimated 2D keypoint sequence as the original input, it also affects the performance of pose estimation, particularly when the sequence is short (i.e., 9-frame).

Consider that the skeleton visualization of the 3D poses is somehow abstract, we attempt to perform a reverse fitting process with the SMPL [26] shape model. A grounded visualization of the estimated poses can be obtained and is shown in Figure 9. The predictions produced by our proposed framework can not only achieve decent quantitative performance but they are also physically reasonable that rarely the body parts have collision or cross each other. Furthermore, we also provide visualization for HumanEva-I here to demonstrate the generalization ability of the proposed work.

Future Motion Prediction

| Methods | Discussion | Greeting | ||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 80ms | 160ms | 320ms | 400ms | 560ms | 640ms | 720ms | 1000ms | 80ms | 160ms | 320ms | 400ms | 560ms | 640ms | 720ms | 1000ms | |

| ERD [10] | 2.22 | 2.38 | 2.58 | 2.69 | 2.89 | 2.93 | 2.94 | 3.11 | 1.70 | 2.04 | 2.60 | 2.81 | 3.29 | 3.47 | 3.55 | 3.43 |

| LSTM-3LR [10] | 1.80 | 2.00 | 2.13 | 2.13 | 2.29 | 2.32 | 2.36 | 2.44 | 0.93 | 1.51 | 2.27 | 2.54 | 2.97 | 3.05 | 3.12 | 3.09 |

| S-RNN [31] | 1.16 | 1.40 | 1.75 | 1.85 | 2.06 | 2.07 | 2.08 | 2.19 | 1.33 | 1.60 | 1.83 | 1.98 | 2.27 | 2.28 | 2.30 | 2.31 |

| Res-GRU [11] | 0.31 | 0.69 | 1.03 | 1.12 | 1.52 | 1.61 | 1.70 | 1.87 | 0.52 | 0.86 | 1.30 | 1.47 | 1.78 | 1.75 | 1.82 | 1.96 |

| Zero-velocity [11] | 0.31 | 0.67 | 0.97 | 1.04 | 1.41 | 1.56 | 1.71 | 1.96 | 0.54 | 0.89 | 1.30 | 1.49 | 1.79 | 1.74 | 1.77 | 1.80 |

| MHU [32] | 0.31 | 0.66 | 0.93 | 1.00 | 1.37 | 1.51 | 1.66 | 1.88 | 0.54 | 0.87 | 1.27 | 1.45 | 1.75 | 1.71 | 1.74 | 1.87 |

| HMR [9] | 0.29 | 0.55 | 0.83 | 0.94 | 1.35 | 1.49 | 1.61 | 1.72 | 0.52 | 0.85 | 1.25 | 1.40 | 1.65 | 1.62 | 1.67 | 1.73 |

| AGED [8] | 0.27 | 0.56 | 0.76 | 0.83 | 1.25 | - | - | 1.55 | 0.56 | 0.81 | 1.30 | 1.46 | - | - | - | 1.69 |

| Traj [34] | 0.20 | 0.51 | 0.77 | 0.85 | 1.33 | - | - | 1.70 | 0.36 | 0.60 | 0.95 | 1.13 | - | - | - | - |

| Ours | 0.30 | 0.55 | 0.82 | 0.97 | 1.38 | 1.55 | 1.65 | 1.85 | 0.53 | 0.78 | 1.28 | 1.41 | 1.69 | 1.67 | 1.71 | 1.79 |

| Methods | Posing | Walking Dog | ||||||||||||||

| 80ms | 160ms | 320ms | 400ms | 560ms | 640ms | 720ms | 1000ms | 80ms | 160ms | 320ms | 400ms | 560ms | 640ms | 720ms | 1000ms | |

| ERD [10] | 2.42 | 2.77 | 3.26 | 3.39 | 3.43 | 3.42 | 3.45 | 3.87 | 1.58 | 1.78 | 2.02 | 2.10 | 2.31 | 2.37 | 2.48 | 2.60 |

| LSTM-3LR [10] | 1.22 | 1.89 | 3.02 | 3.53 | 4.25 | 4.57 | 4.83 | 4.60 | 0.76 | 1.29 | 1.91 | 2.18 | 2.72 | 3.01 | 3.30 | 3.78 |

| S-RNN [31] | 1.74 | 1.89 | 2.23 | 2.43 | 2.67 | 2.73 | 2.79 | 3.42 | 1.57 | 1.73 | 1.93 | 1.96 | 2.13 | 2.17 | 2.23 | 2.20 |

| Res-GRU [11] | 0.41 | 0.84 | 1.53 | 1.81 | 2.06 | 2.21 | 2.24 | 2.53 | 0.56 | 0.95 | 1.33 | 1.48 | 1.78 | 1.81 | 1.88 | 1.96 |

| Zero-velocity [11] | 0.28 | 0.57 | 1.13 | 1.38 | 1.81 | 2.14 | 2.23 | 2.78 | 0.60 | 0.98 | 1.36 | 1.50 | 1.74 | 1.80 | 1.87 | 1.96 |

| MHU [32] | 0.33 | 0.64 | 1.22 | 1.47 | 1.82 | 2.11 | 2.17 | 2.51 | 0.56 | 0.88 | 1.21 | 1.37 | 1.67 | 1.72 | 1.81 | 1.90 |

| HMR [9] | 0.24 | 0.53 | 1.12 | 1.42 | 1.75 | 1.89 | 2.02 | 2.50 | 0.55 | 0.87 | 1.20 | 1.36 | 1.65 | 1.70 | 1.77 | 1.84 |

| AGED [8] | 0.31 | 0.58 | 1.12 | 1.34 | - | - | - | 2.65 | 0.50 | 0.81 | 1.15 | 1.27 | - | - | - | 1.92 |

| Traj [34] | 0.19 | 0.44 | 1.01 | 1.24 | - | - | - | - | 0.46 | 0.79 | 1.12 | 1.29 | - | - | - | - |

| Ours | 0.24 | 0.57 | 1.15 | 1.45 | 1.80 | 2.10 | 2.15 | 2.67 | 0.53 | 0.88 | 1.15 | 1.41 | 1.70 | 1.76 | 1.83 | 1.99 |

It is worth highlighting that our proposed work has a fundamental difference with the existing motion prediction literature where makes it is infeasible to have a completely fair and controlled experiment: we do not use the historical 3D ground truth as the input of the motion prediction task. In Table 4, all the previous state-of-the-art methods use past 3D ground truth as the context as well as a ground truth seed pose for generating the future poses. We highlight our results with bold font whenever our results can achieve the top-3 overall ranking. In spite of this, it is still clear that our quantitative results are comparable with methods that leveraged 3D ground truth. This helps to demonstrate the further potential of our proposed framework.

Following the literature, we evaluate the future motion generation task on four commonly used test action classes, namely, discussion, greeting, posing, and walking dog. Mean Angle Error (MAE) is reported for this task. We compare with milestone works in the literature including ERD [10], S-RNN [31], Res-GRU [11], HMU [7] and a recently proposed Lie algebra-based method, HMR [9]. Unfortunately, as mentioned before, there is no easy way to waive the disadvantage of not using 3D ground truth but we are still able to obtain comparable quantitative results.

From Table 4, we can see that we can achieve comparable quantitative performance with the state-of-the-art methods [8, 34] within a 560ms time interval. Interestingly, the quantitative advantage of our method starts to deviate after 720ms. First, we believe this observation is acceptable, as there is, indeed, a potential drawback of using only the 2D pose sequence as the input. Besides, we argue that there are always trade-offs between the quantitative result and the quality of generated future motion as it has been pointed out that a very simple baseline, Zero-velocity [11], which always produces the static mean pose outperforms many existing works quantitatively.

We further validate the above observation by a qualitative visualization provided in 10. Surprisingly, we found that the relatively high error measured under the MAE metrics not necessarily translates to a ”bad” prediction of the future motion due to the fact that MAE is ambiguous which leads to the result of different sets of angles that may yield similar poses. The visualization further consolidated that we are still able to generate sound poses for future motions. In particular, many previous methods have the problem that the predicted future motion starts to either drift or converge to a static pose [10, 7] but in our case, for example, the walking action can keep being dynamic after a relatively long period.

| Methods | Discussion | Greeting | ||||||

|---|---|---|---|---|---|---|---|---|

| 80ms | 160ms | 320ms | 400ms | 80ms | 160ms | 320ms | 400m | |

| Zero-velocity [11] | 0.48 | 0.79 | 1.10 | 1.24 | 0.68 | 1.01 | 1.47 | 1.61 |

| AGED [8] | 0.40 | 0.68 | 0.85 | 0.97 | 0.62 | 0.92 | 1.30 | 1.42 |

| Traj [34] | 0.27 | 0.56 | 0.84 | 0.95 | 0.55 | 0.89 | 1.20 | 1.36 |

| Ours | 0.30 | 0.55 | 0.82 | 0.97 | 0.53 | 0.78 | 1.28 | 1.41 |

| Methods | Posing | Walking Dog | ||||||

| 80ms | 160ms | 320ms | 400ms | 80ms | 160ms | 320ms | 400m | |

| Zero-velocity [11] | 0.45 | 0.70 | 1.35 | 1.57 | 0.64 | 1.10 | 1.56 | 1.73 |

| AGED [8] | 0.39 | 0.63 | 1.31 | 1.62 | 0.65 | 1.01 | 1.33 | 1.54 |

| Traj [34] | 0.26 | 0.56 | 1.21 | 1.45 | 0.59 | 0.91 | 1.20 | 1.47 |

| Ours | 0.24 | 0.57 | 1.15 | 1.46 | 0.53 | 0.88 | 1.15 | 1.41 |

Note that the shape visualization is only for a demonstrative purpose, we do not estimate the shape parameters in our framework. This fact also proves the effectiveness of our framework from another aspect where the predicted future motions are realistic and dynamic even we do not explicitly model the shape and constraints provided with the shape fitting process.

For pursuing a relative fair comparison, with the lighted shined by state-of-the-art methods, we have also either re-implemented [11, 8] or adopted public code base[34] to retrain previous methods by feeding them the estimated 3D historical pose sequence as the input. Due to the fact that different skeletal structures are used (different number of joints) as well as the input is no longer historical 3D ground truth poses, the quantitative results shacked significantly and we reported them in Table 5. Clearly, the performance of the previous methods is significantly affected by the noisier input and our method is achieving the best or the second-best position among most of the time horizons.

4.5 Runtime and Efficiency

A complete theoretical complexity analysis of our proposed framework is beyond the scope of this work. Therefore, we use a recently configured workstation to empirically evaluate the computational complexity. The workstation comes with an Intel i9-7900X CPU, RTX 2080 Ti GPU with 12 GB graphic memory, and 128 GB random access memory (RAM). We randomly select 1000 input 2D keypoint sequences of length 27, which is the same as our previous experiment, and use them to perform inference with different lengths of future motion conditions. The experiment covers both cases where the Lie-based pose representation is included or not and tests the runtime differences by involving the motion generator. Quantitative results is shown in Table 6. Using recurrent architecture in our framework leads to a step-by-step inference nature, i.e., for inference at time step , the computation at time step must be finished, the computational cost of the proposed method grows at a linear scale, therefore, for both the training and inference stage, the inference and training time only depend on how many steps we have for pose estimation and future motion generation. Real-time applications then become fully considerable. The recurrent architecture can be a better fit when the input sequence is relatively short (i.e., with 9, 27, 54-frame setting), however, convolutional architectures [5] can be more efficient when the longer input sequence is involved (i.e., over 100-frame input). From a potential application aspect, it should be decided on the application end which one is a better fit.

| # of Future Time Steps | 0 | 27 | 54 |

|---|---|---|---|

| w\Lie | 83ms | 140ms | 189ms |

| w\o Lie | 57ms | 113ms | 157ms |

5 Conclusion

The closely related, but often separately considered tasks of 3D human pose estimation and future motion prediction are jointly tackled in this paper. By doing so, a dedicated approach, PoseMoNet, is developed to exploit the innate connections of both tasks: The proposed framework, to the best of our knowledge, is the first work to tackle the 3D pose sequence estimation and future motion prediction together. A novel mixture-of-expert self-projection module is introduced to implicitly leverage the physical constraints provided by the Lie algebra pose representation; Multiple granularities of multitask settings are investigated and leveraged in the proposed framework. We demonstrated that 3D MoCap data is not a sole requirement for generating future 3D motion by combining it with the 3D pose sequence estimation task. Empirical experiments on well-known Human3.6M and HumanEva-I benchmarks demonstrate the competitive performance in addressing the problems of 3D pose sequence estimation and future motion generation. Future work may focus on multiple aspects. An interesting direction is that an investigation beyond the estimation of current and future single poses, including estimation of current and future 3D shapes, and further investigation into visual scenarios involving the interactions of multiple people. In addition, recurrent architectures are the major components in our proposed work, future works may pay attention to other advanced research efforts such as transformer architectures that can avoid step-by-step inference caused by the recurrent architecture as well as graph convolution networks that can leverage the skeletal property of articulated objects.

References

- [1] L. Zhao, X. Peng, Y. Tian, M. Kapadia, D. N. Metaxas, Semantic graph convolutional networks for 3d human pose regression, in: CVPR, 2019, pp. 3425–3435.

- [2] J. Martinez, R. Hossain, J. Romero, J. J. Little, A simple yet effective baseline for 3d human pose estimation, in: ICCV, 2017, pp. 2640–2649.

- [3] C.-H. Chen, D. Ramanan, 3d human pose estimation= 2d pose estimation+ matching, in: CVPR, 2017, pp. 7035–7043.

- [4] B. X. Nie, P. Wei, S.-C. Zhu, Monocular 3d human pose estimation by predicting depth on joints, in: ICCV, IEEE, 2017, pp. 3467–3475.

- [5] D. Pavllo, C. Feichtenhofer, D. Grangier, M. Auli, 3d human pose estimation in video with temporal convolutions and semi-supervised training, in: CVPR, 2019, pp. 7753–7762.

- [6] M. Rayat Imtiaz Hossain, J. J. Little, Exploiting temporal information for 3d human pose estimation, in: ECCV, 2018, pp. 68–84.

- [7] P. Ghosh, J. Song, E. Aksan, O. Hilliges, Learning human motion models for long-term predictions, in: Proceedings of the International Conference on 3D Vision, IEEE, 2017, pp. 458–466.

- [8] L.-Y. Gui, Y.-X. Wang, X. Liang, J. M. Moura, Adversarial geometry-aware human motion prediction, in: ECCV, 2018, pp. 786–803.

- [9] Z. Liu, S. Wu, S. Jin, Q. Liu, S. Lu, R. Zimmermann, L. Cheng, Towards natural and accurate future motion prediction of humans and animals, in: CVPR, 2019, pp. 10004–10012.

- [10] K. Fragkiadaki, S. Levine, P. Felsen, J. Malik, Recurrent network models for human dynamics, in: ICCV, 2015, pp. 4346–4354.

- [11] J. Martinez, M. J. Black, J. Romero, On human motion prediction using recurrent neural networks, in: CVPR, 2017, pp. 2891–2900.

- [12] M. Burenius, J. Sullivan, S. Carlsson, 3d pictorial structures for multiple view articulated pose estimation, in: CVPR, 2013, pp. 3618–3625.

- [13] X. Chen, A. L. Yuille, Articulated pose estimation by a graphical model with image dependent pairwise relations, in: Advances in neural information processing systems, 2014, pp. 1736–1744.

- [14] Y. Cheng, B. Yang, B. Wang, R. T. Tan, 3d human pose estimation using spatio-temporal networks with explicit occlusion training, Thirty-Fourth AAAI Conference on Artificial Intelligence (2020) 10631–10638.

- [15] L. Tian, P. Wang, G. Liang, C. Shen, An adversarial human pose estimation network injected with graph structure, Pattern Recognition 115 (2021) 107863.

- [16] Y. Bin, Z.-M. Chen, X.-S. Wei, X. Chen, C. Gao, N. Sang, Structure-aware human pose estimation with graph convolutional networks, Pattern Recognition 106 (2020) 107410.

- [17] K. Lee, I. Lee, S. Lee, Propagating lstm: 3d pose estimation based on joint interdependency, in: Proceedings of the European Conference on Computer Vision (ECCV), 2018, pp. 119–135.

- [18] Y. Cai, L. Ge, J. Cai, J. Yuan, Weakly-supervised 3d hand pose estimation from monocular rgb images, in: Proceedings of the European Conference on Computer Vision (ECCV), 2018, pp. 666–682.

- [19] Y. Cai, L. Ge, J. Cai, N. Magnenat-Thalmann, J. Yuan, 3d hand pose estimation using synthetic data and weakly labeled rgb images, IEEE transactions on pattern analysis and machine intelligence (2020).

- [20] Y. Cai, L. Ge, J. Liu, J. Cai, T.-J. Cham, J. Yuan, N. M. Thalmann, Exploiting spatial-temporal relationships for 3d pose estimation via graph convolutional networks, in: ICCV, 2019, pp. 2272–2281.

- [21] T. Chen, C. Fang, X. Shen, Y. Zhu, Z. Chen, J. Luo, Anatomy-aware 3d human pose estimation in videos, IEEE Transactions on Circuits and Systems for Video Technology (2021) 1–1.

- [22] J. Xu, Z. Yu, B. Ni, J. Yang, X. Yang, W. Zhang, Deep kinematics analysis for monocular 3d human pose estimation, in: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 899–908.

- [23] A. Kanazawa, J. Y. Zhang, P. Felsen, J. Malik, Learning 3d human dynamics from video, in: CVPR, 2019, pp. 5614–5623.

- [24] J. Y. Zhang, P. Felsen, A. Kanazawa, J. Malik, Predicting 3d human dynamics from video, in: ICCV, 2019, pp. 7114–7123.

- [25] M. Kocabas, N. Athanasiou, M. J. Black, Vibe: Video inference for human body pose and shape estimation, in: CVPR, 2020, pp. 5253–5263.

- [26] M. Loper, N. Mahmood, J. Romero, G. Pons-Moll, M. J. Black, Smpl: A skinned multi-person linear model, ACM transactions on graphics 34 (6) (2015) 1–16.

- [27] J. M. Wang, D. J. Fleet, A. Hertzmann, Gaussian process dynamical models for human motion, IEEE Trans. Pattern Anal. Mach. Intell. 30 (2) (2007) 283–298.

- [28] A. M. Lehrmann, P. V. Gehler, S. Nowozin, Efficient nonlinear markov models for human motion, in: CVPR, 2014, pp. 1314–1321.

- [29] L. Sigal, A. O. Balan, M. J. Black, Humaneva: Synchronized video and motion capture dataset and baseline algorithm for evaluation of articulated human motion, International Journal of Computer Vision 87 (1-2) (2010) 4.

- [30] C. Ionescu, D. Papava, V. Olaru, C. Sminchisescu, Human3.6m: Large scale datasets and predictive methods for 3d human sensing in natural environments, IEEE Trans. Pattern Anal. Mach. Intell. 36 (7) (2014) 1325–1339.

- [31] A. Jain, A. R. Zamir, S. Savarese, A. Saxena, Structural-rnn: Deep learning on spatio-temporal graphs, in: CVPR, 2016, pp. 5308–5317.

- [32] Y. Tang, L. Ma, W. Liu, W. Zheng, Long-term human motion prediction by modeling motion context and enhancing motion dynamic, Twenty-Seventh International Joint Conference on Artificial Intelligence (IJCAI) (2018) 935–941.

- [33] C. Xu, L. N. Govindarajan, Y. Zhang, L. Cheng, Lie-x: Depth image based articulated object pose estimation, tracking, and action recognition on lie groups, International Journal of Computer Vision 123 (3) (2017) 454–478.

- [34] W. Mao, M. Liu, M. Salzmann, H. Li, Learning trajectory dependencies for human motion prediction, in: ICCV, 2019, pp. 9489–9497.

- [35] W. Mao, M. Liu, M. Salzmann, History repeats itself: Human motion prediction via motion attention, in: European Conference on Computer Vision, Springer, 2020, pp. 474–489.

- [36] Y. Cai, L. Huang, Y. Wang, T.-J. Cham, J. Cai, J. Yuan, J. Liu, X. Yang, Y. Zhu, X. Shen, et al., Learning progressive joint propagation for human motion prediction, in: European Conference on Computer Vision, Springer, 2020, pp. 226–242.

- [37] R. Caruana, Multitask learning, Machine learning 28 (1) (1997) 41–75.

- [38] L. Duong, T. Cohn, S. Bird, P. Cook, Low resource dependency parsing: Cross-lingual parameter sharing in a neural network parser, in: Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics and the 7th International Joint Conference on Natural Language Processing (Volume 2: Short Papers), 2015, pp. 845–850.

- [39] Y. Fang, W. Deng, J. Du, J. Hu, Identity-aware cyclegan for face photo-sketch synthesis and recognition, Pattern Recognition 102 (2020) 107249.

- [40] L. Zhao, H. Bai, J. Liang, B. Zeng, A. Wang, Y. Zhao, Simultaneous color-depth super-resolution with conditional generative adversarial networks, Pattern Recognition 88 (2019) 356–369.

- [41] D. C. Luvizon, D. Picard, H. Tabia, 2d/3d pose estimation and action recognition using multitask deep learning, in: CVPR, 2018, pp. 5137–5146.

- [42] X. Peng, R. S. Feris, X. Wang, D. N. Metaxas, A recurrent encoder-decoder network for sequential face alignment, in: ECCV, 2016, pp. 38–56.

- [43] L. Zhao, X. Peng, Y. Tian, M. Kapadia, D. Metaxas, Learning to forecast and refine residual motion for image-to-video generation, in: ECCV, 2018, pp. 387–403.

- [44] H.-S. Fang, Y. Xu, W. Wang, X. Liu, S.-C. Zhu, Learning pose grammar to encode human body configuration for 3d pose estimation, in: Thirty-Second AAAI Conference on Artificial Intelligence, 2018, pp. 6821–6828.

- [45] Y. Wang, W. Liang, J. Shen, Y. Jia, L.-F. Yu, A deep coarse-to-fine network for head pose estimation from synthetic data, Pattern Recognition 94 (2019) 196–206.

- [46] F. Xia, P. Wang, X. Chen, A. L. Yuille, Joint multi-person pose estimation and semantic part segmentation, in: CVPR, 2017, pp. 6769–6778.

- [47] A. Newell, K. Yang, J. Deng, Stacked hourglass networks for human pose estimation, in: ECCV, Springer, 2016, pp. 483–499.

- [48] Y. Ma, S. Soatto, J. Kosecka, S. S. Sastry, An invitation to 3-d vision: from images to geometric models, Vol. 26, Springer Science & Business Media, 2012.

- [49] W. Yang, W. Ouyang, X. Wang, J. Ren, H. Li, X. Wang, 3d human pose estimation in the wild by adversarial learning, in: CVPR, 2018, pp. 5255–5264.